Rendering high-resolution content for the Academy of Sciences Planetarium’s all-digital dome requires hundreds of render nodes and high-speed storage within a dedicated IT infrastructure.

Planetarium Studio Powers Renders for Digital Dome Visualisations

The California Academy of Sciences’ Morrison Planetarium houses one of the world’s largest all-digital dome theatre screens, measuring 75 feet in diameter. When creating new films, the digital artists in the production team at the Academy’s Visualization Studio must develop and process huge amounts of scene data, compositing CG content with high-resolution photography and live-action video into a format suitable for projection onto the Planetarium dome.


IT Infrastructure

A production crew of twelve plus four engineers produces content for the Planetarium’s theatre shows, for the Academy’s bi-monthly Science Today documentary series, and for all of the digital exhibits inside the aquarium, natural history museum and four-story tropical rainforest. Planetarium and production engineering manager Michael Garza said, “Capturing live-action footage is part of content development, but the dome screen is six times the surface area of a typical movie theatre screen, and current camera and lens systems cannot produce adequate image data to fill it. Consequently, we rely primarily on CG imagery, which requires much more time and resources to create.”


“We use a typical visual effects toolset, which includes Maya, Houdini, NUKE and various Adobe applications like After Effects and Photoshop,” Michael said. “We were using Houdini, for example, to convert the actual 1906 earthquake data supplied to us by Lawrence Livermore National Laboratory into visualizations for the show. We also use PipelineFX render management software.”

Render Management

“Our typical routine is that each artist works on two workstations, one Linux and one Mac, backed by an all-Linux render farm. We’ve found this configuration has several benefits. It allows us to support heavy workloads because we can use the network file system for better performance. It also gives us the ability to automate some of our workflows. Furthermore, because Linux and Mac OS are both Unix-based, we can use similar toolsets and don’t have to double our efforts in building systems. While Maya, Houdini and NUKE run well on Linux, Mac OS is easier to work with, especially for Adobe software.”


Otters feed on sea urchins that live in an extended kelp forest network at Point Lobos’ marine protected area.


“Our storage systems couldn’t supply the aggregate bandwidth required to service the render farm, particularly during peak demand,” said Michael. “Although we had a lot of storage capacity and performance, it was spread across isolated systems. So, for example, if we were working on multiple scenes stored on a single filer, that filer could not keep pace with render-farm requests.”

Edge Filer Cluster

“The only way to improve performance on our core-filer infrastructure was to distribute the load by manually moving data across multiple filers. But copying terabyte-size files takes time. Moving 2TB, for example, could take two days, which pushed revision turnaround two weeks past our 24-hour target. It reduced our output and eventually threatened the quality of the show’s final imagery.”
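The copy delay can be sanity-checked with simple arithmetic. The Python sketch below works out the average rate implied by moving 2TB in two days. It assumes decimal units and 48 hours of continuous copying. The 100MB/s comparison rate is a hypothetical figure chosen for illustration, not one reported by the studio.

```python
# Back-of-envelope check of the copy figure quoted above: 2TB moved in about two days.
# Assumptions: decimal units (1 TB = 10**12 bytes) and two days = 48 hours of copying.

TB = 10**12  # bytes per terabyte (decimal)
MB = 10**6   # bytes per megabyte (decimal)


def effective_rate_mb_s(size_bytes: float, hours: float) -> float:
    """Average throughput in MB/s implied by moving size_bytes in the given hours."""
    return size_bytes / (hours * 3600) / MB


def copy_hours(size_bytes: float, rate_mb_s: float) -> float:
    """Hours needed to move size_bytes at a sustained rate given in MB/s."""
    return size_bytes / (rate_mb_s * MB) / 3600


print(f"Implied rate: {effective_rate_mb_s(2 * TB, 48):.1f} MB/s")  # Implied rate: 11.6 MB/s
print(f"2TB at 100 MB/s: {copy_hours(2 * TB, 100):.1f} h")           # 2TB at 100 MB/s: 5.6 h
```

At the implied rate, a single 2TB move already takes twice the 24-hour revision target, and that is before any rendering starts.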


Left: Bird migrations follow the seasonal abundance of food seen in this global productivity visualization. Right: Travel patterns of modern human populations occur on timescales much shorter than those of the natural world’s ecological systems.

Financial constraints limited their options. Show budgeting starts as early as two to four years ahead of production, and the final production budget for any given show is rigid. Even if compute or storage requirements change, a budget cannot be redefined mid-project. Systems from conventional VFX and entertainment storage vendors were too costly, and none allowed incremental expansion. Neither a large initial purchase nor a complete replacement of the existing storage was feasible, and the studio could not afford large capital outlays based on future estimates.

Instead, the studio purchased a three-node Avere FXT 3200 Edge filer cluster. Avere was the only affordable system that would meet their performance objectives, use existing storage assets and allow incremental scaling. The Edge filer accelerates I/O to render nodes by automatically placing active data, such as texture files, on solid-state media. To relieve the queue bottlenecks, the clustered hardware handles high-speed I/O for about 400 rendering nodes and improves responsiveness to requests from artist workstations. Performance is designed to scale linearly as nodes are added, up to millions of operations per second and more than 100GB/sec of throughput.
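The idea of keeping active data on fast media can be illustrated with a least-recently-used (LRU) cache. The sketch below is a simplified model with several assumptions: LRU eviction by size, a hypothetical 10 GiB SSD tier, and a hypothetical texture path. Avere's actual placement logic is not described in this article. Treat the model only as a picture of why one slow fetch from a core filer can serve many render nodes.

```python
from collections import OrderedDict


class HotDataCache:
    """Toy model of an edge tier that keeps recently read files on fast media.

    Assumption: plain least-recently-used (LRU) eviction by total bytes. This is a
    generic caching illustration, not Avere's actual data-placement algorithm.
    """

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity = capacity_bytes
        self.used = 0
        self.files = OrderedDict()  # path -> size in bytes, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, path: str, size: int) -> str:
        """Serve one read: 'ssd' on a cache hit, 'core' when fetched from a core filer."""
        if path in self.files:
            self.files.move_to_end(path)  # mark as most recently used
            self.hits += 1
            return "ssd"
        self.misses += 1
        if size <= self.capacity:  # files larger than the whole tier bypass it
            # Evict least recently used files until the new one fits.
            while self.used + size > self.capacity:
                _, evicted_size = self.files.popitem(last=False)
                self.used -= evicted_size
            self.files[path] = size
            self.used += size
        return "core"


# Hypothetical workload: 400 render nodes read the same 200 MiB texture.
cache = HotDataCache(capacity_bytes=10 * 2**30)  # hypothetical 10 GiB fast tier
for _node in range(400):
    cache.read("textures/kelp_diffuse.exr", 200 * 2**20)
print(cache.hits, cache.misses)  # 399 1
```

In this model only the first request for a shared texture goes to the core filer, and the remaining 399 are served from the fast tier. That is the kind of load reduction the edge cluster is meant to provide for the render farm.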


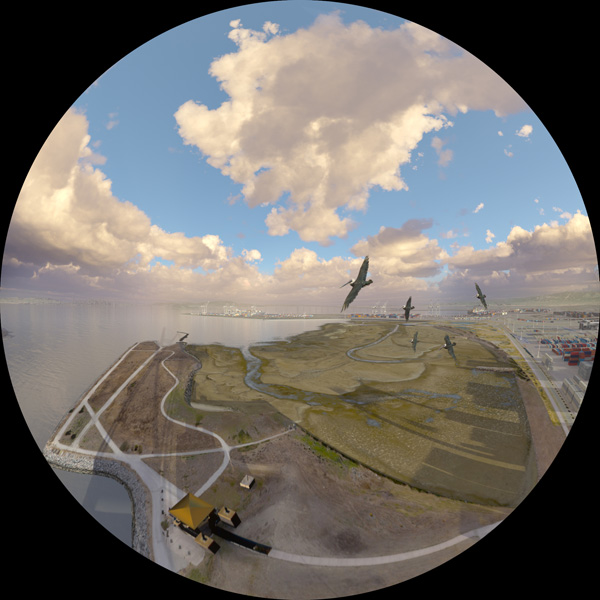

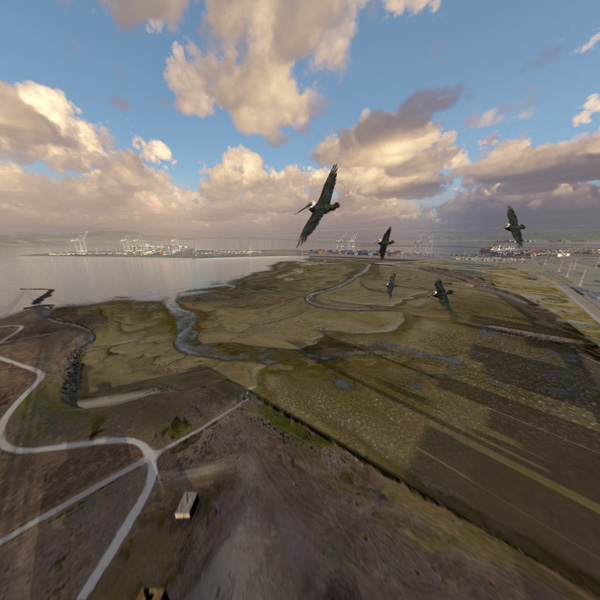

Pelicans fly above Middle Harbor Shoreline Park, a restored habitat adjacent to the shipping Port of Oakland.

Changing Demand

Overall, these factors prevent the downtime occasionally experienced with the studio’s original core-filer infrastructure. The Avere cluster accelerates I/O for routine rendering processes and also ensures that adequate bandwidth is available to handle peak demand, for example when artists are working with files of up to 50MB comprising up to 50 images. The architecture is flexible enough to adjust to an unpredictable VFX workflow and to changes in demand as a show develops, with room for growth that the studio lacked before. Michael said the Avere deployment was fairly straightforward to integrate into their production workflow and caused no disruptions. He has also quantified the results. “We expected the Avere system to produce a 2x to 5x performance improvement, but in direct-comparison testing on some of our most demanding shots, actual results sometimes showed a 10x boost. Where we could previously run just two or three simultaneous rendering processes, we’re now running 20 to 30,” he said.


More Detail, More Choices

“What also saves costs is that we can operate at a higher resolution throughout the workflow, which helps us make creative choices and clear up problems earlier in the process. It wasn’t possible before to see so many details, but now we can catch mistakes and refine the visual effects early on.” Because of the dome’s size, each show the team develops contains up to six times more data than a cinema production, and their data approximately doubles with every show. The team currently produces images at 4K x 4K, roughly the pixel area of two 4K cinema frames combined, and foresees a time when the format will be 8K x 8K.
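Moving from 4K x 4K to 8K x 8K doubles each side of the frame and therefore quadruples the pixel count. The sketch below estimates uncompressed frame sizes under assumptions that do not come from the studio: 4096 and 8192 pixels per side, RGBA channels stored as 16-bit half floats, and no compression. Real production files will vary with channel layout and compression.

```python
# Rough uncompressed frame sizes for the square dome formats mentioned above.
# Assumptions (not from the studio): 4K = 4096 px and 8K = 8192 px per side,
# RGBA with 16-bit half-float channels, no compression.

BYTES_PER_CHANNEL = 2  # 16-bit half float
CHANNELS = 4           # R, G, B, A
MiB = 2**20


def frame_bytes(side_px: int) -> int:
    """Uncompressed size in bytes of one square side_px x side_px frame."""
    return side_px * side_px * CHANNELS * BYTES_PER_CHANNEL


for label, side in [("4K x 4K", 4096), ("8K x 8K", 8192)]:
    print(f"{label}: {frame_bytes(side) / MiB:.0f} MiB per frame")
# 4K x 4K: 128 MiB per frame
# 8K x 8K: 512 MiB per frame
```

Under these assumptions, each 8K x 8K frame carries four times the data of a 4K x 4K frame. Storage bandwidth and capacity would need to grow by a similar factor.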


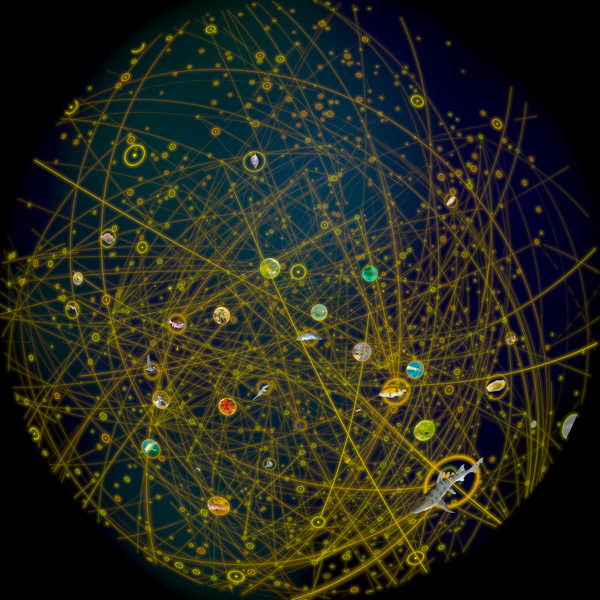

Above and below: A data-based representation of the San Francisco Bay’s complex food web.


Staying Online

“We’ve always used low-cost drives, but we’re now replacing 750GB disks with high-density 2TB drives and using that capacity as nearline storage. With more capacity in our core filers, we can keep more data online. Keeping all of our show data online for up to nine months after opening makes it easier to reuse assets for promotional purposes. Data management and administration used to cause performance hits that affected the artists, but now we can manage backup and archiving independently of production.”


R&D Outputs

The Academy’s focus is on education, and communicating with the scientific research and education community is an important part of the Planetarium's work. Sharing their research in whatever formats are convenient and useful, including VR, gives Michael's team wider exposure. It is also a way to find more outlets for their work and more opportunities for feedback from different sources.


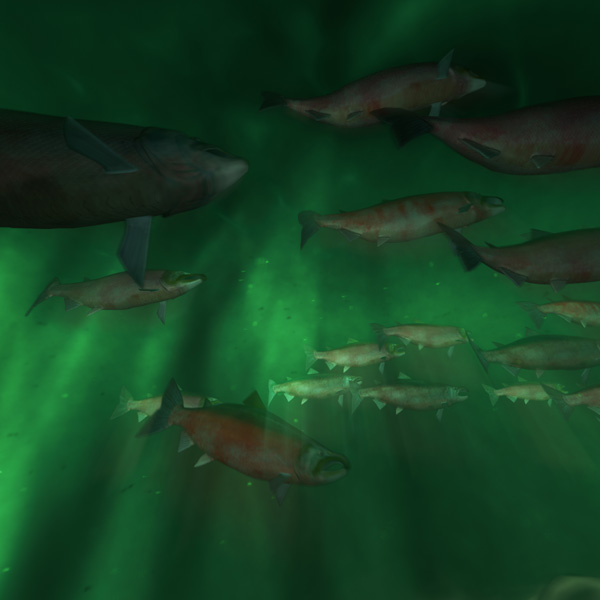

Salmon introduce critical nutrients into the forest streams in which they spawn.


“We’re still in the early stages of meeting our storage requirements. But because we will be increasing the number of shows we produce and also making a complete update to the Planetarium that will involve producing material at a higher resolution, we are planning two expansions in the near future. The storage system will be scaled up by adding more Avere nodes and more back-end filers to increase capacity and bandwidth. This type of expansion can be done in the background with no downtime.”

www.calacademy.org/exhibits/morrison-planetarium