Senior stereo supervisor Richard Baker talks about the challenges the Prime Focus World team encountered as sole stereo conversion vendor on ‘Edge of Tomorrow’.

Prime Focus Handles Stereo Conversion for Edge of Tomorrow

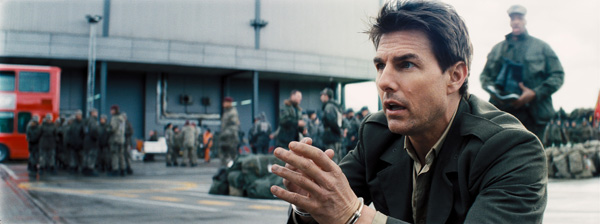

Prime Focus World was the sole stereo conversion vendor on ‘Edge of Tomorrow’. Senior stereo supervisor Richard Baker and senior vice president of production Matthew Bristowe worked with the production’s stereo supervisor Chris Parks. Richard led Prime Focus World’s stereo teams around the world to deliver all 2,095 shots for the film. Prime Focus Vancouver processed the VFX elements delivered by MPC in Vancouver, the London facility concentrated on the 910 shots involving head geometry, and the team in Mumbai completed the conversion of the remaining 1,185 shots. Prime Focus uses its own patented tools for stereo conversion, collectively known as View-D, which work within eyeon Fusion and Nuke. The teams also use tracking software and Maya, and the pipeline teams continually develop proprietary tools to deal with the new challenges they encounter on each project. For this film in particular, the Prime Focus World team employed its geometry mapping techniques for the lead actors, Tom Cruise and Emily Blunt, and for certain key environments, to ensure consistency and accuracy of depth throughout the intense action of the story.

Wanting to create highly sculpted, accurate faces, they chose to use facial cyber scans, captured using a 3D laser scanning device and rigged to drive the depth for all of the key actors. Richard said, “We retain a high poly count in our models because I feel that a high level of detail is important. The basic structure is important, of course, but to really achieve accurate stereo the model needs to translate all the subtle curvatures of the face. Using the head geo is especially beneficial with head turns and other movement because all the relationships, such as the cheek to nose, remain accurate. Details like this make all the difference. As another example, it can be surprising how far back a person’s ears are set, a detail that may only come under scrutiny when we recreate stereo, so knowing the true position helps a lot - we can always adjust if necessary.”

The heads were animated using artist-driven tools developed in-house, which allowed each artist to carry out the facial tracking and animation. This meant they didn’t need a separate team of artists for this stage and kept the development of the shot in one set of hands. Richard reviewed all the head animations and gave guidance on the disparity required for each shot. Overall shot disparity, consistency with similar shots, lens and distance from camera determine the depth.

“Among lots of factors contributing to the design of a shot, lens choice has an impact, as does the amount of action, so it’s about finding consistency in your stereo design while creating immersive, interesting 3D that complements the integrity of what the director filmed. There are times, with a close up for example, when the choice is not to position the background too positively because that depth can distract from the character in an emotional moment. One advantage of using our tools is that all the decisions can be made in post as the edit comes together.”

The stereo camera generation tool is part of the company’s proprietary Hybrid Stereo Pipeline. A stereo camera pair is generated from hand-sculpted disparity maps to produce a virtual rig that will work in any CG or compositing environment, allowing the VFX vendor to render CG assets with exactly the right amount of depth for a given slice of the scene. “Our Hybrid Stereo Pipeline - of which the stereo camera generation tool is a part - is essential to our process due to the difference between the linear, mathematically correct stereo generated during the visual effects process and the non-linear, creatively-driven stereo created in conversion,” Richard said. “It allows us to integrate the two in the same scene, through the generation of a virtual stereo camera pair that the VFX house can use to render the CG assets with exactly the right amount of depth for a particular portion of the scene.”
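
As a rough illustration of how a camera pair can be matched to a disparity map, the sketch below fits an interaxial distance and a convergence distance to disparities sampled from one slice of a scene. It assumes a simple parallel, off-axis stereo rig in which pixel disparity varies linearly with inverse depth. This is a textbook model, not Prime Focus World’s proprietary tool, and the function names, units and sample values are all hypothetical.

```python
def disparity_px(depth_m, focal_px, interaxial_m, convergence_m):
    """Pixel disparity for a point at depth_m (assumed off-axis rig model).

    Positive values sit behind the convergence plane, negative in front.
    """
    return focal_px * interaxial_m * (1.0 / convergence_m - 1.0 / depth_m)


def fit_stereo_rig(depths_m, disparities_px, focal_px):
    """Least-squares fit of interaxial and convergence distance.

    The assumed model d = c + m * (1 / Z) is linear in 1/Z, with
    m = -focal_px * interaxial and c = focal_px * interaxial / convergence.
    Needs at least two distinct depths and a finite convergence distance.
    """
    xs = [1.0 / z for z in depths_m]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_d = sum(disparities_px) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxd = sum((x - mean_x) * (d - mean_d)
              for x, d in zip(xs, disparities_px))
    slope = sxd / sxx
    intercept = mean_d - slope * mean_x
    interaxial_m = -slope / focal_px
    convergence_m = -slope / intercept
    return interaxial_m, convergence_m


# Hypothetical samples: depths in metres, disparities read off a sculpted map.
sample_depths = [1.5, 3.0, 5.0, 12.0, 40.0]
sample_disparities = [disparity_px(z, 2000.0, 0.065, 5.0)
                      for z in sample_depths]
rig = fit_stereo_rig(sample_depths, sample_disparities, 2000.0)
print("interaxial (m), convergence (m):", rig)
```

Because sculpted conversion depth is non-linear, a single fit like this would only hold for a limited portion of a shot, which is consistent with the article’s point that the cameras are generated for a particular slice of the scene.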


The disparity maps are tailored to produce the depth that the client is looking for in the scene. The cameras are 100 per cent accurate to the depth of the stereo image they have sculpted. Before handing off these cameras, the vendor’s CG objects are rendered through them to QC and check with the stereography department that the objects fit correctly within the converted scene. They were able to use the stereo camera generation technique somewhat differently with Nvizible on ‘Edge of Tomorrow’ than they had with Framestore on ‘Gravity’. Richard explained, “On ‘Gravity’ we were working with live CG assets due to the nature of the production and the approvals chain. The stereo camera generation tool allowed us to lock our converted scene and deliver this to Framestore with an exact virtual stereo camera pair, so that they could then finalise, render and comp their stereo CG assets into the converted scenes knowing that their volumetric properties would be exactly correct for each scene.

“It made more sense to do it this way than to bring Nvizible into the stereo approval chain because the details of hologram visual effects are challenging to convert due to the transparencies. The full mesh needs to parallax correctly and have clean negative and positive separation through the movement of the object and shot.” Prime Focus also further developed its use of particle FX for 3D moments, adding CG exploding debris and dust to scenes, as well as using cyber scans of set environments to maintain detailed accuracy across a wide range of shots. The use of particles or added debris is designed along with the depth. Often, certain sequences, such as the beach battle in ‘Edge of Tomorrow’, are conceived early on as scenes that would enhance the immersive quality of a movie by having multiple layers of dust and debris added to them.

“The benefit for the VFX house is that their composite can be 2D, and then we can take the layers and elements they provide for the conversion and add our own particles to enhance the 3D effect as required. Practical particles, for example from practical explosions or flying debris, existing in the plates become still another part of the conversion process. We have tools to extract them to create depth – sometimes we will clean-plate them if they are distracting to the shot, and replace them.”

www.primefocusworld.com

Words: Adriene Hurst. Images: Courtesy of Warner Bros Pictures