Elton John Video Productions Goes on Tour with DaVinci Resolve Studio

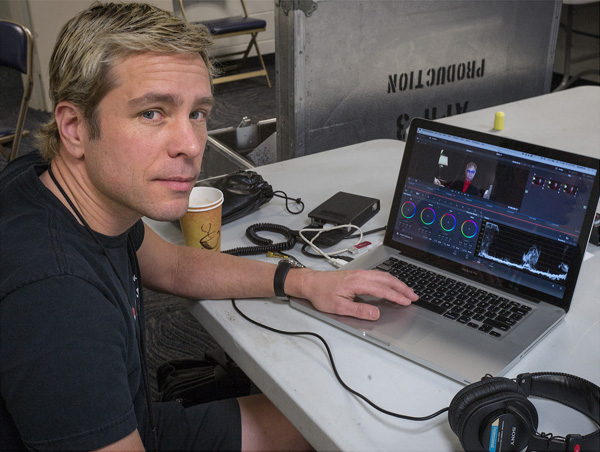

Video Director John Steer and On-tour Post Production Specialist Chris Sobchack work on production and post supporting a variety of video productions for singer/songwriter Elton John while he is on tour.

Chris Sobchack said, “While we're on the road, John Steer and I are responsible for shooting and post on video material used as content for Elton’s YouTube channel, clips for broadcast television and award shows, packages for fan clubs and VIPs, dedication videos and other purposes. It can involve archival footage, current tour footage and new footage that we, or outside video production companies, shoot, such as interviews and behind-the-scenes, and we work on DaVinci Resolve Studio in post to bring everything together, often on very tight deadlines.”

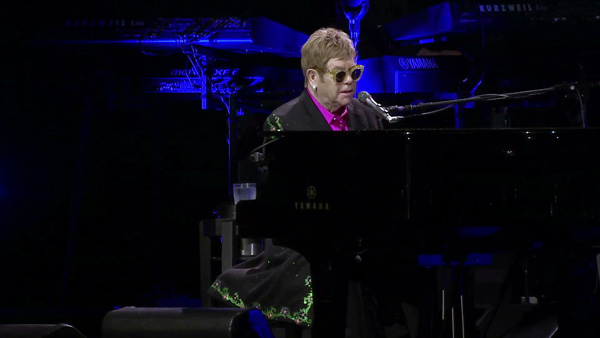

To shoot the interview and behind-the-scenes (BTS) footage on tour, the crew uses a Blackmagic Design Micro Studio Camera 4K in conjunction with a Micro Cinema Camera, a Video Assist and a Video Assist 4K. A HyperDeck Studio Pro and a HyperDeck Studio Mini record the live performances, and a MultiView 4 is used to monitor the camera feeds. A Teranex Mini and an UltraStudio Express also send camera signals to the video wall on stage.

The process involves taking tour footage with reference audio tracks from the sound engineer and deconstructing the footage, along with archival elements, BTS and newly shot footage, into component shots for editing, grading and audio sweetening. All of this can be done in Blackmagic DaVinci Resolve Studio.

John and Chris also have regular tour duties on top of their video production work, which leaves no time for transcoding or switching between programs. What they need, therefore, is a single system that can take them from media ingest to final output.

For Chris, audio post can be as simple as compression and levelling, or as complex as using some or all of the multitrack files the team records each night to augment the audio on a track, or even creating a complete studio mix from scratch. “I may also add and keyframe audience microphones to enhance the live ambiance or use the Fairlight page in Resolve to minutely fix any visual sync issues,” he noted.

For editing, John Steer splices the archival footage, BTS and newly shot footage with the raw footage from the tour’s live shows. “I handle the offline edit, while Chris handles the online. DaVinci Resolve Studio works very well for that kind of collaboration, because we can work simultaneously by sharing files back and forth,” he said.

“I also use DaVinci Resolve Studio to make copies of the whole show in a lower resolution, so we have a backup viewing copy. We can use the software to put together everything from video idents to full songs from the show while we are on the road touring. I find it natural and easy to use, Fairlight has made it straightforward to sweeten the audio as well, and its functionality keeps expanding.”

The lighting captured in the video also needs attention. “During the live performances, the lighting is constantly changing and, overall, the footage is darker than what’s needed for broadcast or the web, because the concerts are lit for live viewing rather than for a camera. My main objective is to retain the flair of the live show’s lighting design, but also be able to see Elton’s face. I also have to make the performance footage consistent with any BTS or newly shot footage,” said Chris. “In DaVinci Resolve Studio, I use gradients, vignettes on faces, HSL qualifiers and Power Windows to brighten things up and blend the widely varying colours in the shots.”
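Power Windows and vignettes are built into DaVinci Resolve Studio and are operated interactively, not through code. Purely to illustrate the idea of brightening a face while leaving the rest of the frame alone, here is a minimal Python/NumPy sketch of a soft-edged circular window that applies a gain inside the window and blends smoothly back to the untouched image outside it. It assumes RGB frames stored as floating-point arrays in the range 0 to 1; the function names, the linear edge falloff and the gain-only adjustment are hypothetical simplifications, not Resolve's implementation.

```python
import numpy as np


def soft_circular_window(height, width, cx, cy, radius, softness):
    """Hypothetical helper: a mask in [0, 1] that is 1.0 inside the circle
    and falls linearly to 0.0 over `softness` pixels beyond its edge."""
    ys, xs = np.mgrid[0:height, 0:width]
    dist = np.hypot(xs - cx, ys - cy)
    # Values above 1 (inside the circle) and below 0 (far outside) are clipped.
    mask = 1.0 - (dist - radius) / softness
    return np.clip(mask, 0.0, 1.0)


def brighten_in_window(image, mask, gain):
    """Hypothetical helper: apply `gain` where the mask is 1, leave the image
    untouched where it is 0, and blend linearly in between."""
    graded = np.clip(image * gain, 0.0, 1.0)
    m = mask[..., np.newaxis]  # broadcast the 2-D mask over the colour channels
    return m * graded + (1.0 - m) * image
```

For example, `brighten_in_window(frame, soft_circular_window(1080, 1920, 960, 400, 120, 60), 1.4)` would lift a region centred at pixel (960, 400) by 40 per cent with a 60-pixel feathered edge; the coordinates and values are made up for illustration.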

He continued, “I also reframe shots on occasion and rely on DaVinci Resolve Studio’s temporal noise reduction. Since we don’t shoot in light that is really video project-friendly, when we make the kinds of adjustments we need for broadcast, especially if it’s being up-resed for a prime time network for instance, this feature can take a shot from a zoomed in camera that was 60 yards away from the stage and make it look perfect.”

Temporal noise reduction exploits the way video frames change over time. The algorithm works out both which pixels have changed between frames and which pixels would be expected to change. By comparing the two, Resolve determines which pixels are noisy and which are not, and then calculates a new value for each noisy pixel based on the corresponding pixels in the surrounding frames. In short, the process uses data already present in the video stream to attenuate noise and improve the image.
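The comparison described above can be illustrated in a much simplified form. The Python/NumPy sketch below assumes three consecutive frames given as arrays of the same shape and a hypothetical `threshold` parameter: a pixel that differs only slightly from its neighbours in time is treated as noise and replaced with its temporal average, while a pixel that changes by more than the threshold is treated as genuine change and left alone. Resolve's temporal noise reduction also estimates motion between frames so that moving objects can be denoised too; this sketch leaves motion estimation out, so it shows the principle rather than Resolve's algorithm.

```python
import numpy as np


def temporal_denoise(prev_frame, frame, next_frame, threshold):
    """Hypothetical three-frame temporal denoiser without motion compensation."""
    # Work in floating point so differences of unsigned-integer frames cannot wrap around.
    prev_f, cur_f, next_f = (
        np.asarray(f, dtype=np.float64) for f in (prev_frame, frame, next_frame)
    )
    # Largest per-pixel change between the current frame and either neighbour.
    change = np.maximum(np.abs(cur_f - prev_f), np.abs(cur_f - next_f))
    # Small changes are treated as noise; large ones as real change such as motion.
    is_noise = change < threshold
    temporal_mean = (prev_f + cur_f + next_f) / 3.0
    # Noisy pixels take the temporal average; genuinely changing pixels are kept.
    return np.where(is_noise, temporal_mean, cur_f)
```

The threshold is the trade-off in this simplified version: set too low, little noise is removed; set too high, genuine movement starts to smear.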

Because multi-camera recording has not been practical on the tour, the video also has burned-in transitions. When grading, Chris picks a cut point between two shots and animates a colour transition using keyframes to make sure that the first frame of the second shot matches the last frame of the first shot. He said, “Instead of using the primary wheels, I use levels, and by noting down the numbers I can match them using the DaVinci Resolve Mini Panel. The panel adds a tactile feel to workflows, and since it has both dedicated and soft, page-specific knobs, I can use it to dial in and drop down to exact values, which helps to match all elements very quickly.”

www.blackmagicdesign.com
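The keyframed colour transition can be thought of as interpolating a grading parameter between keyframes. As a minimal sketch, assume the parameter is a single gain value applied to the second shot, with hypothetical keyframes: at the cut frame the gain is set so that the second shot's measured level matches the last frame of the first shot, and a few frames later it returns to 1.0 (the shot's own grade). The function name, the linear interpolation, the frame numbers and the level readings are all assumptions for illustration; Resolve handles keyframe interpolation itself.

```python
def interpolate_keyframes(keyframes, frame):
    """Hypothetical helper: linearly interpolate a parameter at `frame`.

    `keyframes` is a list of (frame, value) pairs sorted by strictly
    increasing frame number; outside the keyframed range the nearest
    keyframe's value is held.
    """
    if frame <= keyframes[0][0]:
        return keyframes[0][1]
    if frame >= keyframes[-1][0]:
        return keyframes[-1][1]
    for (f0, v0), (f1, v1) in zip(keyframes, keyframes[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + t * (v1 - v0)


# Hypothetical level readings noted down from the scopes.
level_a_last = 0.42   # last frame of the first shot
level_b_first = 0.55  # first frame of the second shot

# Gain on the second shot: match the first shot at the cut (frame 100),
# then ease back to the second shot's own grade (gain 1.0) by frame 112.
gain_keyframes = [(100, level_a_last / level_b_first), (112, 1.0)]

for f in (100, 106, 112):
    print(f, round(interpolate_keyframes(gain_keyframes, f), 3))
```

At the cut frame, the second shot's level multiplied by the interpolated gain equals the first shot's last-frame level, which is the match the keyframes are meant to guarantee.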