Synamedia and Tribal Ready partnered on the provision of video services for tribal communities, taking advantage of the cloud-based Synamedia Senza video platform.

Tribal Ready, a Native American-owned Public Benefit Corporation, is dedicated to tribal data sovereignty and digital equity. Sovereignty here refers to tribes' control over how their data is collected, stored and used, and to ensuring that these tasks are carried out in ways consistent with tribal culture and laws.

Alongside this goal is an interest in improving access to critical information, cultural resources and educational opportunities by delivering hyper-localised content and TV applications to tribal communities.

Until now, traditional infrastructure has limited the ability of Native American communities to connect with each other, share critical news and preserve their cultural heritage. With more than six million Native Americans now living outside reservations, the need for scalable, accessible communication tools has grown more urgent.

Tribal Ready focuses on connectivity, and is now moving beyond broadband alone to include video streaming, delivering both community-driven content and interactive applications to every screen connected to its broadband network.

Video Services for Tribal Communities

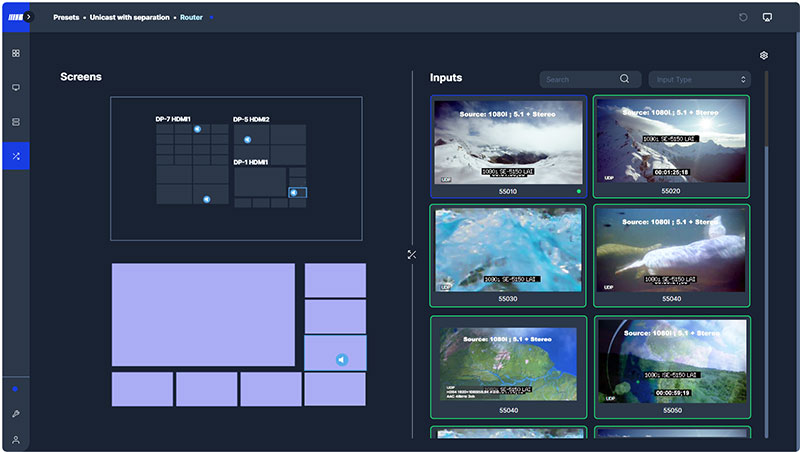

The partnership takes advantage of the cloud-based Synamedia Senza video platform, which was developed to overcome the financial and technological barriers that have traditionally held back community-specific video services. Because Senza uses the same web systems that run today's largest, most widely used platforms, Tribal Ready can consolidate local channels, deliver hyper-localised content and make community announcements without first investing in expensive hardware or relying on complex infrastructure.

Instead, Synamedia Senza delivers content to screens by moving all graphics processing to cloud-based resources. At the consumer end, all that is needed is the system's compact Cloud Connector device and an HDMI-enabled screen. This simpler, small-footprint approach opens up potential for new formats, services and operating models, and lower operating costs make it possible to enter markets that were previously out of reach.

"Our work involves helping Native communities thrive by removing barriers to digital equity," said Joe Valandra, Chairman/CEO of Tribal Ready. "This partnership with Synamedia brings us one step closer to seeing that Tribal members, regardless of where they are, have access to the content and information that matter most. It's about ensuring tribes have the tools they need to stay connected, celebrate their culture and engage in the digital economy."

Central Platform for Communications

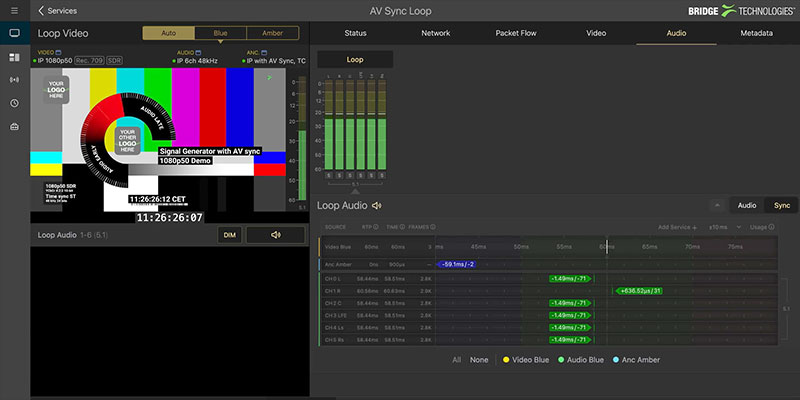

Because Senza is delivered as software-as-a-service (SaaS), providers only need to access its cloud resources when necessary. Users can take advantage of new low-latency streaming techniques and improvements to cloud infrastructure without hardware upgrades. Because the UI is rendered in the cloud, providers gain better performance and new features as they become available, and can update the interface to reflect changes, new audiences and personalisation.

Through this collaboration, Tribal Ready will be able to offer tribes a central platform for communications, from local news to educational resources and cultural programs. In doing so, it addresses the fragmentation of information across platforms and serves as a single destination for timely, reliable updates. Moreover, the ability to run local ads on the platform gives small businesses an opportunity to reach their communities, creating revenue streams that support economic growth and self-sufficiency in tribal communities.

This partnership aligns with Tribal Ready's long-term goals to advance broadband and digital equity for Native communities. Integrating video services into Tribal Ready's wider offering is part of a strategy to enhance digital infrastructure, promote workforce development and ensure that tribes have the resources to participate in modern digital life to the extent they need.

www.synamedia.com