‘Life of Pi’ was a chance for MPC in Vancouver to design two massive storms from the inside out. VFX Supervisor Guillaume Rocheron describes the team’s R&D.

EYE OF THE STORM

VFX supervisor Guillaume Rocheron at MPC Vancouver started to work on ‘Life of Pi’ in mid-2010, about six months before principal photography began in December in Taiwan. Director Ang Lee and VFX supervisor Bill Westenhofer were starting on previs, and MPC came on board at that stage, tasked with two large ocean storm sequences, the digital build of a cargo ship and the creation and animation of several CG zoo animals. “The project was a good fit for MPC,” said Guillaume, “owing to our R&D with Scanline’s Flowline water simulation software and experience with character animation and CG modelling.”


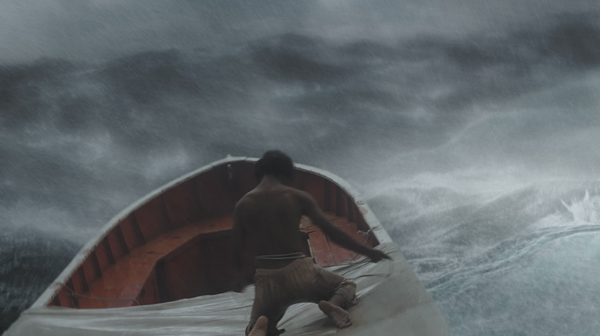

The two storm sequences, representing major turning points in the story, proved to be the most demanding. The movie was shot natively in stereo, and one of the principal challenges for MPC in terms of the quality of their work was the duration of director Ang Lee’s shots. The storms – the first involving a shipwreck and the second, the Storm of God, seen from a lifeboat on the open sea – amounted to about 15 minutes of the final film. Normally for VFX work, this amount of time would be spread over 200 to 300 shots or more. But for ‘Life of Pi’, those minutes were divided up over only about 100 shots. The entire two-hour film comprised only 960 shots in total.

Long Shots

In the first part of the story, young Pi travels from India to Canada on a cargo ship, The Tsimtsum, with his family and the animals from his father’s zoo when a massive storm overtakes them and sinks the ship. “Ang embraced the stereo process as an opportunity and technique for immersing the audience in the action. Shooting a sequence like the shipwreck using long shots that give the audience a chance to study every detail is an unusual approach to 3D,” Guillaume said. “Typically, directors would use fast cuts and flashes of lightning in a sequence like this to generate danger and excitement. In cuts of one or two seconds we can trick the eye – it’s a normal part of our work. But here we had to make shots of at least 10 to 20 seconds work. Ang wanted to emphasise the tragedy of the sequence by showing the detail clearly. It was a bold filmmaking move, but for visual effects it also became a major challenge.

“For example, we had two minute-long shots back-to-back. The first is underwater when Pi watches The Tsimtsum sinking to the ocean floor and recognizes that he is losing his family and everything he knows. In the following shot Pi resurfaces and climbs back into the lifeboat. Back in 2010, looking at the previs and knowing that it would all be in stereo, an initial priority was to raise and then maintain a consistently high level of detail across such shots. We would have nowhere to hide, especially regarding oceans and storms that need multiple simulations to create foam, white water and spray.”


Shipwreck Design

When they started designing the shipwreck storm, the idea was to base each shot on real storms. As well as realism, Ang indicated that he wanted the team to treat the ocean as a character, prompting a character study. What type of storm will this be? Is it a hurricane? If so, how does a cargo ship look in a hurricane at sea? Not a lot of reference was available. Finding some footage of tankers crossing the Pacific in storms of a similar magnitude to Ang’s vision, they used this as a realistic base for measurements – 250m long waves, 15 to 18m high, 12 to 14 seconds between waves with high winds producing spray and foam along the crests and lots of white water – and built a photoreal ocean in similar conditions.

“We could show this to Ang, take on his comments and suggestions and adjust the build from there,” said Guillaume. “His choices were mainly story-driven. For example, at first there was too much white water in the images because he wanted the sea to look blacker and more threatening during the shipwreck. It takes place at night, with only the lights from the ship as it sinks. Ultimately our job was to strike a balance between all of the elements that make up a real storm, and what Ang was after. Balancing these two aspects took a few months of working back and forth.”

Wave Tank

“In a way, this should be an unfilmable movie,” Guillaume said. “It all happens in the middle of the Pacific Ocean where you cannot take a film crew or the infrastructure to create a practical storm, or control a lifeboat with a tiger in it. Therefore the decision to shoot in a wave tank surrounded by blue screen was made early on, and the production engaged a theme park in Taiwan to build one of the largest tanks ever constructed for a film.”
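As a rough sanity check on the storm measurements quoted in the shipwreck design discussion (250m wavelength, 12 to 14 seconds between waves), the sketch below applies the standard deep-water dispersion relation, in which the period depends only on wavelength and gravity. This is a textbook approximation, not something the article says MPC used; the function names are made up for illustration.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def deep_water_period(wavelength_m):
    """Period in seconds of a deep-water gravity wave: T = sqrt(2*pi*L / g)."""
    return math.sqrt(2.0 * math.pi * wavelength_m / G)


def deep_water_speed(wavelength_m):
    """Phase speed in m/s: one wavelength travelled per period."""
    return wavelength_m / deep_water_period(wavelength_m)


# Reference wavelength quoted in the article.
period = deep_water_period(250.0)
speed = deep_water_speed(250.0)
print(f"period: {period:.1f} s, crest speed: {speed:.1f} m/s")
```

For a 250m wave this gives a period of about 12.7 seconds, which sits inside the 12 to 14 second range taken from the tanker footage.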


For the shipwreck, the DP Claudio Miranda, shooting in stereo with ARRI Alexa cameras, used the previs as a reference to determine the orientation and geography of the shots and where the localized light sources from The Tsimtsum would be. This way, the lighting on Pi and the lifeboat would blend seamlessly once the CG ship was added to each shot. The very long shots also proved to be a challenge to shoot, especially in the tank. Above the water, the cameras were on a Spydercam rig amid constantly moving waves, wind and rain. Once the live action had been shot, the detail and colour information the Alexas captured proved very useful for all of the blue screen extraction, made especially difficult in the tank storm footage because of the practical rain and mist covering the shots.

For MPC, further initial challenges came in trying to create those 15m storm waves, 250m long, each larger than the whole tank. Consequently, the audience is seldom looking at the real water. Guillaume explained, “The water was useful to the actor, and to produce realistic motion in the boat. But we generally rotoscoped Pi out of the plates and digitally replaced all surrounding elements and re-animated any waves. Shots in which the camera is slightly too wide were fully CG to give us control across these shots, for which we built a very high resolution digital double of Pi.”

Simulation Puzzle

“We also did quite a few takeovers. For example, when Pi is on the lifeboat as it drops down into the ocean, the first moment shows Pi, as captured on blue screen, before we take over into full CG to extend the motion of the boat and get it down into the ocean correctly. In the end, everything needed to look realistic and photoreal but at the same time, all details are story driven – the moment at which we take over with a CG character, how we animate the camera, or the way the ocean looks at any given moment.”


They spent a lot of time in pre-production deciding how to simulate the level of detail required, while recognizing that art direction was critical. Oceans involve huge simulations, but by definition a fluid simulation is hard to art direct and may be difficult for directors to deal with. “You launch simulations, and then change parameters to adjust the properties but you never know in advance exactly what you will get,” said Guillaume. “The artists know what isn’t working in one spot or another but simulations aren’t ideal for iterating out to a point where you can lock down the look. Nevertheless we had to produce 15 minutes of shots all based on water simulations. If Ang was going to want to change something each time we ran a new simulation, it wouldn’t be manageable.”

Ocean Animation Toolkit

“Ang wanted to be able to control exactly where each wave would be, its size and composition. He might say, ‘I need the waves to hit the side of the ship on frame 24.’ It’s the sort of action you need to be able to build up and iterate on. So Ang wanted to find a way to animate the ocean in a detailed way, and align layers to the simulation. The R&D departments at both MPC and Scanline worked on this idea together, through the partnership we have maintained with them for the last couple of years.”

They developed a new approach called refined sheets, which resulted in two major advantages. The first hinges on the fact that simulating an ocean is based on voxel grids. Traditionally the full volume of the ocean has to be simulated, from the bottom to the crest of the waves. Then the waves can be programmed to result in simulations. The refined sheets technique, however, let them take a pre-existing surface and simulate a thin blanket of voxels over it.
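To give a feel for why a thin blanket of voxels is so much cheaper than a full-depth grid, here is a back-of-the-envelope sketch. Every number in it is a hypothetical assumption chosen for illustration (patch size, simulated depth, voxel size), apart from the sheet thickness of roughly two feet, which the article mentions elsewhere. The sheet is approximated as a flat slab, whereas a real sheet would follow the animated surface.

```python
def voxel_count(width_m, length_m, depth_m, voxel_size_m):
    """Number of cubic voxels needed to fill a box-shaped region."""
    nx = round(width_m / voxel_size_m)
    ny = round(length_m / voxel_size_m)
    nz = round(depth_m / voxel_size_m)
    return nx * ny * nz


# Hypothetical ocean patch: 500 m x 500 m at 10 cm voxels.
PATCH_M = 500.0
VOXEL_M = 0.1

# Full-volume approach: assume 30 m from seabed proxy to wave crest.
full_volume = voxel_count(PATCH_M, PATCH_M, 30.0, VOXEL_M)

# Thin-sheet approach: about two feet (0.6 m) of water over the surface.
thin_sheet = voxel_count(PATCH_M, PATCH_M, 0.6, VOXEL_M)

print(f"full volume: {full_volume:,} voxels")
print(f"thin sheet:  {thin_sheet:,} voxels")
print(f"reduction:   {full_volume // thin_sheet}x")
```

Under these assumed numbers the sheet needs 50 times fewer voxels, a budget that could instead be spent on finer resolution near the surface.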


Guillaume talked about the progress of the R&D work. “We started with a geometrical representation of the ocean, derived with deformers. The R&D team designed an ocean animation toolkit, based on Tessendorf deformers and Gerstner waves, that we could use to shape the geometry as waves. The tools do not produce a photoreal, simulated ocean surface at this stage but do give us a base with the correct shape.

“This geometry could be passed to the animation and layout team and gave them a useful way to start animating and shaping the waves – as they would a character – to use as the underlying surface. Then we could apply our refined sheets technique on top of their work. That is, starting with their animated surface as a base, and then creating a thin blanket of voxels over it to simulate the water flow and interactions.”

Expressive Waves

“This process made quite a difference to us. It meant we could animate the ocean, get approval from Ang, and lock the shots editorially – including camera, the ocean itself, layout and animation – without actually running any simulations. It both saved time and gave us that iterative process we needed to work alongside the director. We could animate the waves as expressively as a character, and had a chance to keyframe the waves and composition.”

A second advantage was that they were only simulating the thin sheets of voxels over the ocean, instead of the entire depth. They could concentrate all computer power on simulating those two feet of water, which in turn allowed them to create a very high level of detail, and also push the quality of the simulation much further. To render the ocean surface, they wrote a new iterative meshing system for the simulation, looking at the mesh and ocean based on the camera frustum, and especially at the relationship between voxels and pixels.
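The Gerstner waves mentioned as a basis for the ocean animation toolkit can be illustrated with a minimal sketch. This is the textbook 2D formulation, not MPC's toolkit: each surface point is pushed sideways as well as up and down, which sharpens the crests. The function name, the wave list and the phase parameter (standing in here for an animator-facing control) are all assumptions, and the deep-water relation is used for wave speed.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def gerstner_point(x0, t, waves):
    """Displace a rest position x0 (metres) at time t (seconds).

    waves is a list of (amplitude_m, wavelength_m, phase_rad) tuples,
    all travelling in the +x direction. Returns the displaced (x, z).
    """
    x, z = x0, 0.0
    for amplitude, wavelength, phase in waves:
        k = 2.0 * math.pi / wavelength   # wavenumber
        omega = math.sqrt(G * k)         # deep-water angular frequency
        theta = k * x0 - omega * t + phase
        x -= amplitude * math.sin(theta)  # horizontal motion sharpens crests
        z += amplitude * math.cos(theta)  # vertical motion
    return x, z


# Hypothetical storm swell: a 16 m high, 250 m long primary wave
# plus a smaller secondary wave for variation.
storm_swell = [(8.0, 250.0, 0.0), (1.5, 60.0, 1.3)]

# Sample the surface profile every 10 m at time zero.
profile = [gerstner_point(float(x0), 0.0, storm_swell) for x0 in range(0, 500, 10)]
for x, z in profile[:5]:
    print(f"x = {x:7.2f} m, height = {z:6.2f} m")
```

Shifting a wave's phase moves its crest along the surface, which is one simple way a control like "hit the ship on frame 24" could be exposed. Keeping amplitude times wavenumber below 1 avoids the surface looping over itself.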


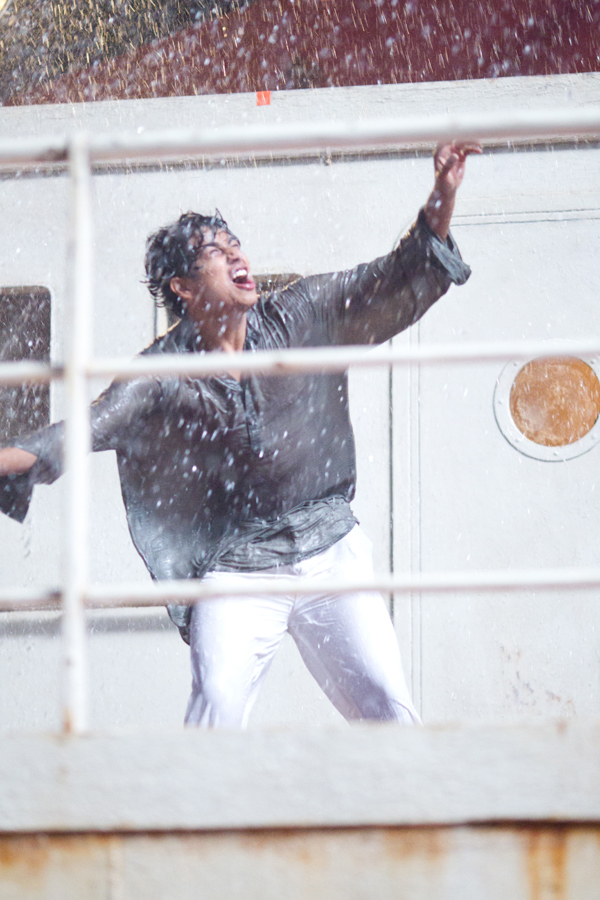

“We were never going to lose detail on the meshing of the ocean, but made sure it was fairly well optimized so we could render the very dense ocean surface with lots of detail. Then we had to render all of the point simulations for the foam and spray, which involved custom render scripts to send all of this data to the renderer,” Guillaume said.

Composite Control

They had large numbers of elements to simulate. In one shot in which Pi watches the prow of The Tsimtsum, you see a wave crashing over the front of it and a lot of white water rushes up to the camera, involving the simulation of some 1.5 billion water particles. While they tried to do as much as possible before rendering, rendering was often the only way to monitor what they were doing, so the optimization was quite important. They also implemented a full, detailed compositing workflow, breaking the work down into many layers, which gave them a chance to control and reassemble all the elements accurately in the composite. “We might render an entire shot and decide one splash had to change,” he said. “The workflow allowed us to render that single element, not the entire ocean, and re-composite it into the shot.”

The difference between the two storms – the shipwreck and the Storm of God – was mostly in looks and mood. During the shipwreck the sea was dark and menacing. In the Storm of God they had a wilder, crazy feeling, using much more white water, to match Pi’s frame of mind after 200 days at sea. It was fast moving and visually intense. The lighting was another significant difference. The second storm happens in the daytime. It had more visible storm elements and flickering lights, but the lighting scenario was based on the overcast sky, which was simpler to illuminate.

“The Tsimtsum, on the other hand, sank at night, with no other light sources than the ship itself. It meant shaping and art directing the look of every shot, largely by relying on the reflections on the surface of the water. The best way to do this was raytracing. Based on area lights, it allowed us a lot of control over the shape of the waves and the looks of the reflections, with proper fall-off,” said Guillaume.


The Tsimtsum

The live action portions of the cargo ship Tsimtsum comprised two small 20ft x 20ft deck sets on a gimbal. Any shot in which the audience sees Pi on the deck before he falls into the sea needed set extensions, and any time there is a wide view of the ship, or Pi is down in the water and no longer in contact with the deck, the audience sees a digital asset. MPC needed to complete a high-resolution hero build because, above and below the water, the camera views the ship from every angle and at close range. The original build was based on Art Department blueprints, and they also referenced LIDAR scans of the sets and texture references to match railings, doors and walls. They hired a boat for a day and sailed around Vancouver Bay shooting photo reference of thousands of ships. It was a heavy build with detailed geometry and about 800 textures, 4K and 8K, in order to cover everything, work with the camera and create water interactions.

On deck with Pi, no practical water was used - it was always digital - so they had only the deck set plus practical rain in the plates. No volumes of rushing water, no ocean, no real animals. Thus the deck shots represented some very complex composites. Guillaume said, “While our most complicated composites were those in which we only kept Pi and the little boat out at sea, in this first storm you are still only looking at Pi against a blue screen on a minimal set in a wave tank. Accurate integration of all water elements, rain layers, atmospherics, all in native stereo from start to finish, precluded any type of ‘cheat’ to the camera and required detailed compositing.”

Stereo Sink

“All plates were created and dealt with in stereo from beginning to end – no post conversion was carried out. For compositing we were using Nuke, with Ocula for vertical realignment and colour offsets. We wrote our shaders to work in a stereo workflow as well, so that we could render both eyes without re-computing everything. For us, the 3D challenge was not technical or even about design – the shots were all very well designed.


“It simply came back to the length of the shots. That was what made such heavy demands on quality. In one shot we might have 30 seconds of water to simulate and then composite together perfectly in stereo - complex simulations, complex characters, plus atmospherics – all completed without intercutting. For example, that dramatic underwater shot of the Tsimtsum sinking was also a full minute long.

“The production had built a deep tank to shoot the underwater plates for the shot, which show Pi in front of a blue screen. This required us to simulate the ocean surface seen from below, the crashing wave effect producing a mass of bubbles, and the murk and plankton suspended in the water. The elements also had to work together in stereo – placing Pi in the foreground with The Tsimtsum sinking in the background meant an extent of empty stereo depth in between. We had to balance those elements carefully to integrate them. Also, how much light should reach The Tsimtsum? In a real situation it wouldn’t be much, but we had the story to tell. Pi has to be looking at something distinct to express his loss. We continued to refine and balance this shot over about a year.”

Tragic Zoo

MPC’s animation team modelled, rigged and animated about 15 of the exotic animals, travelling with Pi’s family from their zoo in India, for shots during the shipwreck, including a leopard, a giraffe, monkeys and a rhino. Led by animation supervisor Darryl Sawchuk, the team’s main concern was to build the animals with sufficient realism to express their panic and add to the general sense of chaos and tragedy in the sequence, but not distract from what Pi is doing and feeling. “They are ambient characters,” said Guillaume. “To work out the choreography of their performance, we established with Ang how much the audience should pay attention to them. That is, as the audience watches, they know they are there, but they are not the priority.”

Nevertheless, the team visited the zoo and gathered full video and photo reference for the animals. They also built a complete muscle rig to allow subtle, realistic actions and gave them the correct wet-fur groom. While they were ambient, not hero characters, they had to hold up to close camera scrutiny. The exception was the zebra, which was a shared asset with Rhythm and Hues that MPC animated for this sequence. “As his actions drive part of the story, the zebra became our hero animal. We see him swimming into the corridor before he jumps from the ship’s railing and crashes into the lifeboat, breaking his leg and causing Pi in the lifeboat to fall into the water. This required more detailed hero animation than the rest of the animals,” said Guillaume.

www.moving-picture.com

Words: Adriene Hurst