David Stump ASC talks about high frame rate shooting for movies, and Qube Cinema’s CTO Rajesh Ramachandran explains HFR projection in cinemas.

Fast Frames – HFR through the Camera’s Eye


After working on numerous movie and TV productions as DP, visual effects DP, VFX supervisor and stereographer, including live action and 2D to 3D conversions, David Stump ASC has been shooting projects at high frame rates for many years and is now co-chair of a SMPTE study group on High Frame Rate for digital cinema. He has won an Emmy and a Scientific and Technical Academy Award, and in the 1980s he had the opportunity to shoot Showscan for Doug Trumbull, capturing to 65mm negative at 60fps. The release of ‘The Hobbit: An Unexpected Journey’ has rekindled filmmakers’ interest in high frame rates for cinema, although David explained that HFR techniques have been used in filmmaking for quite some time, since long before Showscan. Earlier cinema formats used them too, for example the original Todd-AO releases, which were shot at 30fps. Todd-AO was a high resolution film format developed in the 1950s and used for films such as ‘Oklahoma!’, ‘The Sound of Music’, ‘Patton’ and many others.

Frame Rates On Set

Equipment is not the main issue for modern cinematographers and crews shooting HFR for cinema. Variable frame rate cameras already exist, and only a few hardware changes need to be made on set. Lighting must be taken into account: because each frame gets a shorter exposure at a given shutter angle, the DP has to be aware that the resulting images will look darker to viewers. Storage requirements also increase. Shooting at 60 fps, for example, requires two and a half times the storage space traditionally needed for 24 fps. Monitoring constraints can also arise. Footage for ‘The Hobbit’, shot at 48 fps, could be viewed on a 24 fps monitor by putting the material through a frame sorter, but displaying it on a conventional monitor directly from the camera requires some adaptation.
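The storage and lighting consequences above come down to simple ratios. The sketch below is illustrative only: it assumes the amount of data per frame stays constant across frame rates and that the shutter angle is held fixed (180° by default here, a common choice but an assumption), so exposure per frame scales with 1/fps. The function names and the 5 MB-per-frame figure are hypothetical.

```python
import math


def storage_ratio(capture_fps: float, base_fps: float = 24.0) -> float:
    """Storage multiple relative to base_fps, assuming constant data per frame."""
    return capture_fps / base_fps


def storage_gb(minutes: float, fps: float, mb_per_frame: float) -> float:
    """Total storage in gigabytes (1 GB = 1000 MB) for a given running time."""
    frames = minutes * 60 * fps
    return frames * mb_per_frame / 1000


def exposure_time_s(fps: float, shutter_angle_deg: float = 180.0) -> float:
    """Exposure per frame in seconds: the open fraction of one frame period."""
    return (shutter_angle_deg / 360.0) / fps


def stops_lost(capture_fps: float, base_fps: float = 24.0,
               shutter_angle_deg: float = 180.0) -> float:
    """Light lost per frame, in stops, when the shutter angle is unchanged."""
    ratio = exposure_time_s(base_fps, shutter_angle_deg) / exposure_time_s(
        capture_fps, shutter_angle_deg)
    return math.log2(ratio)


if __name__ == "__main__":
    print(storage_ratio(60))          # storage multiple of 60 fps versus 24 fps
    print(storage_gb(10, 48, 5.0))    # 10 minutes at 48 fps, hypothetical 5 MB/frame
    print(round(stops_lost(48), 2))   # stops lost going from 24 to 48 fps
    print(round(stops_lost(60), 2))   # stops lost going from 24 to 60 fps
```

At a fixed shutter angle, doubling the frame rate halves each frame's exposure, which is one stop; the DP can win it back with more light, a wider aperture, a wider shutter angle or higher sensitivity.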

Image: Courtesy of Warner Bros. Pictures

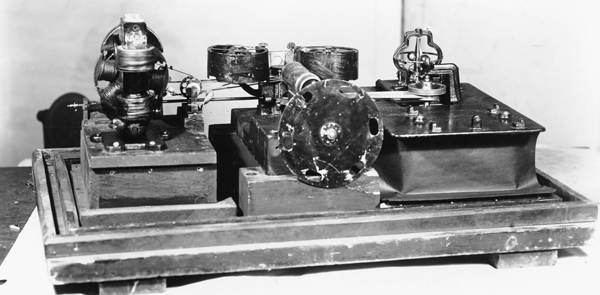

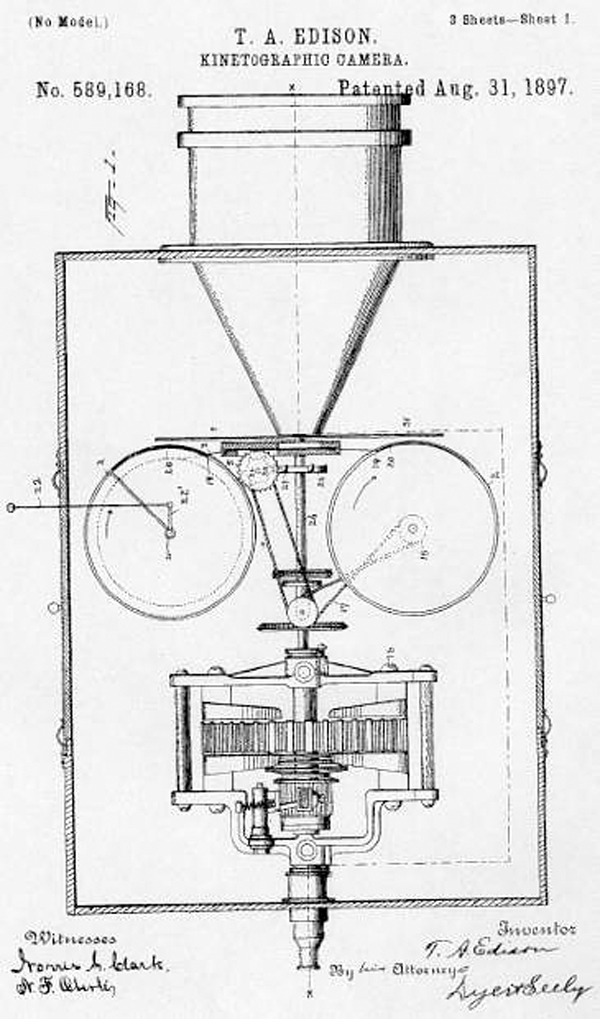

Although David doesn’t see these kinds of hardware alterations as a major obstacle for a film crew, he believes that shots do need to be conceived differently when capturing a story. “Higher frame rates render images with so much more clarity that you can’t hide elements in the dark, or get away with obscuring movement in the image the way you might at a lower rate,” he said. “Regarding the relationship between resolution and frame rate, a cinematographer interested in making his footage more closely match human vision should consider certain factors in the way digital cinema is leaving the constraints of film behind. These are higher resolution, higher frame rate, higher bit depth and brighter projection. They are all closely intertwined when trying to make motion images that approximate our vision. So far, compared with what has been achieved in the other factors, in my view the 24p frame rate has done least to reach that goal.”

The Happy Medium

Thomas Edison, inventor of the kinetograph movie camera in the 1890s, needed to specify the size of the film rolls he wanted manufacturers to make for it. He nominated 35mm fairly arbitrarily, aiming for a size that would be convenient for handling, perforating and developing. David said, “This size was about half the width of commercially available, un-perforated, 70mm rolls of Kodak film. In effect, if a photographer bought the available film and split it down the centre, the result would be 35mm film.


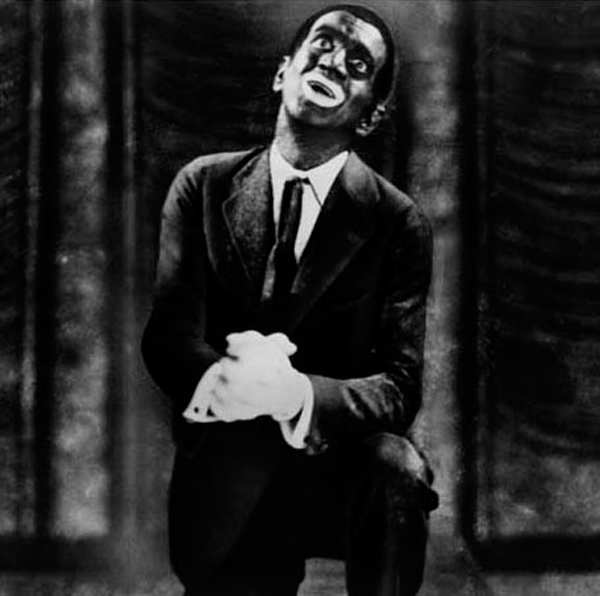

“More significantly, this also accidentally gave enough resolution to suit both the cinematographers and the viewers. Even after 100 years of shooting, DPs have never seemed to find the resolution this format is capable of to be lacking. Edison had accidentally arrived at a medium that gave us 4K to 6K to 8K of resolution with that size, still allowing for what I call Hollywood’s margin of success.

Synching Sound & Vision

“That is, the movie industry generally aims to make films at the narrowest margin of success. Consequently, when engineers from Warner Brothers and Western Electric later worked on synchronizing sound to images for ‘The Jazz Singer’, they first made a study to find the ideal frame rate at which to run the film. They chose 24 fps because 22 fps still caused flickering and other image problems, 24 fps looked perfectly fine, and 26 fps didn’t look sufficiently better to warrant paying for the extra two frames per second.” Thus, 24 fps was an economic decision that produced the narrowest margin of success: it was fast enough to avoid flicker, it worked well enough, and it avoided the extra cost of a higher rate.
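The economics of that choice can be put in numbers. This sketch assumes standard 4-perf 35mm film with 16 frames per foot (an assumption about the format, not something stated in the interview) and simply computes how much raw stock each candidate rate consumes; the function names are hypothetical.

```python
FRAMES_PER_FOOT = 16  # assumption: standard 4-perf 35mm film


def feet_per_minute(fps: float) -> float:
    """Feet of 35mm film consumed per minute of running time."""
    return fps * 60 / FRAMES_PER_FOOT


def extra_stock_fraction(fps: float, base_fps: float = 24.0) -> float:
    """Extra film used at `fps` compared with `base_fps`, as a fraction."""
    return feet_per_minute(fps) / feet_per_minute(base_fps) - 1


if __name__ == "__main__":
    for rate in (22, 24, 26):
        print(f"{rate} fps: {feet_per_minute(rate)} ft/min")
    print(f"26 fps uses {extra_stock_fraction(26):.1%} more stock than 24 fps")
```

Under that assumption, 24 fps works out to 90 feet per minute, and 26 fps would have added roughly 8 per cent to every foot of negative and print for a difference the engineers judged not worth paying for.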


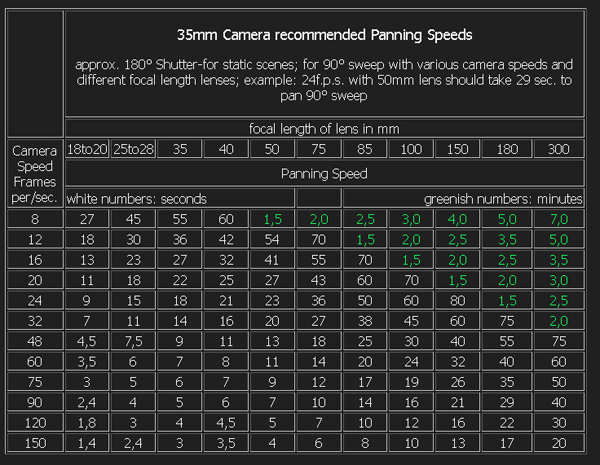

However, David pointed out that in other respects 24 fps has not kept pace as motion picture production has developed, for example when producing material for very large screens or capturing fast-moving content. As a result, cinematography manuals are full of tables listing optimum panning speeds for shooting at 24 fps on different cameras. On the other hand, while he feels that attachment to the look of 24 fps is hard to justify on its own, he does not advocate gratuitously raising the frame rate if there is no compelling reason for it in the context of the movie. “In some cases it will have more of an impact than in others. As a creative tool it has to be properly understood,” David explained. “If a director were going to make a high action film and felt it was important for the viewer to see every detail of the action, then HFR would contribute effectively to that director’s vision. Conversely, a director might want to use the judder and strobing of slower rates to hide something. Frame rate should become an option that DPs consider more frequently as they plan shots. I certainly include high frame rate techniques for cinematographers in the courses I teach at the Global Cinematography Institute.”

Catching Up with HFR

He reflected that using higher frame rates as a regular option is mainly a matter of catching up: refining the storage and monitoring hardware used in on-set procedures. “Regarding light, some people have commented that footage in ‘The Hobbit’ reveals too much detail, which interferes with the storytelling. But DPs can learn how to light effectively to control the amount of detail across their shots, and both cinematographers and directors can learn to decide at the DI and colour correction stages what to highlight and what not to show.”
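One way to see why fast pans judder at 24 fps is to compute how far an object jumps between consecutive frames. The sketch below assumes constant-speed motion across the full frame width; the 1920-pixel width and the 16-pixel "maximum step" are hypothetical illustration values, not figures from any published panning table, and the function names are likewise hypothetical.

```python
def pixels_per_frame(frame_width_px: float, crossing_time_s: float, fps: float) -> float:
    """Jump between consecutive frames for an object that crosses the
    full frame width at constant speed in crossing_time_s seconds."""
    return frame_width_px / (crossing_time_s * fps)


def min_crossing_time_s(frame_width_px: float, max_step_px: float, fps: float) -> float:
    """Shortest crossing time (i.e. the fastest pan) that keeps the
    per-frame jump at or below max_step_px."""
    return frame_width_px / (max_step_px * fps)


if __name__ == "__main__":
    width = 1920     # hypothetical frame width in pixels
    max_step = 16    # hypothetical judder threshold in pixels per frame
    for fps in (24, 48, 60):
        step = pixels_per_frame(width, 5, fps)
        fastest = min_crossing_time_s(width, max_step, fps)
        print(f"{fps} fps: {step:.1f} px/frame for a 5 s crossing, "
              f"fastest pan {fastest:.2f} s per frame width")
```

Doubling the frame rate halves the jump per frame, so a pan can be twice as fast for the same step size; that is the reasoning behind rate-dependent panning tables.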


Frame integration is a way to derive versions at various frame rates from a sequence shot at a high rate. Each version alters how motion is rendered and the aesthetics of the images. You might shoot footage at 120fps and extract a 24fps version by sorting frames, for example keeping one short-shutter-angle frame out of every five and discarding the other four, or by integrating each group of five frames into a single image equivalent to a 360° shutter. “In terms of post production, high frame rate material can actually make tracking and some other visual effects and compositing tasks easier due to the increased temporal resolution, except, of course, that teams now have two or more times as many frames to deal with! Nevertheless, high frame rates increase apparent resolution more profoundly than pixel resolution does,” David said.

Mechanical Constraints

While spatial resolution (how closely spaced lines can be resolved in an image) has always been available to cinematographers because of the original decisions about film manufacture, they have not always had enough temporal resolution (how finely each second of motion is divided into frames during capture) because of the early decisions Warner Brothers and Western Electric made at the time of sound synchronisation.
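The two ways of deriving 24 fps from 120 fps material can be sketched with a toy model in which each frame is a list of pixel values. This assumes pixel values are linear light (averaging gamma-encoded values would not match a real longer exposure), that the 120 fps footage was shot with a 360° shutter, and that an incomplete trailing group of frames is dropped; the function names are hypothetical.

```python
from typing import List, Sequence

Frame = List[float]  # toy model: a frame is a flat list of linear-light pixel values


def sort_frames(frames: Sequence[Frame], factor: int) -> List[Frame]:
    """Frame sorting: keep the first frame of each complete group of
    `factor` frames and discard the other factor - 1."""
    return [list(frames[i]) for i in range(0, len(frames) - factor + 1, factor)]


def integrate_frames(frames: Sequence[Frame], factor: int) -> List[Frame]:
    """Frame integration: average each complete group of `factor` frames
    pixel by pixel, simulating one longer exposure."""
    out: List[Frame] = []
    for start in range(0, len(frames) - factor + 1, factor):
        group = frames[start:start + factor]
        out.append([sum(pixels) / factor for pixels in zip(*group)])
    return out


def effective_shutter_angle(capture_angle: float, frames_used: int, factor: int) -> float:
    """Equivalent shutter angle at the lower rate when `frames_used` of every
    `factor` captured frames contribute to each output frame."""
    return capture_angle * frames_used / factor


if __name__ == "__main__":
    factor = 120 // 24                                     # 5 captured frames per output frame
    clip = [[float(i), float(i * 2)] for i in range(10)]   # ten 2-pixel frames at 120 fps
    print(sort_frames(clip, factor))                       # keeps frames 0 and 5
    print(integrate_frames(clip, factor))                  # averages frames 0-4 and 5-9
    print(effective_shutter_angle(360, 1, factor))         # sorted version
    print(effective_shutter_angle(360, factor, factor))    # integrated version
```

Keeping one frame in five gives crisp, short-exposure frames (a 72° equivalent) with more strobing; integrating all five gives smooth 360° motion blur. Averaging only some of each group, say two of the five, would give an intermediate angle (144° by the same formula).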


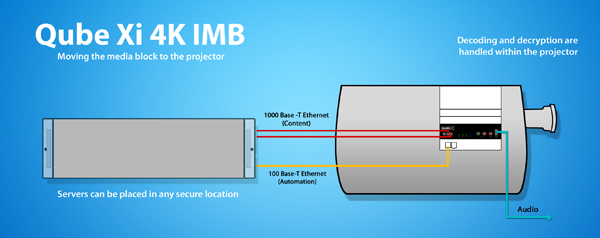

“Thomas Edison explained that the kinetograph was hand cranked at 46 fps, and said he believed this was barely sufficient for viewers,” said David. “Also, because of the movement he had developed for it, the frame was exposed for nine tenths of the time and shuttered for one tenth of the time. The physical, mechanical constraints of later shuttered camera designs, in which the shutter covered the film while each new frame was pulled down into the aperture, resulted in a 50 per cent duty cycle: 50 per cent open, and 50 per cent shut while positioning the new frame.

“These constraints remained in place until digital photography developed. However, because digital cameras transcend this constraint, cinematographers can keep the shutter open 99.9 per cent of the time if they want to, although they may still be uneasy with the look of the 360° shutter image.”

The Hobbit - New Zealand Premiere

Rajesh Ramachandran is chief technology officer at Qube Cinema, the company that provided the high frame rate server system for the world premiere of ‘The Hobbit: An Unexpected Journey’ on the Titan XC screen at the Reading Courtenay theatre in Wellington, NZ on 28 November 2012. Qube equipment was also used for the London premiere at the Empire Leicester Square on 12 December. The Qube XP-I server streamed a high bit rate, 48 fps stereoscopic 3D DCP to two Qube Xi Integrated Media Blocks, or IMBs. Rajesh explained some of the challenges of the premiere and of HFR projection more generally. “The key components of 3D HFR exhibition are the DCP content, the server, the integrated media block and the projector. We need to be able to stream DCP content at 48 fps or higher, at high bit rates, from a server to an IMB housed in a projector,” he said. “We also have to be sure that the 3D system used in the theatre is capable of supporting HFR.”
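Returning to David's description of duty cycles: they map directly onto the shutter angles cinematographers use, since the angle is simply the open fraction of each frame period times 360°. The sketch below converts the three cases he mentions (Edison's nine tenths, the 50 per cent of mechanical shutters, and a digital 99.9 per cent) into shutter angles and per-frame exposure times. The function names are hypothetical, and 24 fps for the last two cases is an assumption.

```python
def shutter_angle_deg(open_fraction: float) -> float:
    """Shutter angle for a given duty cycle (fraction of the frame period open)."""
    if not 0.0 < open_fraction <= 1.0:
        raise ValueError("open_fraction must be in (0, 1]")
    return 360.0 * open_fraction


def exposure_time_s(fps: float, open_fraction: float) -> float:
    """Exposure per frame in seconds: the open part of one frame period."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return shutter_angle_deg(open_fraction) / 360.0 / fps


if __name__ == "__main__":
    cases = [
        ("Edison kinetograph, 9/10 open at 46 fps", 0.9, 46),
        ("Mechanical shutter, 50% open at 24 fps", 0.5, 24),
        ("Digital, 99.9% open at 24 fps", 0.999, 24),
    ]
    for label, fraction, fps in cases:
        angle = shutter_angle_deg(fraction)
        t = exposure_time_s(fps, fraction)
        print(f"{label}: {angle:.1f} deg, about 1/{1 / t:.0f} s")
```

A 50 per cent duty cycle is the familiar 180° shutter (1/48 s at 24 fps), while a near-100 per cent digital duty cycle approaches the 360° look David mentions.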


“To get to 48 fps in 3D, we needed Series 2 projectors, which first came on line in early 2010. Stereo HFR data can be shown using one or two projectors. The two-projector setup is preferable since it doubles the brightness, which is essential for exhibition on larger screens. ‘The Hobbit’ can be presented in 3D HFR through a single IMB and, in fact, most screens showing 3D HFR use a single IMB in one projector, but low light levels remain a challenge.”

Brighter Projection

Rajesh said, “For this showing of ‘The Hobbit’, dual IMBs and dual projectors made the 3D screening as bright as 2D screenings. Usually 3D brightness is about 4.5 foot-lamberts. At a recent screening of ‘The Hobbit’ in 3D HFR in Chennai, India, we used a dual projector high-brightness configuration, with a single server and dual IMBs, to achieve 14 foot-lamberts, the standard level of illumination for 2D films.”

The older servers and Series 1 projectors, with their HD-SDI connections, could not have supported the bandwidth necessary for HFR at all. The new IMBs, using a Texas Instruments interface, can deliver uncompressed HFR content to the Series 2 projectors in which they are embedded. The Qube Xi IMB, for example, can deliver up to 4K at 30 fps or 2K at 120 fps to the projector. The DCP data is streamed from the server to the IMBs at high bit rates. Although a single Qube XP-I server can stream up to 1 Gbps of DCP data through a dual Gigabit Ethernet interface to two Qube Xi IMBs, some server/IMB setups require multiple servers to exhibit 3D HFR. “In terms of content, going to 48 fps means that we have to almost double the bit rate to get the same level of compression as for non-HFR material,” Rajesh said. “For example, the bit rate chosen for ‘The Hobbit: An Unexpected Journey’ was 450 Mbps. So cinema server manufacturers whose equipment is used to show ‘The Hobbit’, including us, had to ensure that their server systems and storage could sustain 450 Mbps for the picture, sound and auxiliary content.”

Synchronized IMBs are a benefit because they allow multiple projector playback. In the case of 3D this means dual projector playback: one projector shows left-eye content, the other right-eye content. This doubles the brightness by displaying both eyes simultaneously and constantly, rather than having a single projector continuously alternate between left-eye and right-eye content. Consequently, 3D motion improves overall: it is easier for the eyes and brain to process, and closer to how human vision actually works.

Cinema Experience

The frame rate for content captured at higher frame rates will, at least in the beginning, need to be reduced for some distribution and display platforms. “At this stage in development, you need separate DCPs for each frame rate. So, for ‘The Hobbit’, there are two separate DCPs, one at 24 fps and one at 48 fps,” said Rajesh. “Theoretically, it is possible to do the conversion on the fly, but a filmmaker would want to see and control how it will look, and would probably question manipulation at the theatre level.”

Considering the role that projection plays in bringing HFR productions to viewers, in a way that for now can only be experienced in a cinema, Rajesh feels that higher frame rates alone don’t provide a differentiated experience for cinema goers. “There are TVs that run at 120 fps or more. The point is that there isn’t 120 fps content for home viewing. All of these TVs synthesize in-between frames by interpolating data from successive frames, to give users a higher frame rate presentation,” he said. “While there has been great debate about the aesthetics of 3D HFR, between those who like it and others who do not, everyone agrees it offers a more immersive experience. It also provides another creative tool for filmmakers. Cinema in turn brings a larger presentation, with higher resolution, because it contains more temporal information. That’s its selling point.”

www.qubecinema.com
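Rajesh's point about nearly doubling the bit rate is easiest to see as a per-image budget: bit rate divided by images per second. The sketch below assumes the DCI ceiling of 250 Mbps as the 24 fps reference and treats stereo 3D as two eye images per frame; whether the 450 Mbps figure for ‘The Hobbit’ is shared across both eyes is an assumption here, so the eye count is a parameter. The function names are hypothetical.

```python
def megabits_per_image(bit_rate_mbps: float, fps: float, eyes: int = 1) -> float:
    """Average compressed data available for each picture (one eye, one frame)."""
    return bit_rate_mbps / (fps * eyes)


def gigabytes_per_minute(bit_rate_mbps: float) -> float:
    """Data read from storage per minute at a constant bit rate (1 GB = 1000 MB)."""
    return bit_rate_mbps * 60 / 8 / 1000


if __name__ == "__main__":
    print(f"250 Mbps at 24 fps, 2D: {megabits_per_image(250, 24):.2f} Mbit/image")
    print(f"450 Mbps at 48 fps, 2D: {megabits_per_image(450, 48):.2f} Mbit/image")
    print(f"450 Mbps at 48 fps, 3D: {megabits_per_image(450, 48, eyes=2):.2f} Mbit/image")
    print(f"450 Mbps sustained: {gigabytes_per_minute(450):.3f} GB per minute")
```

Even at 450 Mbps, each 48 fps image gets slightly less data than a 24 fps image at 250 Mbps (about 9.4 versus 10.4 Mbit), which is why "almost double" is the right description; a server sustaining that rate must also read roughly 3.4 GB from storage every minute.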

Words: Adriene Hurst