‘Independence Day’ Resurges with Courageous VFX

When audiences rejoin the ‘Independence Day’ story in ‘Resurgence’, countries around the world are at work on an immense defense program to protect the planet against the return of the alien invaders they drove out 20 years earlier. They try using recovered alien machinery and engineering to secure the population, but soon realize that technical innovation alone won’t be enough to protect the Earth from the aliens’ renewed force.

Like the story, this movie’s numerous, ambitious visual effects shots were a global mission, with contributions from about a dozen vendors. This article covers the work of MPC, Cinesite and Ncam. MPC started on the project during pre-production, creating the opening shot and first sequences. Cinesite produced battle scenes with the enormous alien Mother ship over Area 51, and joined much later in the project, when production VFX supervisor Volker Engel and director Roland Emmerich already had a very clear vision of the movie in mind. Ncam’s camera tracking system was used throughout production and into post to monitor the shoot against the plans for VFX production and give the vendors a head start.

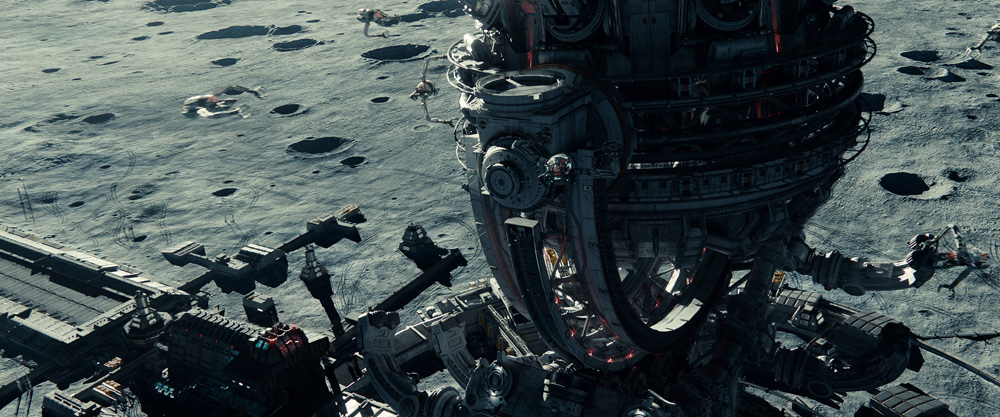

MPC on the Moon

VFX Supervisor Sue Rowe, CG Supervisor Chris Downs and 2D Supervisor Sean Konrad led MPC’s VFX team and worked with Volker Engel to create more than 200 shots. MPC opens the film with a sequence set on the Moon, beginning with the arrival of a new weapon at the Earth Space Defense Station. “MPC joined the shoot fairly early on since we had the first shot and our sequences were some of the first in the film,” said Chris Downs.

MPC built the Moon itself, including two main lunar environments, the Sea of Tranquility and the Van de Graaff Crater. For this, the team researched NASA photography and used production concept art and Hubble references for views from the Moon to Earth. They also studied the properties of the lunar surface to make sure the simulations were geologically representative, which helped make the destruction of the organic surfaces look photoreal.

“For Volker, matching the qualities of the Moon’s surface was critical to the look of these shots,” Chris said. “Equally important was coming up with a look for the engineering, weaponry, buildings and vehicles that referenced those in the original film, but with 20 years of human development. The production was very keen to find a look for the FX that bridged the gap in visual effects technology between then and now, while retaining the feel of the original film.”

Tugs, Buggies and Moon Dust

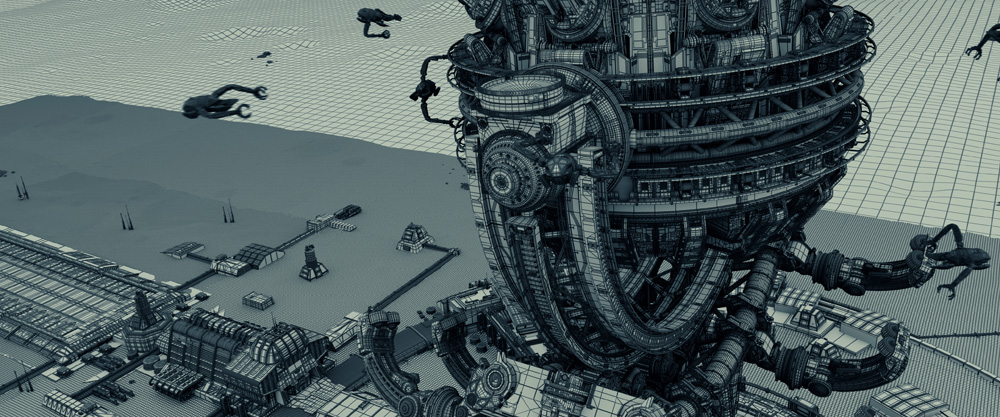

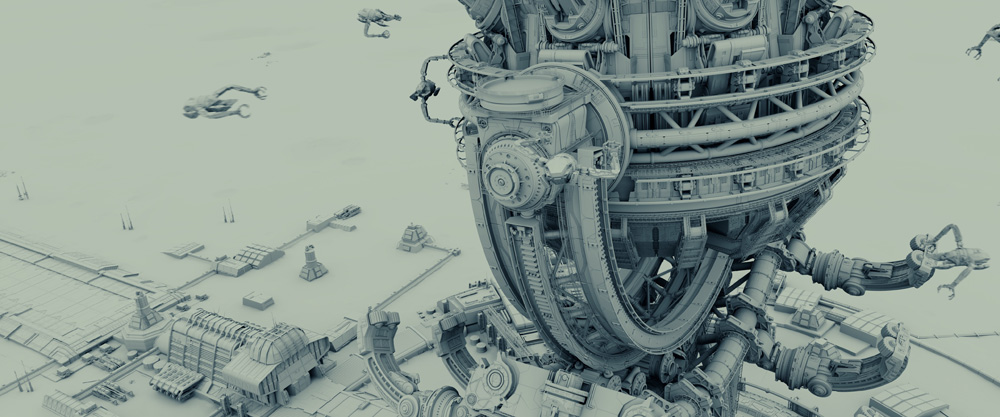

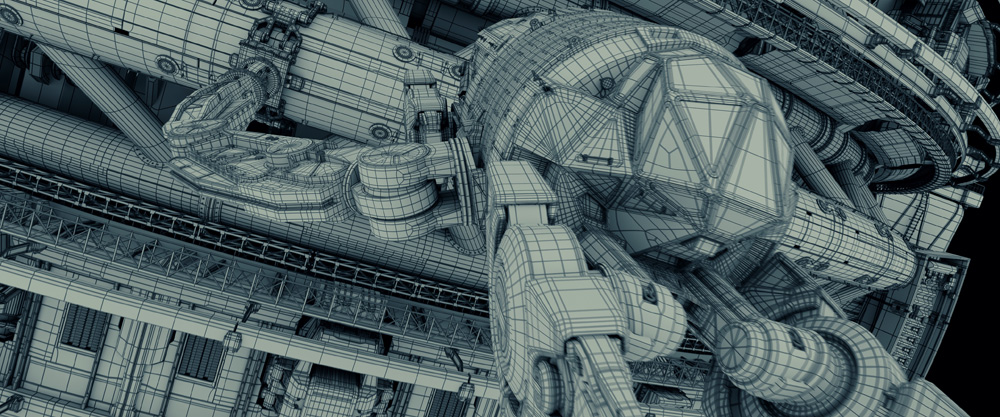

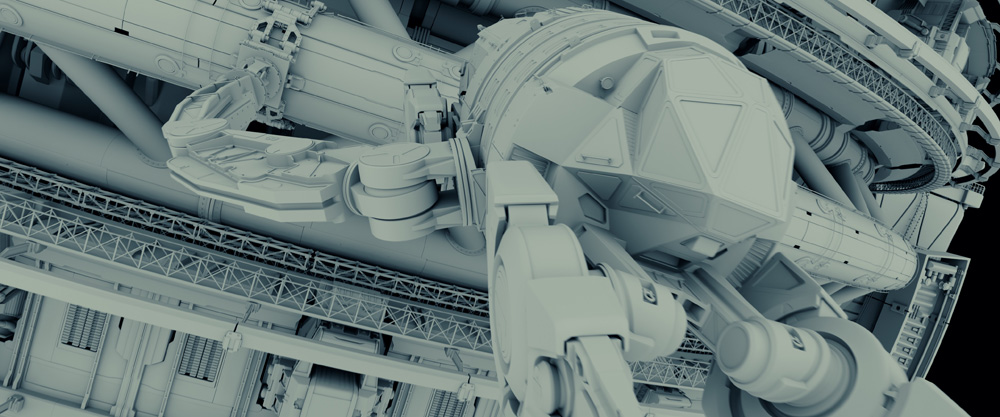

The film’s production team supplied concepts of the cannon on the Moon Base, and MPC built the 300m tower plus hundreds of other assets for the surface, including the smaller buildings, CG lunar vehicles such as the moon tug and moon buggies, fighter jets, digital doubles of the two main characters, and the moon cannon. Fine details such as lunar dust particles, flashing landing lights, tire tracks and footprints were added to the powdery surface.

The most noticeable feature of all these assets is their extreme detail. Small working parts, fixtures and controls are everywhere, and the hard light of space makes all of these items pop and look beautiful. Chris said, “The concept art was a great starting place, but Volker and Roland were both very enthusiastic about expanding upon the concepts within the constraints of the overall design, so we had a good amount of room to selectively add details to make the assets shine.”

Albuquerque

Visual effects supervisor Sue Rowe was on set for much of the main shoot in Albuquerque, mainly making sure that the blue screens and tracking markers would give adequate coverage for the scope of MPC’s work. Meanwhile, the client-side team gathered set and texture reference images and LIDAR scans, and sent them to MPC Vancouver for building the digital assets.

The large number of vendors working on the film at the same time meant the teams had to share assets. “In many ways we were fortunate in that our scope of work was fairly self-contained as a group of shots in one location,” Chris remarked. “Even so, we were in continuous contact with the vendors we were sharing work with. For example, we partnered with Scanline, sharing their Mother ship and our moon tug. There came a time when our camera angles meant we needed to selectively increase the model and texture resolution in areas where Scanline hadn’t needed coverage.”

As the characters investigate an alien crash site, the gigantic Mother ship appears, ploughing into the Moon’s surface and creating a meteor storm of debris and lunar soil that heads directly at them. Live action plates were shot in a studio against blue screen, with partial set builds for the lunar surface. The actors were on wires to simulate the Moon’s lower gravity. As the destruction starts, the actors are replaced with full CG digital doubles of the characters in their space suits, created from detailed scans and photographs of the actors. On some of the closer shots, the camera was approximately 6 to 8 feet away from the doubles.

Lunar Physics

“By using some of the public NASA and Hubble data, we were able to accurately model the physics on the Moon, and we could also work out a rough speed for the Mother ship based on some of our wider shots,” said Chris. “Doing it this way gave us fairly physically accurate simulation results to base the shots on, but often we found they were actually too static. In those cases Volker and Roland would give us the creative license to punch up shots and add wow factor.

“The simulations were done in a variety of software: Flowline, Houdini and our proprietary Kali destruction system were all heavily used. The meteor storm shots were slightly different, done with a blend of keyframed animation and instanced geometry, which was run through a simulation. This allowed the storm to look massive while also giving us the flexibility to include some hero animation we could art direct.”
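As a rough illustration of the kind of lunar physics Chris describes (and not MPC's actual setup, which the article does not detail), the sketch below computes how far and how long debris flies on the Moon versus Earth, assuming flat ground and no air drag, since the Moon has no atmosphere. It also shows the simple distance-over-time estimate one might use to get a speed from a wide shot. The launch speed, angle, distance and frame count are hypothetical values; only the surface gravity figures (about 1.62 m/s² on the Moon, 9.81 m/s² on Earth) are standard references.

```python
import math
from typing import Tuple

# Standard surface gravity values in m/s^2.
G_MOON = 1.62
G_EARTH = 9.81


def ballistic_flight(speed_mps: float, angle_deg: float, g: float) -> Tuple[float, float]:
    """Return (time of flight in s, horizontal range in m) for a particle
    launched from flat ground with no air resistance (a fair assumption on
    the airless Moon)."""
    theta = math.radians(angle_deg)
    vx = speed_mps * math.cos(theta)  # horizontal velocity stays constant
    vy = speed_mps * math.sin(theta)  # vertical velocity is reversed by gravity
    t_flight = 2.0 * vy / g           # time to rise and fall back to launch height
    return t_flight, vx * t_flight


def estimate_speed(distance_m: float, frames: int, fps: float = 24.0) -> float:
    """Rough speed from a distance covered over a number of frames
    (hypothetical values measured from a wide shot)."""
    return distance_m / (frames / fps)


if __name__ == "__main__":
    for name, g in (("Moon", G_MOON), ("Earth", G_EARTH)):
        t, r = ballistic_flight(20.0, 45.0, g)
        print(f"{name}: {t:.1f} s aloft, {r:.0f} m range")
    print(f"Estimated speed: {estimate_speed(5000.0, 48):.0f} m/s")
```

With these example numbers, debris launched at 20 m/s and 45 degrees stays aloft roughly six times longer on the Moon (about 17.5 s versus 2.9 s) and lands about 247 m away instead of 41 m. Purely physical results like this can look floaty or slow on screen, which fits Chris's remark that the accurate simulations sometimes needed punching up.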

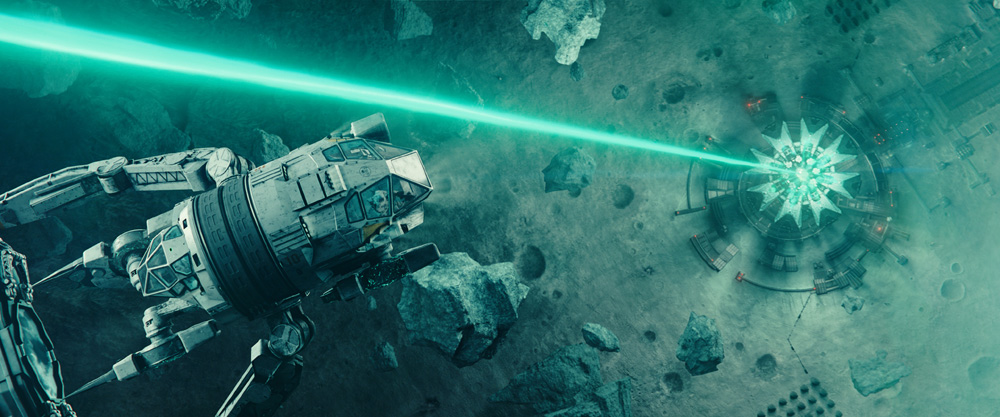

Ultimately the moon cannon proves no defence against the huge alien cannon, and the Mother ship’s beam demolishes the Moon base with its green laser, one of the most recognisable looks from the first movie. MPC’s FX team used RealFlow and Houdini to recreate the famous green beam. Chris said, “An important part of the briefs for our sequences was making sure we didn't stray too far from the look and feel of the effects in the original. We were given a bit of leeway to update some particular FX, but the beams were a signature look we didn't make many changes to.” www.moving-picture.com/film

Ncam Camera Tracking

Ncam tracking systems were used across all stages of making ‘Independence Day: Resurgence’, from pre-production through post. During camera preparation, all camera unit lenses were calibrated ahead of time. Ncam was used for real-time compositing throughout the shoot. Afterwards, all camera tracking data and metadata gathered during the shoot, along with the temporary composites and raw outputs, were taken into post to help refine and finalise the end results.

Digital Media World spoke to Kingsley Cook from Ncam about the project. Kingsley works in business development at Ncam. Having worked as a VFX coordinator on a number of major feature films over the past nine or ten years, he is accustomed to looking at how VFX creation fits into the wider film production process.

“Having already adopted the Ncam system for ‘White House Down’, Roland Emmerich knew the difference virtual production can make to shooting a VFX-heavy feature,” he said. “By visualising the intended result through the eyepiece of his cameras in real time, he could frame complex shots more accurately and sign off on them before post production. Because this cuts out lengthy shot iterations, it improves the post workflow overall.”

Across all of the major sequences, Ncam was used on about 90 per cent of the movie’s 1,750 VFX shots. To cover this, three systems were supplied and, most of the time, two of them were working simultaneously on the main unit’s A and B cameras.

Small Footprint

Mounted on the camera, Ncam sensors survey the set and locate its features in 3D space, along with the camera itself. The system can therefore follow the camera’s moves and, within the viewfinder, accurately lock digital elements into position for the DP or director to watch as the shoot proceeds. Elements may include animated characters, props and set extensions. Having those elements in view as a temporary composite makes it easier to compose shots and design camera moves. It also alerts VFX supervisors to the data they will need to capture from the shoot for post.
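The article does not describe Ncam's internals, so the sketch below is only a generic illustration of why a tracked camera pose lets a CG element appear in the right place in the viewfinder: once the camera's position, orientation and lens are known, each 3D point of the virtual element can be projected to a pixel with a pinhole camera model. The function names, the OpenCV-style axis convention (x right, y down, z forward) and the example lens and sensor numbers are assumptions, and real systems also model the lens distortion measured during calibration, which this sketch ignores.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]

IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def focal_length_px(focal_mm: float, image_width_px: int, sensor_width_mm: float) -> float:
    """Convert a lens focal length in mm to pixels for a given sensor and image width."""
    return focal_mm * image_width_px / sensor_width_mm


def project_point(point_world: Vec3, rotation: Mat3, translation: Vec3,
                  focal_px: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a world-space point to pixel coordinates with a pinhole model.

    rotation/translation describe the tracked world-to-camera transform,
    so p_cam = R @ p_world + t. Returns None if the point is behind the camera.
    """
    xc, yc, zc = (
        sum(rotation[i][j] * point_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0.0:
        return None  # behind the lens, nothing to draw
    # Perspective divide, then shift by the principal point (image centre).
    return (focal_px * xc / zc + cx, focal_px * yc / zc + cy)


if __name__ == "__main__":
    f = focal_length_px(35.0, 1920, 36.0)  # hypothetical 35mm lens on a 36mm-wide sensor
    # A virtual prop 1 m to the right of and 10 m in front of an untransformed camera.
    print(project_point((1.0, 0.0, 10.0), IDENTITY, (0.0, 0.0, 0.0), f, 960.0, 540.0))
```

In a live setup the rotation and translation would be updated every frame from the tracker, so the projected element stays locked to the set as the operator moves the camera.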

Ncam has global partners that help deploy systems and provide services for customers. On this project, their partner Cineverse in Los Angeles took on the role, typically supplying a two-man team with an operator and an assistant. “Ncam's footprint on set is small and unobtrusive,” Kingsley said. “All the equipment fits in one upright cart that houses the tracking server. The operator mans the cart while the assistant works camera-side, relaying information such as lens and body changes, and what rig will be used for the next setup.”

The Ncam server outputs the composite of live action and VFX pre-visualisation back to the camera's eyepiece and to video assist. The same composite is, in turn, distributed to any monitor on the floor. That way, not only does the cameraman see the outcome of his work, but the entire production has a visual reference and therefore a better understanding of what the director is trying to achieve for each setup.

Ncam On Set

Kingsley said, “VFX sequences in movies like this tend to be complex, lengthy and shot across a variety of different locations, and that made the Ncam system a good choice for the production. It doesn’t rely on particular conditions: it can work anywhere, indoors or outdoors, in daylight, night, fog, rain and so on. It doesn’t cause delays to the schedule because calibration is very quick, and the cinematographer is still able to see the end results wherever the production decides to shoot. That is true whether the shot involves animation or a set extension.

“Virtual production also requires buy-in from all departments. There is a learning curve to the on-set workflow, which everyone from the ADs to the director has to acclimatise to. It does require understanding and patience but, once it is embraced, the advantages of virtual production can be fully realised.”

It was essential for Ncam’s operatives to build a good working relationship with the entire crew. Much time was spent on training, working with all departments and especially with the VFX team creating the CG content that was going to be tracked into place. “Clear communication with the artists was critical, from preparing and conditioning the content before and during the shoot to on-set workflows where the alignment of virtual environments to real-world sets and the triggering of animated characters for eye-line and interaction were central to bringing Roland's vision to life,” said Kingsley. www.ncam-tech.com

Cinesite Strikes Back

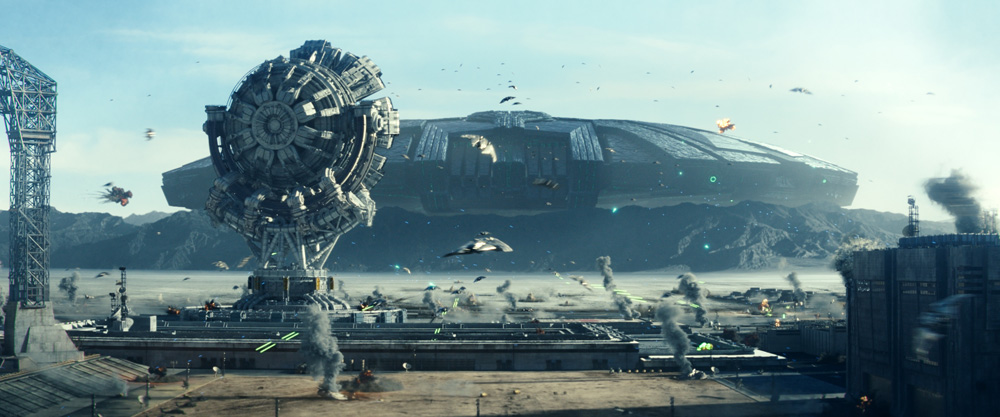

In contrast to MPC’s moon shots, Cinesite’s work was earthbound, mainly devoted to the dog fights between allied and alien fighters, and explosions and destruction across the Area 51 environment. Many of their shots involved the alien Mother ship.

“Discussions on our shots began as far back as January 2015, but we did not start work until July. To get our team up to speed we hired extra animators and divided the work between the London and Montreal facilities,” said Cinesite’s VFX supervisor Holger Voss. “In several instances we needed to create entirely CG shots, and we replaced many blue screens in studio-shot footage of dialogue sequences on the ground.

“We were sharing many of our assets and sequences with other facilities, including Weta, MPC and Uncharted Territory, and in turn brought their assets into our pipeline and often did quite a bit of work on them. Because they were initially created at other studios, they arrived finished to varying degrees. So we set up a specific, identical pipeline at the London and Montreal facilities for receiving and updating assets and bringing them up to the quality needed, and also worked on ways to automate this pipeline.” The modelling was done in Maya, with Mudbox for displacements, and the texturing in Mari and Photoshop.

Improving on Previs

Cinesite’s team found that plenty of previs had been prepared, though it did not necessarily show exactly what the director wanted to see. For example, when they began on the dog fight sequences, they were shown previs, but the director wanted to see more fighters filling the screen. As they worked on this, they would deliver their shots with motion blur for the production to review.

“We worked with the production through cineSync,” Holger said. “We really enjoyed the sessions with Roland Emmerich, who by the time we got involved could give very clear directions about what he liked and wanted to emphasize.”

When they received the Mother ship asset, it was still only a basic design and model, which the team then built up into one of the best-known and most formidable assets of the movie. “Our build had to take into account the ship’s ultimate destruction, which involved separate simulations to break down different sections of it,” he said.

Mother Ship

“The shots we created required additional look development and procedural shading, as well as linear displacement and digital matte painting to enhance the surface detail at the enormous 5km scale. The surface design was very much based on references from the first movie. We tried to match the old city destroyer surface as closely as possible, but give it its own signature. We added more round shapes. Photos of the alien fighter models from the first movie were a very useful reference.”

Working with the Mother ship was also interesting from a storytelling angle. Its role in the movie continued to change until only a few weeks before delivery, demanding more revisions to the animation. “At some point the story shifts, and the alien Queen becomes aware that the ex-President is attempting to come on board with a bomb. Consequently, the sequence needed more fighters, attacking and trying to prevent the human ship from entering the Mother ship. Meanwhile, underneath it, critical story action takes place as the heroes try to break in.”

Area 51 Dynamic Damage

The Area 51 environments were created from a combination of photography and digital matte paintings, stitched together as a cyclorama. Weta Digital supplied the area as a basic layout only, and it was up to each vendor to complete it to work with their part of the story. Cinesite designed the desert scenes largely on their own, while some environments were based on previs that had been prepared for viewing real-time composites on set with Ncam. They used their asset pipeline and created extra digital matte painting work in Photoshop and Nuke.

Holger said, “During the aerial battle sequence, Area 51 is heavily damaged. FX smoke plumes and explosions were laid out to create a nice dynamic throughout each shot. As the battle progresses, so does the damage to the geography. We created an average of four different destruction versions for each building type, which were selected in our layout stage. To increase the variety even further, we used projected DMPs to add more unique destruction and to enhance the detail further.”
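Holger does not say how the destruction versions were assigned in layout, so the following is only a hypothetical sketch of one common way to handle this kind of variant selection: each building gets a variant chosen from a stable hash of its ID, so re-running layout reproduces the same choices, while an artist can override any building explicitly. The function names, building IDs and the default of four variants (taken from Holger's "average of four") are illustrative assumptions, not Cinesite's tools.

```python
import hashlib
from typing import Dict, Iterable, Optional


def pick_variant(building_id: str, num_variants: int = 4,
                 overrides: Optional[Dict[str, int]] = None) -> int:
    """Choose a destruction variant index for one building.

    An explicit artist choice in `overrides` wins; otherwise a stable hash of
    the building ID picks a variant, so repeated layout runs give the same result.
    """
    if overrides and building_id in overrides:
        return overrides[building_id]
    # sha256 is stable across runs, unlike Python's salted built-in hash() for strings.
    digest = hashlib.sha256(building_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_variants


def layout(building_ids: Iterable[str], num_variants: int = 4,
           overrides: Optional[Dict[str, int]] = None) -> Dict[str, int]:
    """Map every building in a shot to its chosen destruction variant."""
    return {bid: pick_variant(bid, num_variants, overrides) for bid in building_ids}


if __name__ == "__main__":
    print(layout(["hangar_07", "tower_02", "bunker_11"], overrides={"tower_02": 3}))
```

A deterministic choice like this keeps the damage consistent from shot to shot as the battle progresses, and the override hook leaves room for the art-directed picks a layout artist would make by hand.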

The very wide aerial shots over Area 51 were especially challenging, because filling them with so many explosions was difficult in the time allowed. He said, “We created a library of custom, original simulations specifically for this film, using Maya and Houdini, but then we had to compose the shots with so many of them, taking up a lot of screen space.” www.cinesite.com