Mapping a New Face with CONNECTED COLORS

In early 2016, visual design studio WOW in Tokyo produced ‘Connected Colors’, a project combining animated 3D graphics, projection mapping and live performance. The video was made for a global Intel promotion titled ‘Experience Amazing’. Intel invited innovators from around the world to create artworks for the campaign and selected artist Nobumichi Asai to create a face mapping project.

Asai served as creative director and technical director, while WOW acted as the production company for the live action and CG animations. “Face mapping is a new technique of projecting moving images onto a living face, incorporating the natural expressions and expressiveness of the face into the final result, similar to the art of makeup, which has millennia of history,” Asai said.

“Each project in Intel’s promotion was to have a different theme. The theme of ‘Connected Colors’ was inspired by the connected world and Intel’s concept of the ‘Internet of Things’, or IoT. The connected world has two sides: a positive side that brings efficiency, optimization, harmony and symbiosis to people’s lives, and another side that calls for operational rules and administration. I wanted the message of this project to question the use of technology, and decided to create an artwork expressing the positive side of connections.

The Precision Connection

“When we focus technology on life, we see that animals, plants and insects are all based on the same structures – on DNA – and all have different colours. I used the motif of the colours that all forms of life have in nature, including humans, and connected those colours.”

Before the Intel project, Asai had worked with projection mapping and virtual reality on his own. His first venture into face mapping was a private project titled ‘OMOTE’, and he later created face mapping work for Japanese TV programmes and music videos.

What set this project apart was the precision it demanded from the tracking and projection. Any lack of precision would show up as latency, misalignment or inaccurate colour reproduction, making the audience feel disconnected from the performance. Asai, WOW’s CG artist Shingo Abe and Abe’s team therefore made substantial improvements to the latency, alignment and colour systems in their production and projection set-ups, achieving much better results than in Asai’s previous projects.

As Intel explains it, “With Intel Core i7 processors inside his computers and light projector, Asai turns the human face into a revolutionary canvas. The 6th generation Intel Core i7 processors deliver a new class of computing and is pushing the boundaries with overclocking for the most demanding special effects needs.”

Asai and Abe selected their hardware carefully. Asai said, “For tracking we used five OptiTrack motion capture cameras, which capture at 240fps, and Intel PCs. For projection we used a Panasonic projector, aiming for the lowest latency, highest resolution and widest dynamic range we could achieve.”
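The emphasis on latency can be made concrete with a little arithmetic: while the tracking, rendering and projector pipeline catches up, the face keeps moving, so the projected image trails behind it. The Python sketch below is a back-of-the-envelope illustration, not the team’s measurement. Only the 240fps capture rate comes from Asai; the head speed and the total pipeline delay are hypothetical values.

```python
def capture_interval_ms(fps: float) -> float:
    """Time between successive tracking samples, in milliseconds."""
    return 1000.0 / fps

def misalignment_mm(head_speed_mm_per_s: float, latency_ms: float) -> float:
    """Distance a point on the face travels before the projection catches up."""
    return head_speed_mm_per_s * latency_ms / 1000.0

# 240fps capture rate, as quoted in the article.
sample_ms = capture_interval_ms(240)

# Hypothetical figures for illustration only (not from the production).
head_speed = 200.0   # mm/s, an assumed slow head turn
pipeline_ms = 30.0   # assumed total tracking + render + projector delay

print(f"tracking interval: {sample_ms:.2f} ms")
print(f"offset at {pipeline_ms} ms latency: {misalignment_mm(head_speed, pipeline_ms):.1f} mm")
```

In this simple model the offset grows linearly with delay, so halving the pipeline latency halves the misalignment.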

Cinema 4D/After Effects Workflow

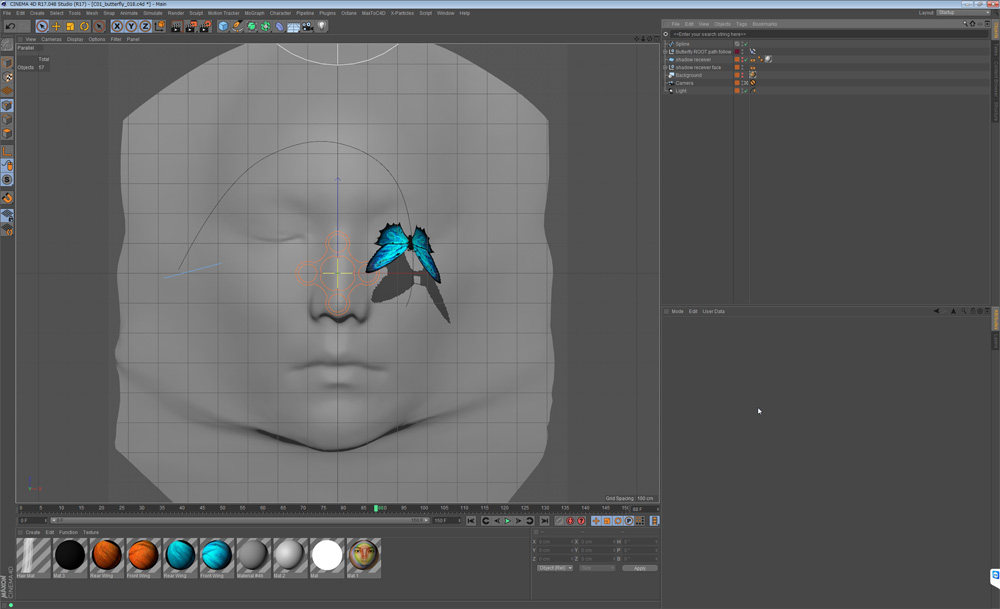

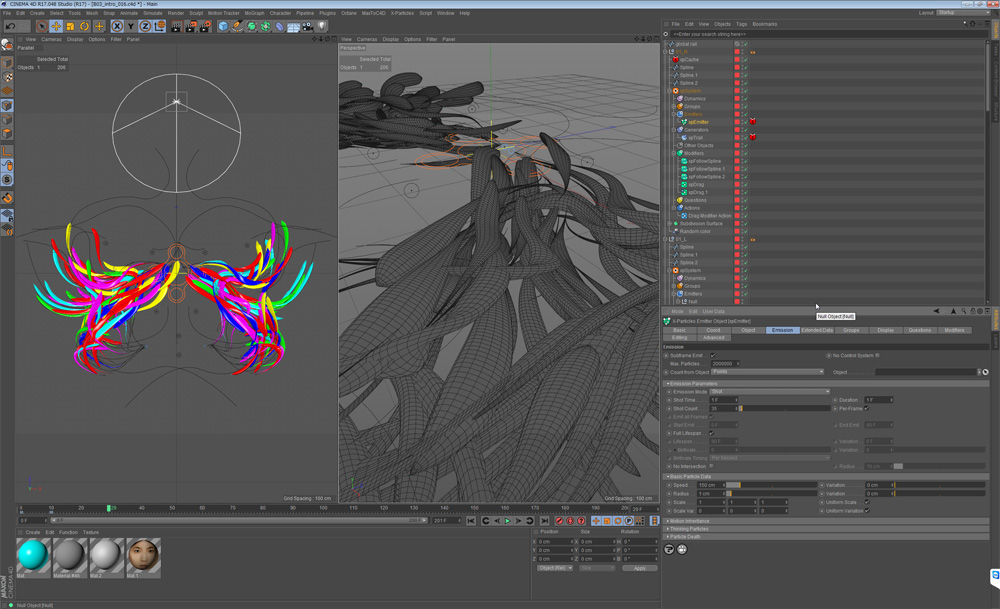

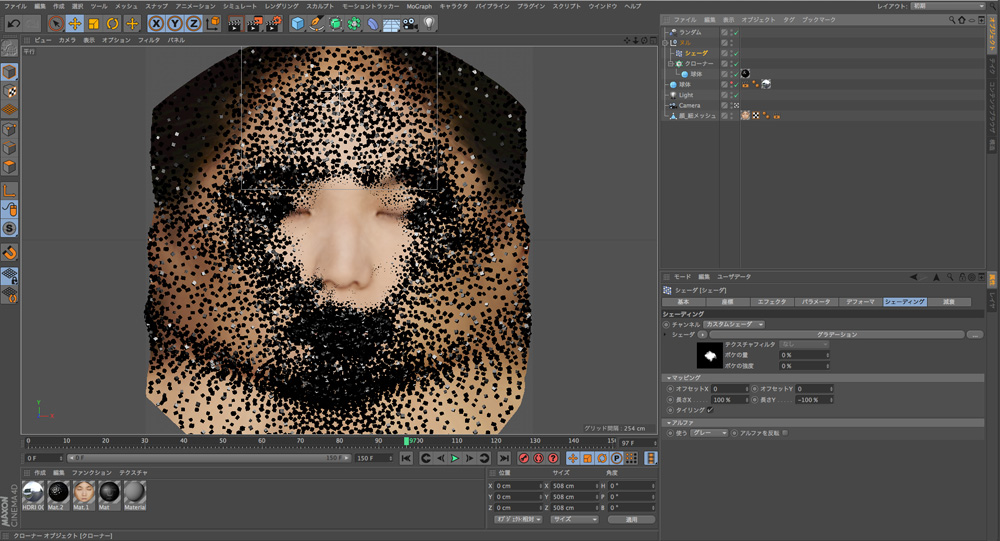

Abe said, “For the animations and elements of the animated textures I used Cinema 4D, compositing in After Effects, which is a very fast and natural workflow for motion graphics. I could produce many iterations very quickly, giving me time to get closer to the result and quality I was looking for. MoGraph also has great tools for this kind of project. I use After Effects and Cinema 4D for most of my work, combined with Photoshop and Illustrator.”

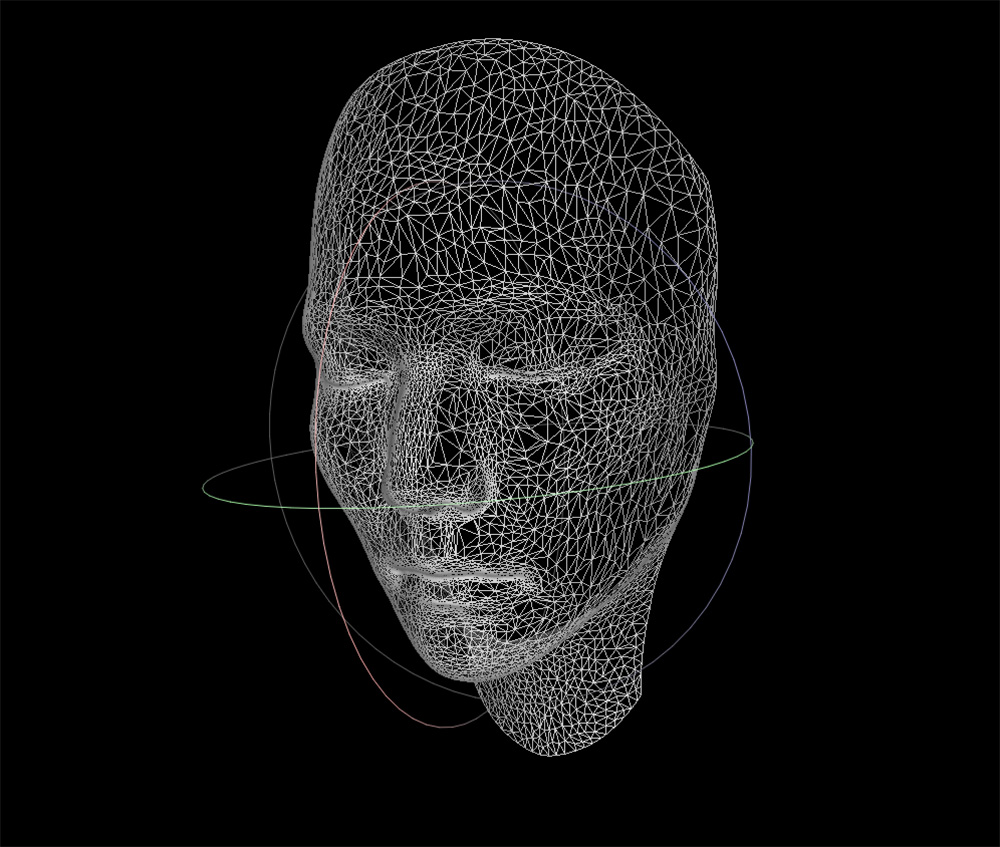

First, the artists took a 3D scan of the performer’s face, unwrapped its UVW coordinates, captured the texture of the face, and then imported the geometry and the texture into Cinema 4D. After building a 3D model of the face, they created the animations to fit the model’s UV layout and applied each animation as a texture. These textured 3D models then became the projection source, or generator.
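Because each animation was authored in the face model’s UV space, every point on the mesh takes its colour by looking up its UV coordinate in the current animation frame. The Python sketch below shows that lookup with bilinear filtering on a tiny grayscale texture. It illustrates the general texture-mapping idea only and is not code from Cinema 4D or the WOW pipeline; the texture layout (rows of floats, with v = 0 at the top row) is an assumption.

```python
from typing import List

Texture = List[List[float]]  # texture[row][col], grayscale values

def sample_bilinear(tex: Texture, u: float, v: float) -> float:
    """Sample a texture at UV coordinates in [0, 1] using bilinear filtering.

    Assumes u runs left to right across columns and v runs top to bottom
    across rows; real packages may flip v.
    """
    h, w = len(tex), len(tex[0])
    # Map UV to continuous pixel coordinates, clamped to the texture edges.
    x = min(max(u, 0.0), 1.0) * (w - 1)
    y = min(max(v, 0.0), 1.0) * (h - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    # Blend horizontally along the two rows, then vertically between them.
    top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
    bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

# A 2x2 animation frame: black on the left, white on the right.
frame = [[0.0, 1.0],
         [0.0, 1.0]]
print(sample_bilinear(frame, 0.5, 0.5))  # 0.5, halfway between black and white
```

Swapping in the next animation frame while keeping the same UVs is what lets a single unwrapped model carry a whole animated sequence.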

At performance time, the position and rotation of the performer’s face were calculated from the tracking data in real time, and the position and rotation of the 3D model were synchronized with them. As a result, an accurate perspective view of the 3D model’s animated textures was projected onto the real face.
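The article says only that tracked position and rotation drive the 3D model, which is then projected in perspective. As a hedged illustration of that step, the Python sketch below poses model points with a tracked rotation and translation and projects them through an ideal pinhole projector. The yaw-only rotation, the pinhole model without lens distortion, and the focal length and principal point values are all simplifying assumptions; a real set-up would also need the projector calibrated against the tracking cameras.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def rotate_y(p: Vec3, yaw_rad: float) -> Vec3:
    """Rotate a point about the vertical (y) axis by yaw_rad radians."""
    x, y, z = p
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (c * x + s * z, y, -s * x + c * z)

def project(points: List[Vec3], yaw_rad: float, translation: Vec3,
            focal_px: float, cx: float, cy: float) -> List[Tuple[float, float]]:
    """Pose the face model with tracked data, then project into projector pixels.

    Assumes an ideal pinhole projector at the origin looking down +z,
    with no lens distortion.
    """
    tx, ty, tz = translation
    pixels = []
    for p in points:
        x, y, z = rotate_y(p, yaw_rad)          # apply tracked rotation
        x, y, z = x + tx, y + ty, z + tz        # apply tracked position
        pixels.append((cx + focal_px * x / z,   # perspective divide
                       cy + focal_px * y / z))
    return pixels

# A point 0.1 m right of the model origin, face 2 m from the projector.
print(project([(0.1, 0.0, 0.0)], 0.0, (0.0, 0.0, 2.0), 1000.0, 960.0, 540.0))
# [(1010.0, 540.0)]
```

Re-running this for every tracking sample is what keeps the projected texture locked to the face as it turns and moves.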

Abe said, “Cinema 4D’s speed was important because this was the first face mapping project we had done at WOW. The whole project had to be completed in six months, but because it took us some time to get our ideas and approaches into shape, we only had one month to spend on production work. The speed of Cinema 4D really helped us.

Harmony

“One of the first and most important aspects of face mapping we discovered was how sensitive viewers are to even slight changes to the face that are incongruous or out of harmony with the performance. Compared to small alterations made to landscapes or product shots, for instance, audience reactions to faces are much stronger. So we had to do a lot of testing and work rapidly through many trials.”

Cinema 4D’s MoGraph tools were especially useful in three of the animations: the scanning sequence at the start of the video (at about 0:12), the transition from the model’s skin to a black-and-yellow lizard’s skin (at about 0:50), and, a few seconds later, a black-and-red frog’s skin that forms and slides off the face (at about 1:03).

In the scanning sequence, MoGraph’s Inheritance Effector was used to transition a composited wireframe across the face as a 3D effect: the wireframe moves over the face and then spreads out from the nose. Rendering this with the Cel Renderer post effect created a complex pattern.
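The outward spread from the nose behaves like a spherical falloff whose radius grows over time. As an illustration only, and not a description of how the Inheritance Effector works internally, the Python sketch below computes a 0-to-1 weight for any point on the face from its distance to a hypothetical nose position, the elapsed time, an assumed spread speed, and a softness band smoothed with the standard smoothstep curve.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def reveal_weight(point: Vec3, origin: Vec3, t: float,
                  speed: float, softness: float) -> float:
    """Return 0..1: how far a point has been reached by a wave spreading from origin.

    The wave front sits at radius speed * t. Points more than `softness`
    inside the front get 1, points outside it get 0, and points in between
    ramp smoothly (smoothstep).
    """
    d = math.dist(point, origin)
    front = speed * t
    x = (front - d) / softness
    x = min(max(x, 0.0), 1.0)
    return x * x * (3 - 2 * x)

# Hypothetical values: nose at the origin, front spreading at 0.1 m/s.
nose = (0.0, 0.0, 0.0)
print(reveal_weight((0.05, 0.0, 0.0), nose, 1.0, 0.1, 0.02))  # 1.0, already reached
```

A weight like this could drive the wireframe’s visibility or blend amount per point, so the effect appears to grow outward across the face.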

For the lizard’s skin, the Shader Effector drove a gradual change across the texture of the face’s surface. For the frog skin, the Random Effector produced the randomized, fractured black-and-red pieces, and the slide-off effect was created with Dynamics, whose parameters can be set through the Effectors.
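The article names Cinema 4D’s Shader and Random Effectors without describing their settings, so the Python sketch below only imitates the two ideas outside Cinema 4D: a gradient that sweeps across the face to blend human skin into lizard skin over time, and seeded random offsets for the frog-skin fragments. The function names, the sweep formula and the parameter values are hypothetical.

```python
import random
from typing import List, Tuple

def gradient_blend(v: float, t: float, width: float) -> float:
    """Blend weight (0 = human skin, 1 = lizard skin) for a point at height v in [0, 1].

    A linear gradient sweeps across the face as t goes from 0 to 1:
    at t = 0 every point is skin, at t = 1 every point is lizard.
    `width` sets how soft the transition band is.
    """
    w = (t * (1 + width) - v) / width
    return min(max(w, 0.0), 1.0)

def random_offsets(n_pieces: int, seed: int,
                   max_offset: float) -> List[Tuple[float, float]]:
    """Seeded random (x, y) offsets, one per fragment, identical on every render."""
    rng = random.Random(seed)
    return [(rng.uniform(-max_offset, max_offset),
             rng.uniform(-max_offset, max_offset)) for _ in range(n_pieces)]

# Halfway through the sweep, a point in the middle of the face is half blended.
print(round(gradient_blend(0.5, 0.5, 0.2), 3))
print(random_offsets(3, seed=42, max_offset=0.01))
```

The fixed seed matters for a storyboarded piece: the “random” fracture pattern comes out the same every time the animation is rendered.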

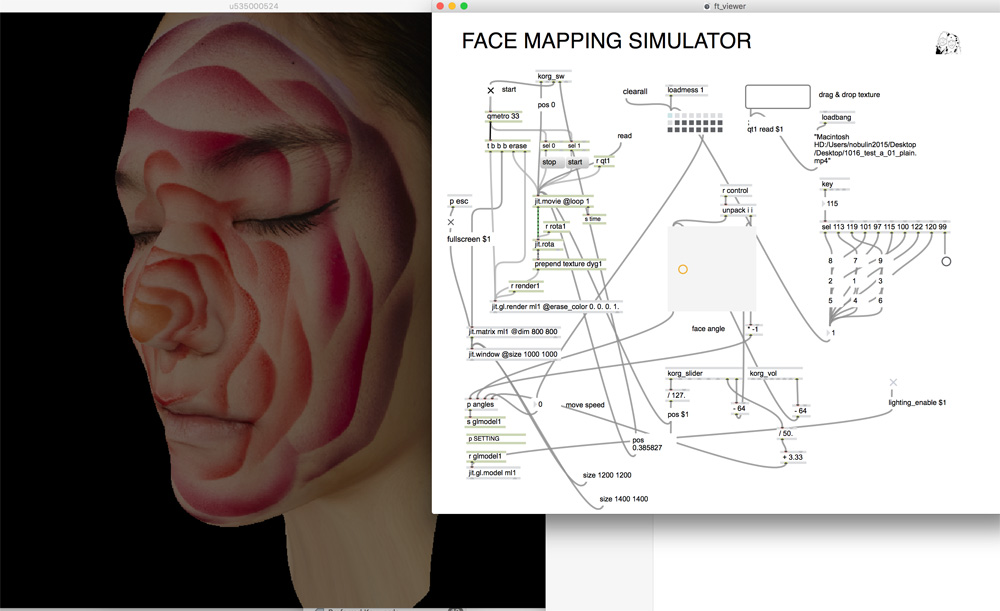

Original Software

Once all of these animations, looks and effects were completed as WOW wanted them, precise projection data was generated using custom software the team developed for this purpose. Interestingly, although the production gives an impression of interactivity and spontaneity, the projected movie itself is fixed. Only the projection, driven by the motion capture, was interactive. This made it possible to storyboard the piece deliberately.
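The split between a fixed movie and a live projection can be sketched as two independent inputs to each projected frame: the playback clock decides which frame of the pre-rendered animation to show, and the latest tracked pose decides where to show it. The team’s own software is not described, so `Pose`, `frame_for_time` and `render_step` are hypothetical names, and the frame rate in the example is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass
class Pose:
    position: Tuple[float, float, float]  # tracked head position
    yaw: float                            # tracked head rotation, radians

def frame_for_time(t_seconds: float, fps: float, n_frames: int) -> int:
    """Index of the pre-rendered animation frame to show at playback time t.

    The animation is fixed, so this depends only on the clock, never on the performer.
    """
    return min(int(t_seconds * fps), n_frames - 1)

def render_step(t_seconds: float, fps: float, n_frames: int,
                latest_pose: Callable[[], Pose]) -> Tuple[int, Pose]:
    """One step of a hypothetical projection loop: scripted content, live placement."""
    frame = frame_for_time(t_seconds, fps, n_frames)  # what to show (storyboarded)
    pose = latest_pose()                              # where to show it (tracked)
    return frame, pose

# Two seconds into a 30fps animation, with a stand-in tracker.
frame, pose = render_step(2.0, 30.0, 300, lambda: Pose((0.0, 0.0, 2.0), 0.05))
print(frame, pose)
```

Keeping the two inputs separate is what allows every expression to be designed in advance while the image still follows the performer.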

At times, the model appears to blink and open her eyes to reveal an animal’s eyes, or to suddenly smile. In those cases, Abe explained, she did not actually blink in real time; viewers are watching only a projected animation. “We did a lot of research and created numerous simulations for eye expressions, adjusting them over and over,” he said. “People can immediately sense even the smallest incongruity around the eyes. In daily life, they watch other people’s eyes and, from there, read slight changes in feelings. To test those projections, we used a mockup of the scanned face model, Cinema 4D and other software until we could finally create very natural blinks and smiles.”

Asai commented, “Modifying details of the face is very sensitive work. It is fairly easy to express humour or fear, or to achieve grotesque results. Conversely, beautiful expressions on faces are difficult.”

www.maxon.net

www.w0w.co.jp

www.nobumichiasai.com