AMD integrates a dedicated AI engine into its Ryzen processors, producing an NPU architecture suited to natural language processing, real-time translation, video upscaling and generative content creation.

Reasons and opportunities to bring artificial intelligence into our working and personal lives are mounting, creating some pressure to replace your current laptop with an AI-powered PC. It is worth understanding which components make up an AI PC, and how they work together to accelerate AI-related tasks.

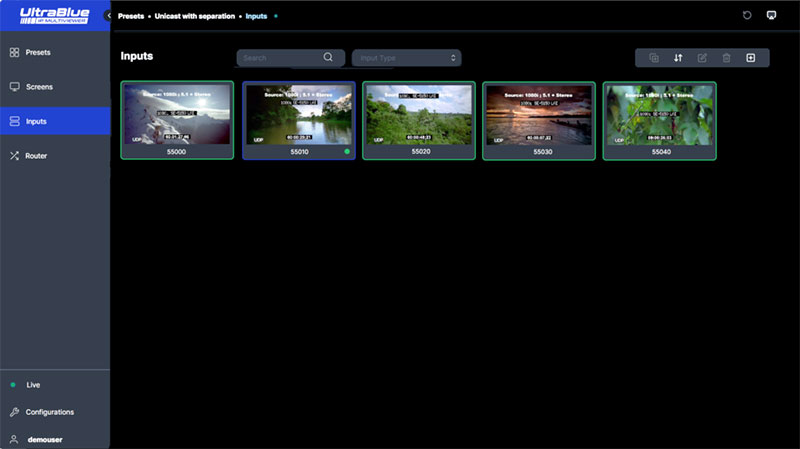

We recently took the opportunity to look into AMD’s development of its new Ryzen AI processors, and how they function within a PC or workstation. AMD has integrated a dedicated AI engine into its Ryzen processors, using AI-specific hardware blocks for tasks such as inferencing and real-time AI computation. The resulting NPU (neural processing unit) architecture, AMD Ryzen AI, is designed to offload AI workloads from the CPU and GPU, improving overall system performance and efficiency.

With this specialisation, Ryzen AI-powered computers generally need less power to handle AI tasks than those relying on conventional CPU or GPU processing. They can support applications such as natural language processing, real-time translation, selective video upscaling and AI-driven or generative content creation. They can also adapt dynamically to the user’s workload, optimising AI processing in real time.

Processor Optimisation

AMD Ryzen AI PRO 300 Series processors were released in October 2024. Built on AMD’s Zen 5 architecture, they deliver very high CPU performance and are tuned for enterprise PCs running Microsoft Copilot+. By adding the XDNA 2 architecture to power the integrated NPU, the Ryzen AI PRO 300 Series processors achieve more than 50 NPU TOPS (trillions of operations per second) of AI processing power, exceeding the 40 TOPS that Microsoft requires for Copilot+ PCs and delivering competitive AI compute and functionality. Built on a 4nm process with custom power management, the new processors also help to extend battery life.

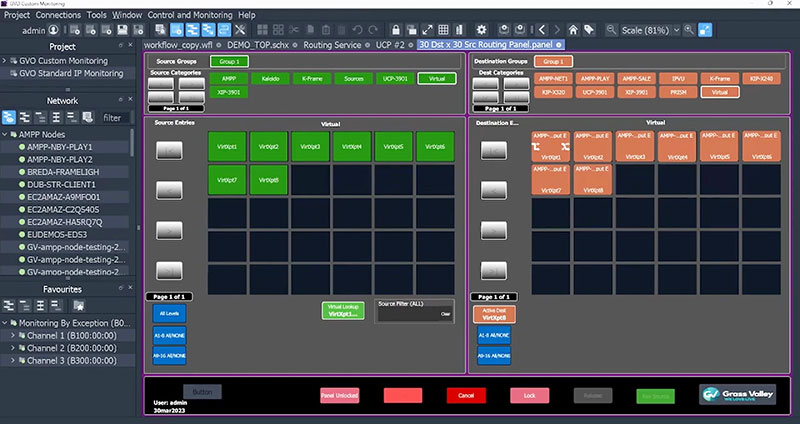

OEMs including Lenovo, HP, Asus, Dell and others have so far delivered Ryzen AI PCs, engineered for tasks such as generative AI, 3D modelling and animation, and machine learning development. When designing its own AI workstations, for example, HP includes ISV certifications and professional-grade components, tested for performance, that can handle massive datasets and complex models.

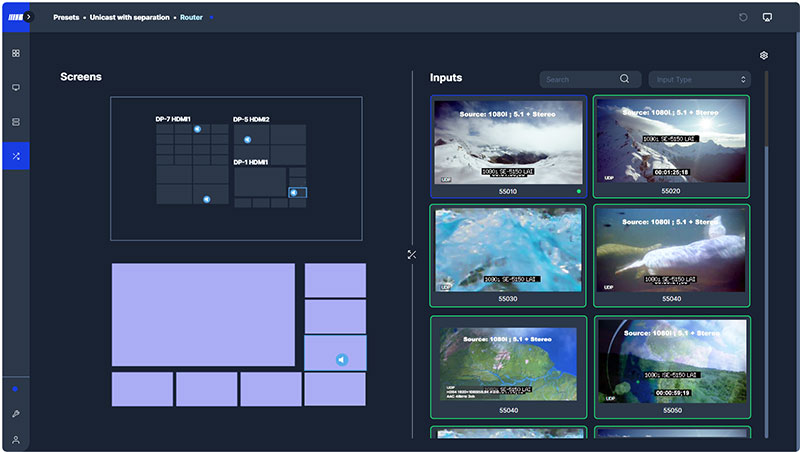

In these systems, the GPUs are optimised for parallel processing, making them suitable for graphics rendering, large-scale model operations and training large language models (LLMs). The CPU looks after smaller-scale model operations, sequential tasks and overall system management. As described above, the NPU, or AI engine, is designed specifically for neural network tasks and is optimised for efficient inference.
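The division of labour described above can be pictured as a simple routing table. The Python sketch below is purely illustrative: the workload categories, the `route` function and the CPU fallback are hypothetical, and real applications choose a device through their own runtime or framework rather than through a fixed table like this.

```python
from enum import Enum


class Unit(Enum):
    CPU = "CPU"
    GPU = "GPU"
    NPU = "NPU"


# Hypothetical workload categories paraphrasing the CPU/GPU/NPU split described
# in the article; this is not how AMD or any OS actually schedules work.
ROUTING = {
    "graphics_rendering": Unit.GPU,
    "large_model_training": Unit.GPU,
    "small_model_ops": Unit.CPU,
    "sequential_task": Unit.CPU,
    "system_management": Unit.CPU,
    "neural_inference": Unit.NPU,
}


def route(workload: str) -> Unit:
    """Return the processor this toy policy assigns; unknown work falls back to the CPU."""
    return ROUTING.get(workload, Unit.CPU)


if __name__ == "__main__":
    for w in ("neural_inference", "graphics_rendering", "spreadsheet_macro"):
        print(w, "->", route(w).value)
```

Falling back to the CPU reflects its role as the general-purpose processor that manages everything the specialised units do not.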

On-Device AI Acceleration and Processing

Because NPUs are purpose-built to accelerate AI inference efficiently, and many AI-accelerated content creation tools can also run on the GPU, the CPU and the user remain free to work on the non-AI aspects of the workflow. AMD believes that running AI applications locally on workstations, rather than relaying data from the end user’s PC to a remote or cloud-based third-party service, has certain advantages.

First, it keeps sensitive IP and content under users’ control, reducing the risk of unauthorised access. Local processing also reduces reliance on cloud services and data transfer fees, and can improve performance because data is processed in real time without a round trip to a remote server.

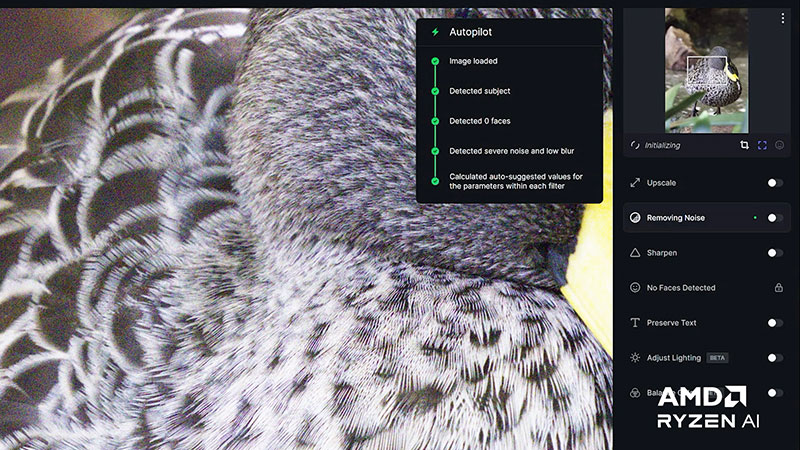

One example is AMD’s partnership with Topaz Labs, which develops software that content creators use to enhance photos and videos with AI. It can de-noise, sharpen, correct white balance and adjust exposure in photographs, and upscale, de-interlace, create slow-motion footage and stabilise video. Because the software runs on users’ own standard hardware, performance matters a great deal to them.

The partnership means that, on Ryzen AI systems, Topaz users can run AI workloads locally and at speed, without relying on a cloud service or sending data out, gaining efficiency and speed. It also means Topaz can support newer-generation hardware.

TOPS – Doing Everything Faster

Even an entry-level NPU TOPS rating is enough for efficient real-time processing. AI PCs generally offer around 40 NPU TOPS, the baseline Microsoft sets for Copilot+ PCs, to handle productivity tasks: running local generative AI, exploring design concepts, data analysis, initial model construction and running AI inference models.
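TOPS figures are theoretical peaks. A common way to estimate one is to multiply the number of multiply-accumulate (MAC) units by the clock speed and count each MAC as two operations (a multiply and an add). The sketch below assumes that convention; the MAC count and clock speed are hypothetical, not AMD specifications, and real throughput also depends on data precision and how fully the hardware is kept busy.

```python
# Microsoft's NPU requirement for Copilot+ PCs.
COPILOT_PLUS_BASELINE_TOPS = 40


def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak TOPS, assuming every MAC unit finishes one
    multiply-accumulate per clock cycle, counted as two operations."""
    return mac_units * ops_per_mac * clock_hz / 1e12


if __name__ == "__main__":
    # Hypothetical NPU: 10,000 MAC units at 2.5 GHz (illustrative, not a real spec).
    tops = peak_tops(mac_units=10_000, clock_hz=2.5e9)
    meets = tops >= COPILOT_PLUS_BASELINE_TOPS
    print(f"{tops:.1f} TOPS, meets Copilot+ baseline: {meets}")
```

With these made-up figures the estimate comes to 50 TOPS, which would clear the 40 TOPS Copilot+ baseline.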

However, HP notes that the combined parallel processing power of the NPUs and high-TOPS discrete GPUs in Z by HP workstations ranges from 120 to 5,828 TOPS, making it possible to significantly accelerate training, larger model development and work on complex AI challenges. The higher-specification ZBooks and HP desktops can take on more demanding local generative AI, large AI inference models, complex data analysis and visualisation, large-scale model development and training, and LLM development.

Meanwhile, memory temporarily stores data for quick access during computation by the CPU, GPU and NPU. More memory lets larger models and datasets stay close to the processors, making computation, AI or otherwise, faster and more efficient, while expanding storage lets users scale as their projects grow.
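To see why memory capacity matters for local AI, a rough rule is that a model’s weights occupy its parameter count multiplied by the bytes per weight. The sketch below applies that estimate to hypothetical model sizes and a hypothetical 32 GB machine; it ignores activations, caches and the memory already used by the operating system and other applications, so real requirements are higher.

```python
def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Ignores activations, caches and runtime overhead, so it is a lower bound.
    """
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    ram_gb = 32  # hypothetical machine memory size
    for params in (7e9, 13e9, 70e9):  # hypothetical model sizes
        for bits in (16, 4):  # 16-bit weights vs 4-bit quantised weights
            gb = weight_footprint_gb(params, bits)
            status = "under" if gb < ram_gb else "over"
            print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gb:.1f} GB ({status} {ram_gb} GB)")
```

The comparison only covers the weights, so a model that lands just under the memory size may still not run comfortably in practice.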

One of HP’s new AI PCs is the OmniBook Ultra 14-inch laptop, which uses an AMD Ryzen AI 300 Series processor. As a professional model, it offers up to 5.0 GHz maximum boost clock speed, 24 MB L3 cache, 10 cores and 20 threads, AMD Radeon 880M Graphics, and two Thunderbolt 4 ports with USB Type-C and Type-A ports. Thanks to Ryzen AI, it can also handle AI processing at up to 55 TOPS, supported by 32 GB of LPDDR5X (low-power double data rate 5X) memory and 1 TB of SSD storage.

For everyday use, it includes HP AI Companion, plus Poly Camera Pro and Poly Studio for customising the camera and speakers in collaboration applications. Its AI-enabled camera also supports safety features such as Lock on Leave, Wake on Approach, Adaptive Dimming, and Screen Time and Screen Distance reminders.

www.amd.com