Moments Lab’s new API lets engineers natively integrate AI video understanding into any software to generate humanlike descriptions of content.

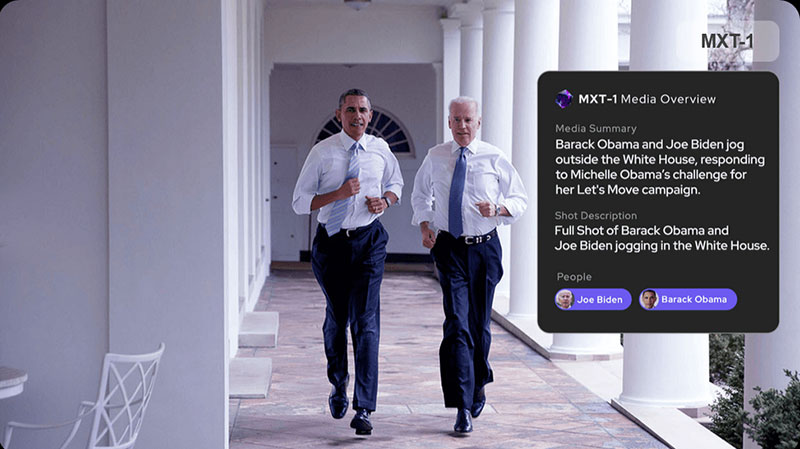

Moments Lab, a company specialising in AI for video understanding and search, is releasing its public API, allowing organisations to enrich their media assets with metadata that can be integrated into many types of software. Moments Lab’s AI indexing system, MXT, generates time-coded, searchable metadata for media files, capturing what’s happening in every moment of a video and describing it in human terms. It can identify people, logos, shot types, landmarks and speech, and pick out the most impactful quotes in a transcript.

The new API helps organisations make their content more discoverable and gain statistical insights, supporting a wide range of use cases including content search, insight generation, curation and recommendations.

“When we first released MXT, many people asked us how they could embed our video understanding technology within their own product, and utilise the data to boost content discoverability or serve other use cases,” said Frederic Petitpont, Moments Lab co-founder and CTO. “Our API is here to enable them to do that, uncovering and enhancing searchability, contextual insights and new ROI opportunities.”

Organisations make their files available to Moments Lab, where they’re temporarily stored and analysed by MXT, before the generated metadata is sent to an existing DAM, MAM, CMS or other software of the user’s choice.

Metadata generated by other AI indexing tools is typically delivered in formats that are difficult to read or cannot be edited, leaving no opportunity to review or correct it. MXT produces readable, text-based metadata that is fully editable within the Moments Lab platform before it is sent to existing software, ensuring portability, accuracy and transparency.

Developers can access all relevant documentation on the MXT API developer website, api.momentslab.com. More information about Moments Lab is available at www.momentslab.com.