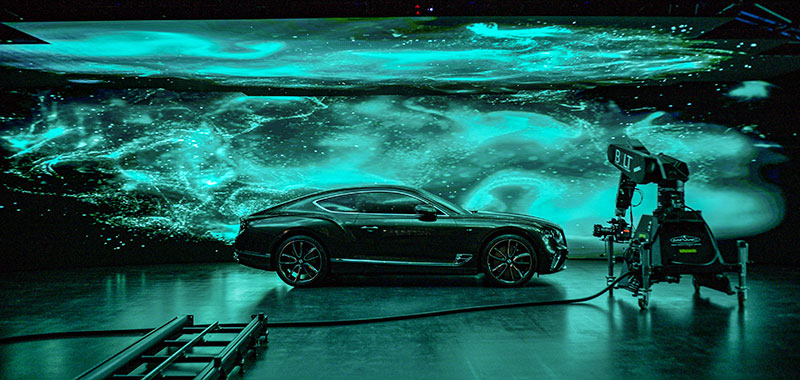

Video producer Henry Sha, operating one of the largest XR stages in the Sydney area, talks about building it up into a place where storytellers turn creative concepts into productions.

With 14 years of video production experience behind him, Henry Sha opened the Mushang XR Stage at his production studio in Shanghai in 2021, and virtual production has been gathering momentum there ever since. The team now takes on more than 40 projects a year on the XR stage, ranging from commercials and TV shows to insert shots for feature films.

Henry most recently travelled to Sydney, Australia, to launch Mushang VFX Lab in late 2024. He became interested in Sydney as a location for a new studio after discovering the post-production and VFX talent working here. “Assembling a skilled and professional crew is always a key challenge,” he said. “Australia has a long-standing reputation for producing high-calibre VFX films and productions. With that dynamic talent pool available to us, we decided on Sydney.”

Mushang – Looking at the Future of Virtual Production

Since virtual production began gaining widespread popularity about five years ago, the technology has evolved to make the process more efficient and straightforward, qualities that Mushang’s clients, who work primarily in the commercial sector, particularly value.

As Mushang VFX Lab continues to explore the Australian market, the team aims to show local production companies the potential of virtual production and how it can expand a studio’s services. Compared with their counterparts in Shanghai, local producers have proven less ready to let go of the idea of shooting on location.

However, since opening, the Sydney studio’s projects have received praise from directors, producers and cinematographers. “They recognize it as the future of production, especially in Australia, where crew costs and overtime expenses are substantial,” Henry said. “Virtual production is a smart, efficient answer to those challenges.”

Located in Alexandria, close to Sydney's city centre, Mushang VFX Lab is now one of the largest permanent virtual production volumes in the area. It has required considerable investment to become a place where storytellers turn their creative concepts into film, broadcast, TV commercials and drama productions.

On our visit to Mushang, Henry’s expertise and experience as a virtual producer were much in evidence. Mushang VFX Lab is built on equipment from ROE Visual, Disguise, Brompton, stYpe and MRMC Bolt, among others, a line-up Henry regards as a ‘golden combination’. “It has been instrumental in countless projects, and we're confident it will continue to drive our success for many more years to come,” he said.

LED Volume

At 18m wide, the volume is impressive to walk into. The rear walls consist of a curved ROE Visual Black Pearl BP2V2 LED screen measuring 18m x 4.5m, plus a 5m x 4m BP2V2 mounted on a removable dolly, with Carbon Series CB5MKII LED ceiling panels overhead. All screens support 8K video. The BP2V2 LED panels are specifically designed for virtual production applications including large-scale in-camera VFX.

ROE Visual and Mushang had collaborated earlier, when the Shanghai Mushang XR Stage was created. That collaboration has proven to be a differentiator for the studio, contributing to its ability to produce high-quality advertising for well-known brands in China, including auto manufacturer SAIC, Unilever Global and the Henkel group, maker of soaps, detergents and adhesives. Those three successful years gave Henry confidence in ROE Visual's support for efficient studio operations.

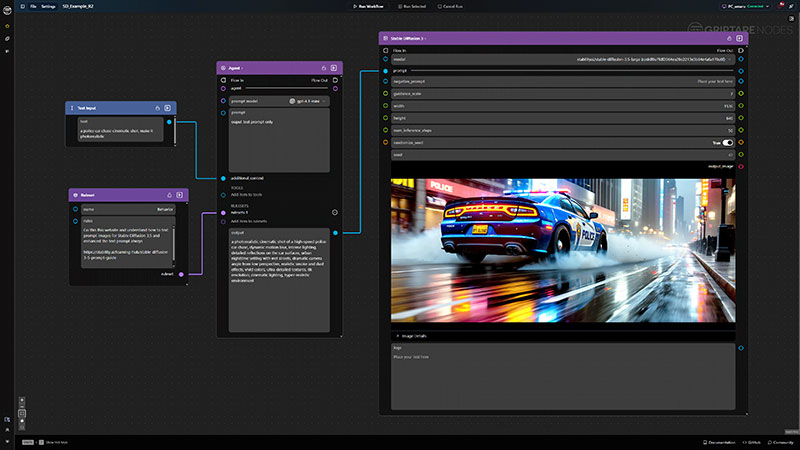

Playback and Rendering

The virtual production system runs on a Disguise VX4 playback server and an RX3 render engine server, capable of displaying 8K imagery, enough to take full advantage of the screens and support accurate natural reflections and dynamic visual effects. The RX3 is especially effective for virtual production because of the level of control it gives users when running complex real-time scenes and hosting third-party render engines. It is driven by Disguise’s RenderStream data transfer protocol, which connects to the render engine and supports cluster rendering for very high-quality video output.
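Cluster rendering spreads one large output across several render machines, each responsible for part of the canvas. As a rough illustration only (the `Strip` and `split_canvas` names are hypothetical, and this is not RenderStream's API or its actual splitting logic), the sketch below divides an assumed 7,680-pixel-wide 8K canvas into contiguous vertical strips, one per render node.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strip:
    """One render node's share of the canvas (hypothetical structure)."""
    node: int
    x_start: int  # first pixel column, inclusive
    x_end: int    # last pixel column, exclusive

    @property
    def width(self) -> int:
        return self.x_end - self.x_start


def split_canvas(canvas_width: int, nodes: int) -> list[Strip]:
    """Divide canvas_width pixel columns into one contiguous strip per node.

    Leftover columns go to the first nodes, so strip widths differ by at most one pixel.
    """
    if nodes < 1:
        raise ValueError("need at least one render node")
    base, extra = divmod(canvas_width, nodes)
    strips, x = [], 0
    for node in range(nodes):
        width = base + (1 if node < extra else 0)
        strips.append(Strip(node, x, x + width))
        x += width
    return strips


for strip in split_canvas(7680, 4):
    print(strip.node, strip.x_start, strip.x_end)
```

With four nodes, each strip is 1,920 columns wide. An equal split by pixel count is the simplest choice; it ignores the fact that some parts of a scene may be far more expensive to render than others.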

Screen content at Mushang varies widely. Alongside high-resolution video, the studio uses CG environments and assets created by 3D artists in both Sydney and Shanghai. The games industry in Shanghai also turns out a huge number of titles, many of which use virtual production, creating a market for Mushang.

Henry talked about the servers in terms of his own clients’ demands. “Commercial projects operate on quick turnaround times, and Disguise’s robust software architecture makes workflows run more smoothly,” he said. “It’s become an industry standard, and after three years of successfully running our studio in China, Disguise has proven its reliability and service quality to the point where we can focus more on studio management.”

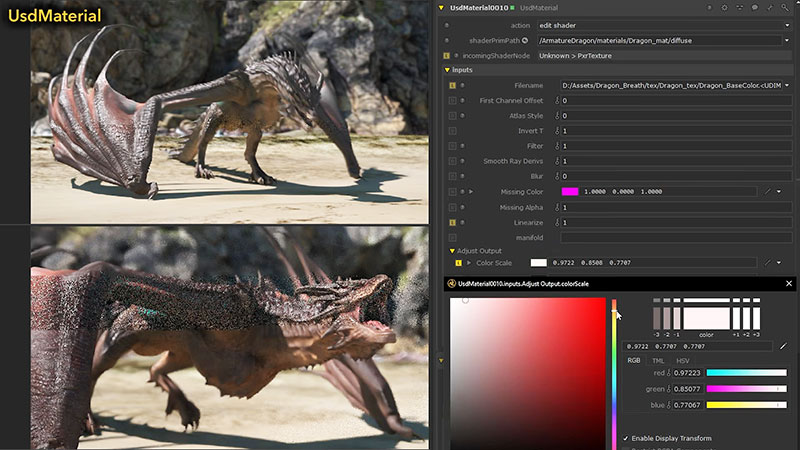

On Set

For shooting in the volume, the crew uses Sony Venice 2, ARRI and RED cameras with Leitz Prime and Cooke Anamorphic FF SF lenses, along with ARRI lighting. Henry and his team use an MRMC Bolt robotic arm for camera motion control in the volume. The Bolt performs precise, repeatable camera movements that can be reused for different shots or effects.

Operators can either control the moves manually or program complex camera moves to automate consistent, repeated motion. Visual effects may need a series of shots recorded with identical camera moves, to be composited together in post. Likewise, multiple takes may need to be captured from exactly the same camera positions to maintain continuity between digital and physical elements.
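What makes a programmed move repeatable is that it is deterministic: the same keyframes always produce the same per-frame camera positions. A minimal sketch, assuming a hypothetical keyframe format of (time, x, y, z) and simple linear interpolation; it is not MRMC's software, and a real motion-control system would use its own data formats, full camera orientation and smoother curves.

```python
from bisect import bisect_right

# Hypothetical keyframe format: (time in seconds, x, y, z in metres).
Keyframe = tuple[float, float, float, float]
Position = tuple[float, float, float]


def position_at(keys: list[Keyframe], t: float) -> Position:
    """Linearly interpolate the camera position at time t from time-sorted keyframes."""
    times = [k[0] for k in keys]
    if t <= times[0]:
        return keys[0][1:]
    if t >= times[-1]:
        return keys[-1][1:]
    i = bisect_right(times, t)  # keys[i - 1] is at or before t, keys[i] is after it
    t0, *p0 = keys[i - 1]
    t1, *p1 = keys[i]
    a = (t - t0) / (t1 - t0)
    return tuple(c0 + a * (c1 - c0) for c0, c1 in zip(p0, p1))


def sample_move(keys: list[Keyframe], fps: float, duration: float) -> list[Position]:
    """Evaluate the programmed move once per frame, from t = 0 to t = duration."""
    frames = round(duration * fps)
    return [position_at(keys, f / fps) for f in range(frames + 1)]


move = [(0.0, 0.0, 1.5, 4.0), (2.0, 1.0, 1.5, 3.0), (4.0, 2.0, 1.8, 3.0)]
take_1 = sample_move(move, fps=25, duration=4.0)
take_2 = sample_move(move, fps=25, duration=4.0)
print(take_1 == take_2)  # identical inputs give identical per-frame positions
```

Because each position is computed from the frame index alone, every pass of the move lands on the same positions, which is what allows separate passes to be composited in post.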

Synchronising Production in 3D Space

Mushang uses RedSpy optical camera tracking, developed by stYpe for live broadcast and film production. Once set up, RedSpy requires no recalibration and works with any camera, including handheld rigs. The device determines its own position using infrared LEDs whose light is reflected back to its sensor by reflective markers that Mushang’s team installs on the ceiling, walls or floor of the set. Because RedSpy is small and its LEDs emit infrared rather than visible light, it is almost invisible in most environments, and with the reflective markers it can be used indoors and outdoors.

To overcome limited camera cable bandwidth, a long-standing problem for optical tracking, stYpe programmed FPGAs to compress the 4K image before sending it over the cable, making tracking faster, more responsive and more accurate. FPGAs suit real-time systems such as film production, where response time is critical, because their processing timing is predictable, whereas response times on conventional CPUs can vary.

Free movement of camera pedestals and wireless connectivity for steadicam and handheld cameras have been other persistent challenges for camera tracking. RedSpy, however, can send its data wirelessly and run on battery power, leaving it completely untethered, which is important for reliable steadicam operation.

Capturing the Camera’s Path

Gaven Lim, a Specialist in Solutions Design for Disguise, described how automating and networking the Bolt robotic arm also allows motion capture of the camera's path and, from there, synchronisation across the entire production system. “As the camera, mounted on the Bolt, moves through physical space, the RedSpy camera tracking device continuously monitors and tracks its precise position and orientation in 3D space. This tracking data includes the camera's XYZ coordinates and its pitch, yaw and rotation,” he said.
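Pose data like this is commonly exchanged in the FreeD protocol's 29-byte "D1" message, which carries pan, tilt and roll (roughly yaw, pitch and the "rotation" Lim mentions) plus X, Y and Z, along with zoom and focus. The decoder below is a sketch based on the commonly documented D1 layout: big-endian signed 24-bit fields, angles in 1/32768 degree, positions in 1/64 mm, and a checksum of 0x40 minus the byte sum, modulo 256. The names `CameraPose`, `parse_d1` and `build_d1` are hypothetical; this is not Disguise or stYpe code, and axis conventions differ between systems, so check the tracking vendor's documentation before relying on it.

```python
from dataclasses import dataclass

D1_TYPE = 0xD1
D1_LENGTH = 29       # type, camera ID, 26 data bytes, checksum
ANGLE_SCALE = 32768  # angle fields are in 1/32768 degree
POS_SCALE = 64       # position fields are in 1/64 mm


@dataclass
class CameraPose:
    camera_id: int
    pan_deg: float    # yaw
    tilt_deg: float   # pitch
    roll_deg: float
    x_mm: float
    y_mm: float
    z_mm: float
    zoom: int         # raw lens encoder value
    focus: int        # raw lens encoder value


def _checksum(body: bytes) -> int:
    return (0x40 - sum(body)) & 0xFF


def _s24(msg: bytes, offset: int) -> int:
    """Read a big-endian signed 24-bit field."""
    return int.from_bytes(msg[offset:offset + 3], "big", signed=True)


def parse_d1(msg: bytes) -> CameraPose:
    if len(msg) != D1_LENGTH or msg[0] != D1_TYPE:
        raise ValueError("not a FreeD D1 message")
    if _checksum(msg[:-1]) != msg[-1]:
        raise ValueError("FreeD checksum mismatch")
    return CameraPose(
        camera_id=msg[1],
        pan_deg=_s24(msg, 2) / ANGLE_SCALE,
        tilt_deg=_s24(msg, 5) / ANGLE_SCALE,
        roll_deg=_s24(msg, 8) / ANGLE_SCALE,
        x_mm=_s24(msg, 11) / POS_SCALE,
        y_mm=_s24(msg, 14) / POS_SCALE,
        z_mm=_s24(msg, 17) / POS_SCALE,
        zoom=int.from_bytes(msg[20:23], "big"),
        focus=int.from_bytes(msg[23:26], "big"),
    )


def build_d1(camera_id: int, pan: float, tilt: float, roll: float,
             x: float, y: float, z: float, zoom: int = 0, focus: int = 0) -> bytes:
    """Encode a D1 message (angles in degrees, positions in mm), e.g. for testing a receiver."""
    def s24(value: float) -> bytes:
        return round(value).to_bytes(3, "big", signed=True)

    body = (bytes([D1_TYPE, camera_id])
            + s24(pan * ANGLE_SCALE) + s24(tilt * ANGLE_SCALE) + s24(roll * ANGLE_SCALE)
            + s24(x * POS_SCALE) + s24(y * POS_SCALE) + s24(z * POS_SCALE)
            + zoom.to_bytes(3, "big") + focus.to_bytes(3, "big")
            + bytes(2))  # two spare bytes
    return body + bytes([_checksum(body)])


pose = parse_d1(build_d1(1, pan=30.0, tilt=-5.5, roll=0.0, x=1000.0, y=250.0, z=1500.0))
print(pose)
```

The checksum check matters in practice because tracking data typically arrives as a continuous stream of small packets, and a corrupted pose would otherwise shift the virtual background for that frame.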

“The Disguise media server receives this camera tracking data, usually via the FreeD protocol, and integrates it with the virtual set rendered in real-time by a 3D render engine like Unreal Engine or Unity. Disguise adjusts the virtual background displayed on the LED screens based on the camera's movements, aligning the virtual set accurately with the camera's perspective.

“For example, if the camera moves closer to or further away from the virtual set, Disguise recalculates the appropriate view and updates the LED walls in real time, so that the virtual environment matches the new camera position and angle.”
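The geometry behind recalculating the view can be illustrated with a single point. This is a simplified sketch under stated assumptions, not how Disguise implements it: the wall is flat and lies in the plane z = 0, the camera is treated as a single point in front of it, and `wall_point` is a hypothetical helper that finds where a virtual object behind the wall must be drawn so that it lines up with its intended 3D position from the camera's viewpoint.

```python
Vec3 = tuple[float, float, float]


def wall_point(camera: Vec3, virtual_point: Vec3) -> Vec3:
    """Where a virtual point must be drawn on a flat LED wall so that, seen from
    the camera, it lines up with its intended 3D position.

    Simplified geometry: the wall is the plane z = 0, the camera is in front of it
    (z > 0) and the virtual point lies "behind" the wall (z < 0).
    """
    cx, cy, cz = camera
    px, py, pz = virtual_point
    if cz <= 0 or pz >= 0:
        raise ValueError("camera must be in front of the wall and the point behind it")
    t = cz / (cz - pz)  # fraction of the camera-to-point ray at which it crosses z = 0
    return (cx + t * (px - cx), cy + t * (py - cy), 0.0)


tree = (2.0, 0.0, -4.0)  # a virtual object 4 m behind the wall
for cam_x in (0.0, 1.0):
    print(cam_x, wall_point((cam_x, 0.0, 4.0), tree))
```

When the camera moves 1 m sideways, the object's image on the wall moves only 0.5 m, so the virtual background shifts at a different rate from the physical foreground. That parallax is what makes the set read as deep. Repeating the calculation for everything in the camera's view, every frame, is a simplified picture of the update Lim describes; a production system works with the full camera frustum, lens data and the mapped geometry of a curved volume.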

Synchronisation of all components on the network – from the camera on the robotic arm to the camera tracking system, to the Disguise media server and the LED processors – is maintained frame-by-frame via genlock. The virtual content is then synced across the pipeline using the RenderStream data transfer protocol.

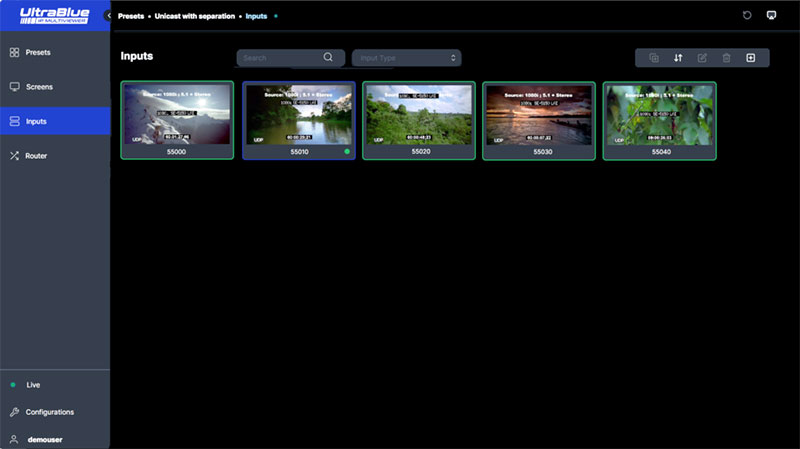

Image Processing and LED Calibration

Image processing, a critical part of the chain, is handled by two Brompton Tessera SX40 4K processors and a Tessera S8 processor, with five Tessera XD 10G distribution units to manage the cabling. The studio's LED setup is calibrated with Brompton's Hydra measurement system. Together, these components have the processing capability to maintain full HDR video quality throughout the system.

Brompton processing and ROE Visual’s panels have proven to be an ideal combination for virtual production at Mushang. To get those two systems working together to create a continuous virtual environment for production, accurate calibration and mapping of the LED volume is required. Proper calibration involves aligning the LED panels to eliminate visible seams or gaps and adjusting colour and brightness uniformly across the entire display.

The Hydra measurement system mentioned above works with Brompton's Dynamic Calibration, an adaptive approach that maintains uniformity while getting the best performance from the panels’ LEDs regarding brightness and colour saturation. Conventionally, a fixed, factory-specified calibration is applied to all content, throughout the life of the panel. As a result, panels may be performing below the brightness they are capable of.
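Why a fixed calibration can leave brightness unused can be shown with a toy model. The sketch below assumes a simple uniformity calibration in which every panel is scaled down to match the dimmest measured one; real calibrations work per LED and per colour channel, the function names are hypothetical, and Brompton's Dynamic Calibration is proprietary, so this is not its algorithm.

```python
def fixed_calibration_gains(peak_nits: list[float]) -> list[float]:
    """Gain per panel for a simple fixed uniformity calibration: every panel is
    scaled to match the dimmest measured panel, whatever content is shown."""
    target = min(peak_nits)
    return [target / p for p in peak_nits]


def unused_brightness(peak_nits: list[float]) -> float:
    """Fraction of the panels' combined peak output given up by that calibration."""
    target = min(peak_nits)
    return 1 - target * len(peak_nits) / sum(peak_nits)


# Illustrative measurements only: one panel 20% dimmer than the other two.
panels = [1500.0, 1500.0, 1200.0]
print(fixed_calibration_gains(panels))
print(round(unused_brightness(panels), 3))
```

In this toy case the two brighter panels are turned down to 80% of their peak, and about 14% of the wall's combined peak output goes unused for all content, including bright scenes that could have used it.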

Flexible and User-Adjustable

In contrast, Dynamic Calibration is flexible. It takes into account the dynamic nature of LEDs and LED panels, and the variations in the content displayed on them. It can be used to calibrate panels fitted with a Tessera R2 or R2+ receiver card and measured using Hydra. Tessera R2 receiver cards contain a Dynamic Engine that processes information in real time, with no added latency, to determine the best possible way to drive each LED.

For example, extremely bright areas of video content make full use of the LEDs’ brightness for maximum visual impact, while smooth areas are displayed very uniformly for image clarity. More subjective areas of content, such as skin tones, are balanced for true-to-life colour. In areas of vivid colour, Dynamic Calibration adapts to use the widest available gamut of the LEDs.

The interactivity of the system means the brightness, primary colours and white point are user-adjustable. What normally requires lengthy recalibration can now be done at any time from the UI on the Tessera processor, with changes reflected in real-time on the screen – even during a live event.

Stable Performance

Since the studio opened in September 2024, the commercial projects it has completed have drawn on Brompton's Tessera feature set for everything from colour and HDR to camera control, latency, brightness and frame rate. The HFR+ (High Frame Rate) feature, for instance, can play video content on an LED screen at up to 250fps. It gives smoother visuals in applications using HFR content, and makes it possible to shoot slow-motion VFX with high-speed cameras against LED screens while keeping the screen and camera in sync.
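The arithmetic behind the slow-motion claim fits in a few lines. In this sketch the helper names are hypothetical and the 25 fps delivery rate is an assumed example, not a figure from Mushang.

```python
def frame_period_ms(fps: float) -> float:
    """Duration of one frame interval, in milliseconds."""
    return 1000.0 / fps


def slow_motion_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times slower than real time footage appears when played back."""
    return capture_fps / playback_fps


print(frame_period_ms(250))          # LED wall updating at the HFR+ maximum
print(slow_motion_factor(250, 25))   # 250 fps footage delivered at an assumed 25 fps
```

At 250fps the wall has to present a new, camera-synchronised frame every 4 ms, and footage captured at that rate and played back at 25fps runs ten times slower than real time.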

This makes HFR+ useful in cinema and broadcast for syncing high-speed cameras with visual effects and virtual sets. “Brompton has been a reliable and steadfast partner for us – in commercial advertising, what we need most is stable performance and efficient application, and Brompton has helped us achieve that,” Henry commented.

mushang.com.au