Adobe Firefly’s Video Model has been updated with improved motion fidelity, new video controls and now sound effects. New generative AI models from external developers are now accessible as well.

Generate Sound Effects

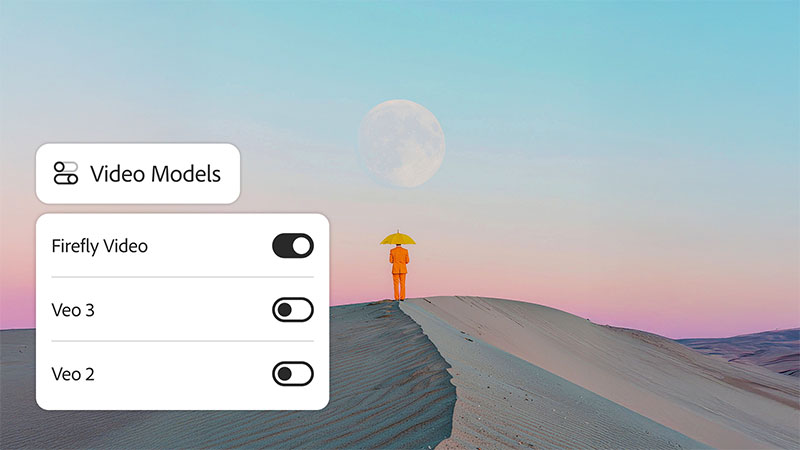

Adobe Firefly’s Video Model has been updated to improve its motion fidelity and video controls, aiming for faster, more precise storytelling workflows. New generative AI models from Adobe’s partner developers are also available within the Generate Video module of Firefly. Users can choose which model works best for their creative requirements across image, video and sound formats.

Also, new workflow tools give users more control over the final video's composition and style, including the ability to apply custom-generated sound effects directly inside the Firefly web app. This creates an opportunity to start experimenting with AI-powered avatar-led videos, for example.

On Firefly for web, Enhance Prompt is a new Generate Video feature designed to produce better, quicker results. Enhance Prompt takes the original input and adds words that may help Firefly better understand the creative intent by, for example, removing ambiguity or clarifying direction.

External Models now Available in Firefly

Adobe Partner Models

Among the primary uses of the Video Model in Firefly is generating dynamic landscapes, from natural scenes to urban environments. The model also performs well with animal motion and behaviour, atmospheric elements like weather patterns and particle effects, and 2D and 3D animation. The Video Model's improved motion fidelity means users’ generated video can now be expected to move more naturally, with smoother transitions and greater realism.

Because creators want to experiment with different styles, Adobe is continuing to expand the range of models accessible inside the Firefly app by adding those of partner companies. Runway's Gen-4 Video and Google Veo3 with Audio have recently been added to Firefly’s Boards module (for generating mood boards), and Topaz Labs' Image and Video Upscalers and Moonvalley's Marey will be launching soon. Generate Video also features Veo3 with Audio. Luma AI's Ray 2 and Pika 2.2, which are already available in Boards, will soon be added to Generate Video as well.

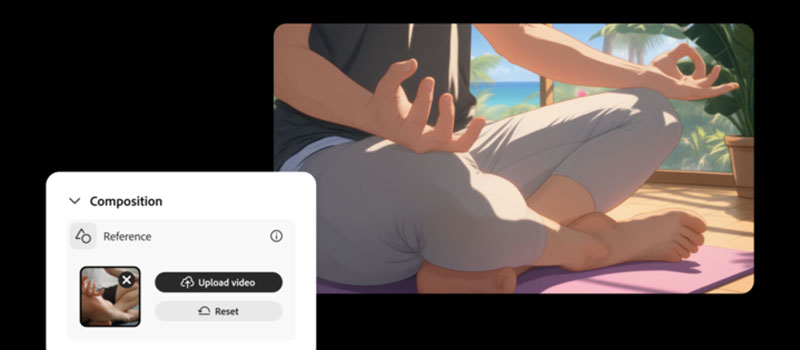

Composition Reference

The intention here is for the user to go from storyboarding to fully animated video outputs inside Firefly, choosing the AI model that best fits each part of the workflow. This helps to keep the artistic style consistent and creative control intact.

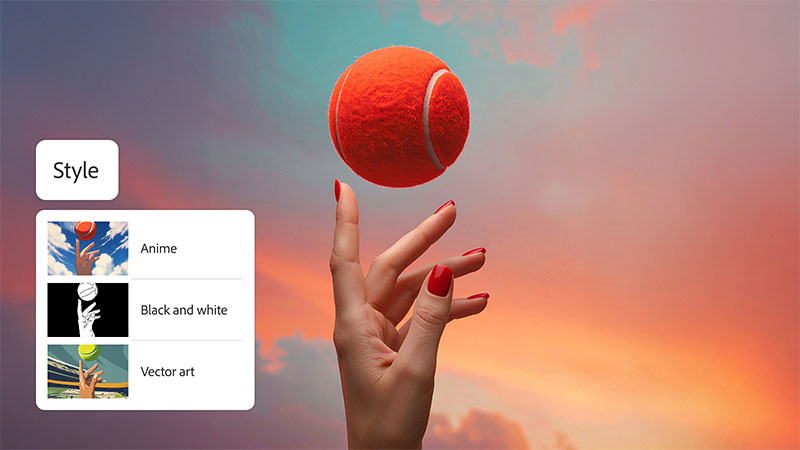

Video Controls

Firefly also has tools for controlling structure and consistency in videos, for example, selecting vertical, horizontal or square aspect ratios to match the project’s format without requiring extra editing. To help with composition, users can also upload a reference video, describe their intention and allow Firefly to output a new video that transfers the original composition to the generated content.

Several style presets are included, such as claymation, anime, line art and 2D, that immediately set the tone. They are useful for concept pitches, creative briefs or pulling elements together before finalising output.

Style Presets

Automated cropping tools may also help maintain a creative vision and save time. Users upload their first and last frames, select how the image will be cropped (landscape, portrait, close-up, long), describe the scene, and Firefly generates a video in which the frames in between fit the same format. This makes jumping between tools unnecessary.

Composition Reference, Style Presets and Keyframe Cropping are the first in a planned series of stylistic tools for Firefly, to be rolled out over time.

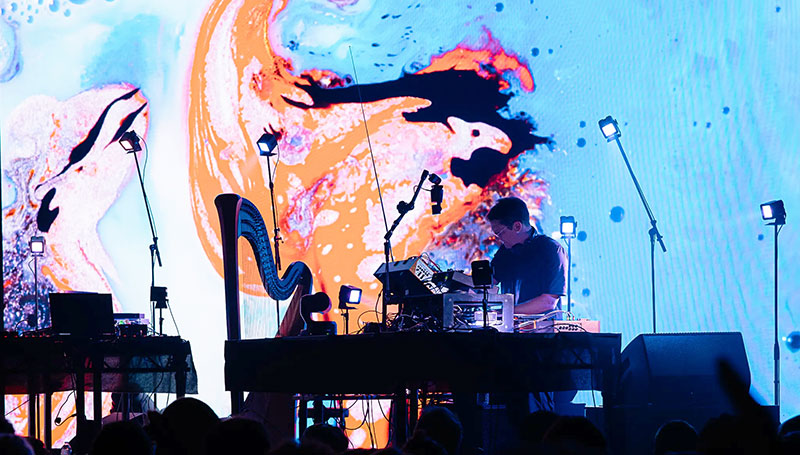

Sound Effects and Virtual Avatars

Generate Sound Effects (beta) is a new tool that layers in custom audio using a prompt. Audio can add cinematic qualities to scenes that need emotion or energy. For more control, the user’s own voice can set the timing and intensity of the sound. Firefly listens to the intensity and rhythm of that voice to correctly place sound effects, matching the action in the video to cinematic timing.

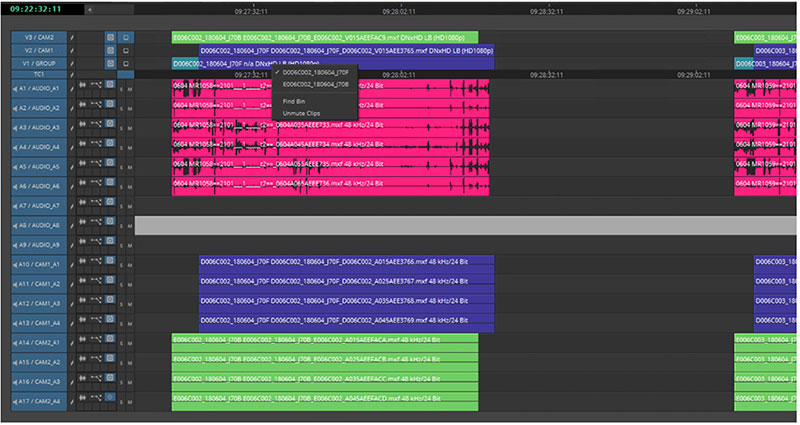

Completed videos can be exported directly to Adobe Express to finish the content for publishing to social channels, or to Premiere Pro to insert the video into an existing timeline.

Text to Avatar

Text to Avatar (beta) turns scripts into avatar-led videos. Users choose from a library of avatars, customise the background with a colour, image or video, and select the accent that best fits the project. Firefly generates the video from there. This tool is useful where speed and consistency matter, such as when delivering content at scale.

Typical applications for Text to Avatar are designing clear, engaging video lessons or FAQs with a virtual presenter, turning blog posts or articles into scalable video content for social media, or developing internal training materials with a human feel.

firefly.adobe.com