Chaos puts Project Arena’s Virtual Production tools to the test in a new short film, achieving accurate ICVFX with real-time raytracing and compositing. Christopher Nichols shares insights.

In September, Chaos Group produced and released Ray Tracing FTW, a short film in the Wild West bank robbery genre that looks into the art and practice of VFX and filmmaking from an artist's point of view. The film is not only very funny – full of inside jokes about green screen, ‘fixing it in post’ and robots – but also serves as the first major test for Project Arena, an upcoming set of virtual production tools that Chaos has been developing. Both the film and a behind-the-scenes (BTS) film are available to watch online.

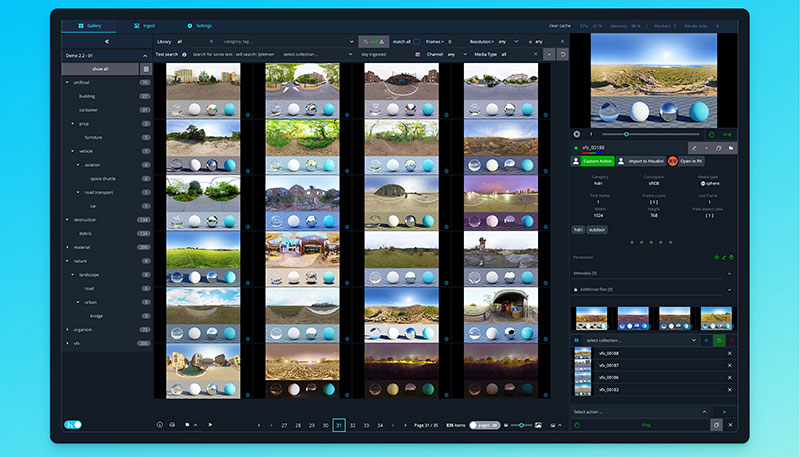

The Chaos Innovation Lab bases these new tools on Vantage, Chaos’ real-time ray tracing software for exploring complex V-Ray 3D production scenes, and is investigating potential applications of Vantage for in-camera visual effects (ICVFX) on virtual production stages.

When Simpler is Better

Christopher Nichols, director of special projects at the Chaos Innovation Lab, took the role of VFX supervisor/producer on Ray Tracing FTW. "Technology is too often a distraction on set. It crashes, doesn't respond fast enough, or requires too many specialists to make it work," he said. "We've been developing Project Arena to change all that, so everyone from the artist to the DP can stop thinking about the technology, and get back to the natural rhythms of filmmaking."

Now in preparation for release as a product, Project Arena works as a faster, simpler alternative to game engines. With Project Arena, artists can move V-Ray assets and selected animations to LED walls in around 10 minutes, without preprocessing, and use real-time ray tracing within pipelines they already know. Real-time on-set adjustments are also possible, and because the tools are production-ready, artists can continue to work with the same assets from pre- to post-production.

Since teams use the same V-Ray assets across all production stages, continuity is also assured and the pipeline becomes simpler. Duplicated work, asset conversion and compensating for changes in quality all become unnecessary, lessening the need for virtual art departments. Overall, the goals are to increase efficiency, reduce production costs, and make virtual production more accessible.

Ray Tracing FTW – The Background Story

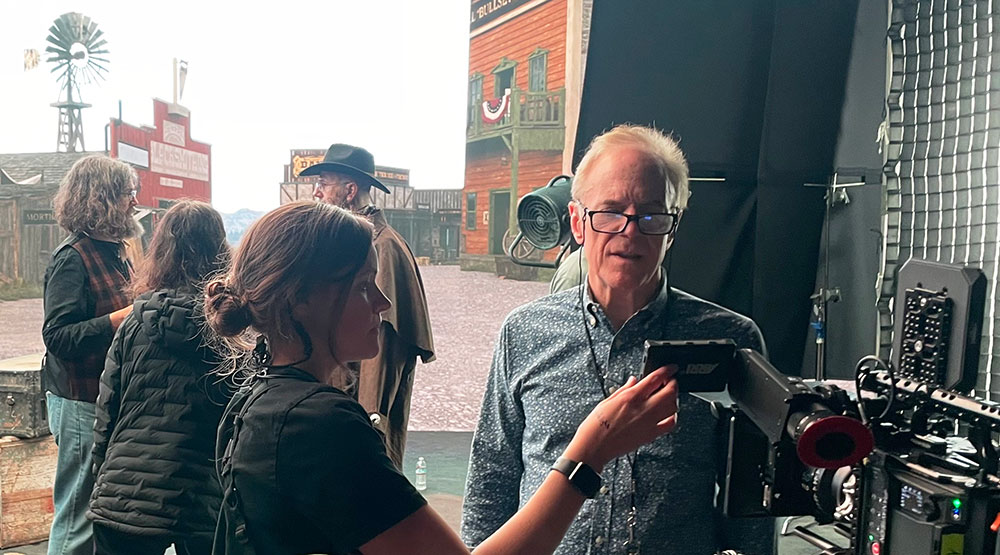

Project Arena brought over 2 trillion polygons to the screen for Ray Tracing FTW, which was shot at Orbital Studios in LA. Over three consecutive days, the team avoided the distracting, time-consuming crashes that frequently occur when game engines are used in virtual production. Having a robust, consistent system helped the team forget the gear and complete about 30 set-ups during a standard 10-hour shoot day. Christopher worked with director Daniel Thron, who is also a writer and VFX artist, and with cinematographer Richard Crudo as director of photography.

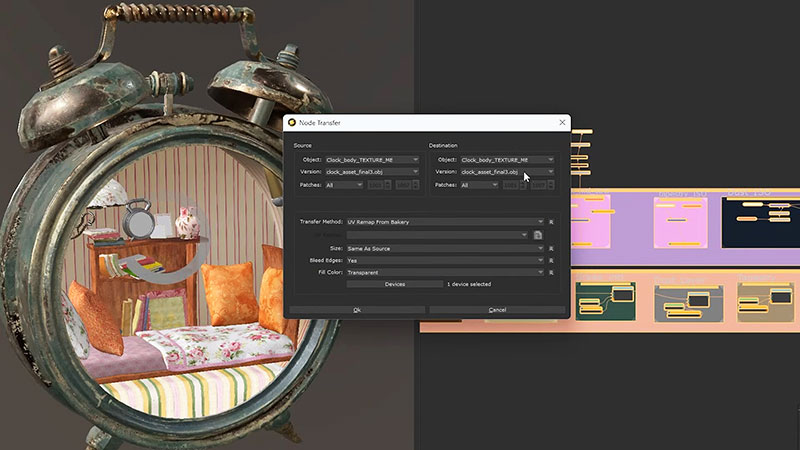

Filling the LED screen on set was a massive V-Ray environment of an Old West town, designed by Erick Schiele and built by The Scope, specialists in creating purpose-built 3D motion backgrounds, with the help of KitBash3D and TurboSquid assets. The production used this environment for everything from all-CG establishing shots and tunnel sequences to the background for a physical train car set, where full ray tracing helped convey subtle story and location details.

Saving Steps

Christopher Nichols commented that, with Project Arena, backgrounds and 3D environments are prepared much as they would be for a traditional post pipeline using 3D asset software like Maya, 3ds Max, Houdini or Blender. “The difference is that the assets don’t need to be converted for a game engine, which can take weeks depending on the kind of assets,” he said. “Most assets can be saved as vrscene files – the native V-Ray file format that comes with V-Ray for Maya, V-Ray for 3ds Max and so on – and those files can be loaded directly into Project Arena.”

Without the conversion step to manage, most 3D artists are actually more qualified to use Project Arena than game engines. All tasks can be completed in Project Arena, bypassing the game engine while minimising latency and dependence on other applications.

“If you look at the bare minimum that needs to be provided for ICVFX with an LED wall, you still need a way to sync real-time rendering across multiple nodes and a camera tracker to render out the frustum (viewing region) of the virtual camera,” said Christopher. “This is exactly what Project Arena does, and combining it with Vantage adds real-time rendering.

“By removing the game engine, we also drastically reduce the overhead needed, the conversion process, the need for a new complex pipeline and the need to train or hire specialised real-time artists. That’s not even counting the massive overhead inherent in a game engine that is only needed for a few simple tasks.”
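The frustum step mentioned above can be illustrated with a little geometry. The following is a minimal sketch only, not Project Arena's implementation: it assumes a single flat LED wall and a tracker that reports the camera's position, forward and up vectors, and it computes where the four corners of the camera's view land on the wall. Every name here (`TrackedCamera`, `frustum_corners_on_wall`) is hypothetical and chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(dot(a, a))
    return scale(a, 1.0 / length)


@dataclass
class TrackedCamera:
    """Hypothetical pose and lens data as a camera tracker might report it."""
    position: Vec3
    forward: Vec3
    up: Vec3
    horizontal_fov_deg: float
    aspect_ratio: float  # sensor width / height


def frustum_corners_on_wall(cam: TrackedCamera,
                            wall_point: Vec3,
                            wall_normal: Vec3) -> List[Vec3]:
    """Intersect the four corner rays of the camera frustum with a flat wall.

    Returns the hit points in the order bottom-left, bottom-right,
    top-right, top-left, as seen from the camera.
    """
    f = normalize(cam.forward)
    r = normalize(cross(f, cam.up))  # camera right
    u = cross(r, f)                  # camera up, orthogonal to f and r
    half_w = math.tan(math.radians(cam.horizontal_fov_deg) / 2.0)
    half_h = half_w / cam.aspect_ratio

    corners = []
    for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
        direction = add(f, add(scale(r, sx * half_w), scale(u, sy * half_h)))
        denom = dot(direction, wall_normal)
        if abs(denom) < 1e-9:
            raise ValueError("corner ray is parallel to the wall")
        t = dot(sub(wall_point, cam.position), wall_normal) / denom
        if t <= 0:
            raise ValueError("corner ray points away from the wall")
        corners.append(add(cam.position, scale(direction, t)))
    return corners
```

In a setup like this, the returned quadrilateral would mark the region of the wall that must be rendered from the tracked camera's exact perspective on every frame, while the rest of the wall mainly contributes lighting and reflections. Curved walls, lens distortion and multi-node synchronisation are outside the scope of the sketch.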

On the Practical Side

Meanwhile, the practical set elements, including the elaborate bank counter, were assembled specifically for use in front of the LED wall, giving the director and DP the ability to light the shots perfectly. The train carriage was designed as a modular set piece, allowing it to be pulled apart for certain shots and repurposed.

About lighting more generally, Christopher said, “The lighting and shaders are set up by the 3D artists in their preferred application. Once loaded in Vantage, everything will come in as it was set up in the original 3D software. Then, when loaded into Project Arena for virtual production, it is still possible to move and change the lighting in real-time, giving control to the director, DoP and operators.

“As with all ICVFX, the wall itself cannot provide all the necessary physical lighting, so you still need a lighting crew to be part of the production. We are currently working on providing additional control of the physical lighting inside of Project Arena, as with DMX lighting, but this is still in the works.”

Being able to blend detailed CG models invisibly with physical sets meant the production could capture nearly every shot in-camera – except of course for the beautiful digital train crashes produced by Bottleship VFX. It also meant that when further assets were suddenly needed – like a 3D hacienda for the final shot – the crew could purchase one online and have it ready for the screen in under 15 minutes.

Creative Compositing

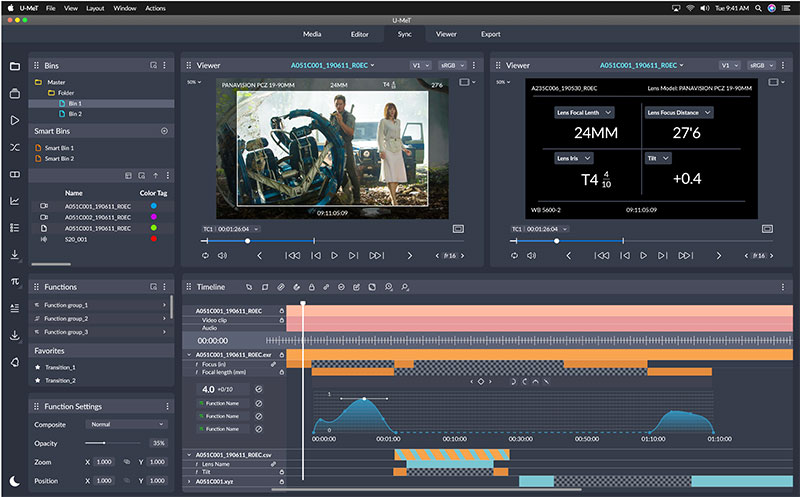

To achieve an old Western look and feel, Richard recorded the project on an ARRI Alexa 35 with Panavision PVintage lenses to replicate the look of the original Ultra Speed lenses used during the 1970s. He shot mainly in the LED volume, and commented that he was continually impressed by how genuine the results looked, even to the naked eye.

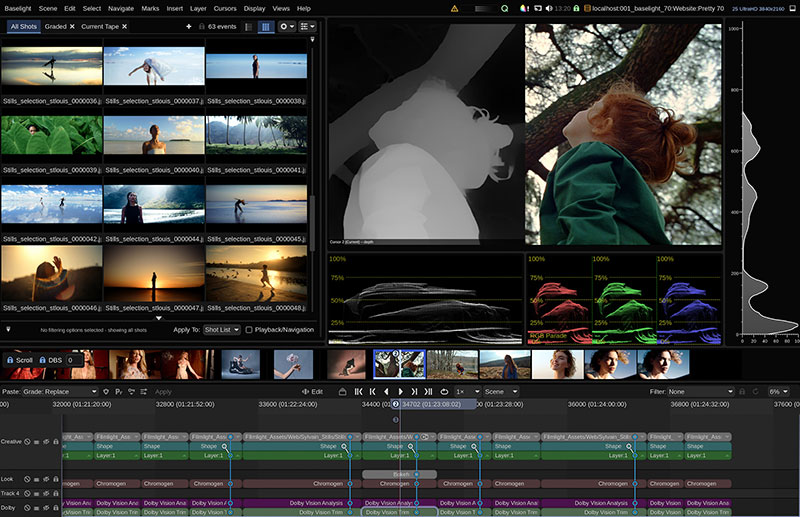

“It was so clear how much quicker and more efficient Project Arena made the compositing process, with a greater opportunity for creativity during the shoot,” said Richard Crudo, the DP. “By carefully managing the colour and density values of the volume with the live foreground action, I was able to bake in the exact look I wanted. The special effects supervisor could also see the look his team was aiming for.”

Further to this ability for the DP to tap into compositing while on set, Christopher said he believes the relationship between filmmaker and the VFX team should be closer, close enough to allow the 3D artist to respond quickly to requests. He said, “Frequently, productions using game engines will send work that needs revising back to 3D artists, who then make changes in their 3D application, convert it to the game engine and finally rebake the lighting to reflect those changes. This could take a day or more to turn around.

“With Project Arena, the 3D artist could be on set, just like any other member of the art department, and make the requested changes right in the 3D application,” said Christopher. “Then they would write out a new vrscene file and load it into Project Arena, which would only add a further 15 minutes on top of the changes inside the 3D application. If the changes only affect the lighting in the scene or positions of certain objects, since all lighting is fully ray traced and real-time, those changes can also happen live and in real time in Project Arena.”

Because Project Arena is itself an extension of Vantage that makes the software work on LED walls, a scene created in Project Arena can also be loaded into Vantage, where the director or DoP is free to explore it. A solid computer and a new graphics card are all that is needed.

Ready for the Next Revolution

"In the 1960s, the studios were in trouble. Audiences were tired of the same old stuff," said Daniel Thron, director and writer of Ray Tracing FTW. "But then you got this wave of young filmmakers coming in with smaller cameras that allowed for naturalistic photography and acting, and that revolutionized Hollywood. We have the opportunity to bring that same sentiment to the next revolution in filmmaking."

The Chaos Innovation Lab is currently seeking production feedback on Project Arena. An important step is making sure the software will work for productions in nearly any type and configuration of LED volume, which involves visiting virtual production facilities and testing the software on their stages. Christopher said, “The two main differences we find are how the different studios have their panels organised, and what tracking system they use for their cameras. Besides that, most are very similar. They use similar computers, top-of-the-line GPUs and Quadro Sync cards.

“But those critical differences mean we still need to set up Project Arena for that system. We are currently looking at finding the simplest way to set up Project Arena and get it working for people right away. As of now, it usually takes us about one day to set up.” www.chaos.com

Below, you can see stills from the film that show how the background asset appears in a range of different shots.