AI video search company Moments Lab formed an integration with Bitcentral, enabling Bitcentral Oasis MAM users to augment media assets with effective, AI-generated metadata.

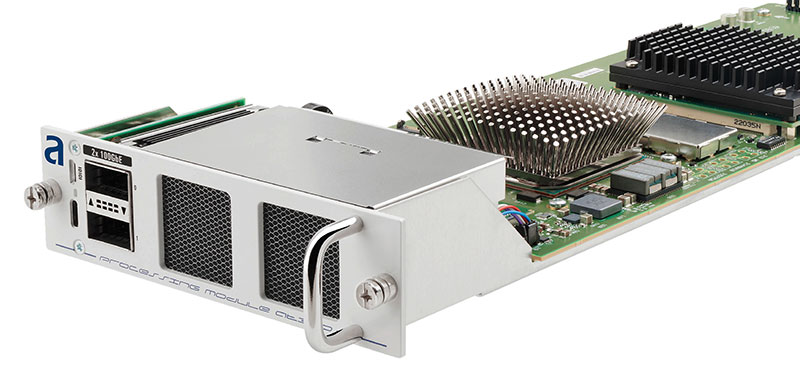

Moments Lab MXT-1.5

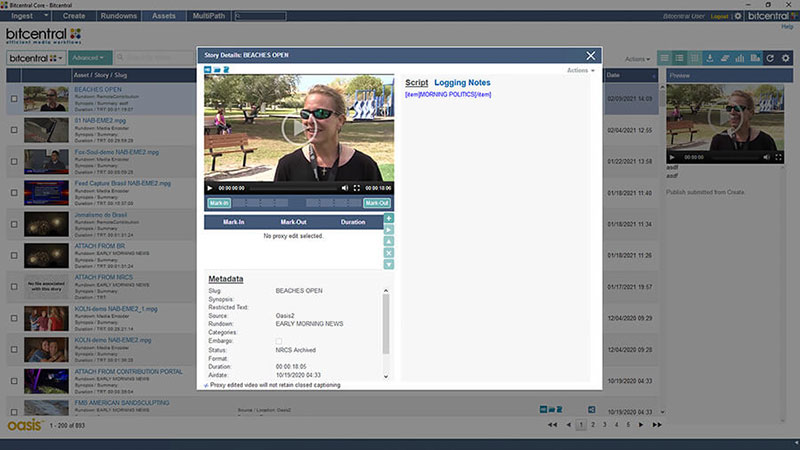

AI and video search company Moments Lab has formed an integration with Bitcentral, enabling Bitcentral Oasis MAM users to augment their media assets with AI-generated metadata while retaining their existing on-premises or hybrid storage architecture.

Moments Lab’s public API can be used to integrate its MXT-1.5 multimodal AI engine (imagery, text, audio, etc.) with Oasis asset management. MXT-1.5 combines what it sees and hears in videos to generate rich metadata, including natural-language descriptions of what is happening in each shot and sequence.

Bitcentral’s collaborative Oasis MAM software is typically used in media workflows for news production, with field-centric tools that connect remote teams directly with TV studios to push stories through production more quickly.

Frederic Petitpont, Moments Lab co-founder and CTO, said, “Integrating our multimodal AI indexing system with Bitcentral’s Oasis MAM puts enhanced content search and discovery at news teams’ fingertips. It means they’ll be able to find the media assets they need almost immediately, to build and publish stories faster than ever.”

Sam Peterson, COO at Bitcentral, said, “We’re excited to add Moments Lab’s stand-out AI indexing tools to our Oasis MAM for customers and deliver further efficiency to news production workflows.”

Moments Lab’s API works by analyzing media files – proxy or original – from on-premises or cloud storage. Media assets are processed and enriched by MXT-1.5, which detects faces, text, logos, landmarks, objects, actions, context, shot types and speech to generate a semantic, human-recognisable description of the content for increased searchability. The output can be reviewed and validated in either Moments Lab or directly in the Oasis MAM. www.momentslab.com
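
To illustrate why per-shot descriptions improve searchability, the following is a minimal Python sketch, not Moments Lab's or Bitcentral's actual API or schema. The `ShotMetadata` record, its field names, the `search_shots` helper and the sample records are all hypothetical and were made up for this illustration. The sketch assumes that each shot carries a text description plus lists of detected elements, and that a search matches a shot when every query term appears somewhere in that combined text.

```python
from dataclasses import dataclass, field


@dataclass
class ShotMetadata:
    """Hypothetical per-shot record; field names are illustrative only."""
    asset_id: str
    start_s: float
    end_s: float
    description: str
    faces: list[str] = field(default_factory=list)
    objects: list[str] = field(default_factory=list)
    speech: str = ""

    def searchable_text(self) -> str:
        # Combine the description, transcript and detected labels into one lowercase string.
        parts = [self.description, self.speech, *self.faces, *self.objects]
        return " ".join(parts).lower()


def search_shots(shots, query):
    """Return shots whose combined text contains every query term (substring match)."""
    terms = query.lower().split()
    if not terms:
        return []
    return [s for s in shots if all(t in s.searchable_text() for t in terms)]


# Made-up sample records, standing in for enriched metadata attached to MAM assets.
SHOTS = [
    ShotMetadata(
        "clip-001", 0.0, 4.2,
        "Wide shot of a reporter standing outside a courthouse",
        objects=["microphone", "building"],
        speech="We are live outside the courthouse this morning.",
    ),
    ShotMetadata(
        "clip-001", 4.2, 9.8,
        "Close-up of a crowd holding signs",
        objects=["sign", "crowd"],
    ),
    ShotMetadata(
        "clip-002", 0.0, 6.0,
        "Aerial shot of a flooded street",
        objects=["car", "water"],
    ),
]

if __name__ == "__main__":
    for shot in search_shots(SHOTS, "reporter courthouse"):
        print(shot.asset_id, shot.start_s, shot.end_s, shot.description)
```

A production system would more likely use semantic or full-text indexing than plain substring matching. The point of the sketch is only that shot-level text gives a search something to match against, along with time codes that locate the result inside the asset.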