‘Afterglow’ was shot on CUBE Studio’s virtual stage while Brompton LED processing synchronised screen-to-camera interaction, for real-time control over colours and brightness.

Towards the end of 2024, director Arvind Jay produced his new sci-fi short, Afterglow, at CUBE Studio’s Virtual Production stage in Berkshire, where he designed and realised an immersive space environment essential to the film’s vision and story.

Arvind comes from Singapore and now lives in the UK. The film mirrors his concerns about leaving a legacy, both in his work and his family life. “My parents worked hard to send me to the UK,” he said. “I want them to understand and appreciate my work.” The story follows a man grappling with loss while striving to create a better life, exploring themes of letting go and leaving behind a positive impact.

Arvind learned and refined his filmmaking skills at Arts University Bournemouth (AUB), where his graduation film Stargazer met with success and was sold to DUST, a platform for sci-fi films. After the success of Stargazer, he embarked on Afterglow, supported by AUB’s Futures Fund, which provided financial backing and access to professional-level equipment.

Virtual Production at CUBE

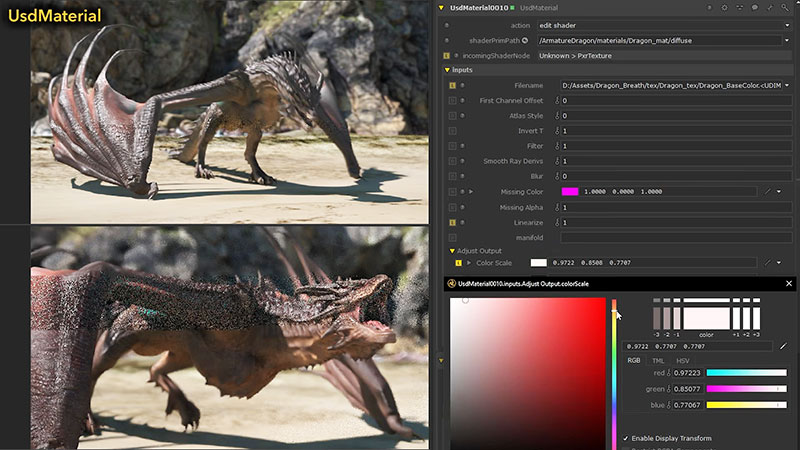

CUBE Studio’s 10.5m by 5.5m Unilumin UpadIV LED volume wall is powered by a Brompton 4K Tessera SX40 LED processor, with three Tessera 10G data distribution units supporting the setup. To create and control the virtual environments that define the story’s sci-fi setting, Arvind’s team used Unreal Engine 3D software and AI visual generation tools for the content.

Brompton’s LED processing synchronised screen-to-camera interaction, allowing them to shoot with precise, real-time control over colours and brightness.

For Arvind and his team, this project was their first experience working in a virtual production environment. CUBE Studio’s team, including Creative Producer Owen Riseley, guided them through the process. Brompton LED processing served as the bridge between the camera and the screen. No camera tracking was used in this project. Instead, the team focused on how movement, both on set and in-camera, would align with the VFX.

Invisible Tracking

“Brompton was used to isolate specific parts of the screen by projecting tracking markers on individual panels,” said Owen. “This allowed our VFX Supervisor to composite elements precisely during post-production, saving time and giving the VFX team a level of flexibility they don't typically have.”

Accurately tracking the position of the camera is critical to virtual production. Typically, visible markers, such as reflective markers, are placed on the set and captured in camera, but this may not suit LED volumes where no surfaces are available to place markers on. SX40 processors can solve this by overlaying markers on the video content being displayed on the LED screen itself.

Frame Remapping is used to display the markers only on output frames that are not visible to the main camera, with those frames synchronised against the camera’s shutter. This prevents the markers from appearing in shot or in reflections.
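The idea can be illustrated with a toy model. The article does not describe how the SX40 implements Frame Remapping internally, so the sketch below is only an assumption-laden illustration: it supposes the LED wall outputs a whole number of frames per camera frame and that the main camera's shutter is open during just one of them. The names `OutputFrame`, `schedule_frames`, `multiplier` and `exposed_slot` are hypothetical and are not part of any Brompton API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class OutputFrame:
    index: int                 # position in the LED output sequence
    show_markers: bool         # True if tracking markers are overlaid
    seen_by_main_camera: bool  # True if the main camera's shutter is open


def schedule_frames(camera_frames: int,
                    multiplier: int = 2,
                    exposed_slot: int = 0) -> List[OutputFrame]:
    """Build an output sequence with `multiplier` LED frames per camera frame.

    Assumption: the main camera exposes only during `exposed_slot`, so
    markers are placed on every other slot and never reach the main camera.
    """
    if multiplier < 2:
        raise ValueError("need at least two output frames per camera frame")
    if not 0 <= exposed_slot < multiplier:
        raise ValueError("exposed_slot must be in range(multiplier)")

    frames = []
    for i in range(camera_frames * multiplier):
        exposed = (i % multiplier) == exposed_slot
        frames.append(OutputFrame(index=i,
                                  show_markers=not exposed,
                                  seen_by_main_camera=exposed))
    return frames


if __name__ == "__main__":
    for frame in schedule_frames(camera_frames=2):
        print(frame)
```

In this model no output frame is both marked and exposed to the main camera, which is the property the article describes: the markers exist on the wall for post-production purposes but stay out of the main shot.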

Total Synchronisation

Owen commented that integrating new techniques like these, both technically and creatively, helps people understand them as a catalyst for creativity. “It opens doors and opportunities that weren’t possible before,” he said. “On this shoot, we managed the pipeline to ensure the bit depth and dynamic range needed to replicate a space environment. With Brompton, the technology becomes almost invisible, but it’s a critical part of that process. Brompton also helped us synchronise everything, from the VFX pipeline to tracking markers, so everything worked in unison.”

The use of LED screens in the VP studio also made the filming process easier for the actors. Arvind could place the actors directly into the actual scene, unlike a green screen approach, which requires actors to imagine their surroundings during filming. With live editing viewable on the LED screen, the cast could see the unfolding action and immerse themselves in the space.

Consistent Images – Panels and Bit Depth

Owen found that the Brompton LED processors consistently delivered the best possible image output at any given moment. He said, “Tessera software features like Extended Bit Depth and PureTone, as well as enhanced functionality for camera inputs, shutter speeds and trackers, helped us simplify the production process.”

Extended Bit Depth typically extends dynamic range by two to three stops on camera, an advantage when filming an LED screen displaying dark, shadowy content. It can accurately display HDR material that contains ultra-low brightness levels by bringing out additional detail in dark areas of the image without affecting peak brightness of the panel – HDR highlights are still displayed to their full potential.

The additional range can also be used to achieve brighter, more realistic lighting – the camera exposure can be increased substantially when using Extended Bit Depth, making the panels, and any lighting from them, appear much brighter on camera.
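To put the “two to three stops” figure in linear terms: each photographic stop is a doubling of light, so n stops correspond to a factor of 2 to the power n. The short sketch below only does that arithmetic; the function name `stops_to_ratio` is illustrative and says nothing about how Extended Bit Depth itself works.

```python
def stops_to_ratio(stops: float) -> float:
    """Convert photographic stops to a linear light ratio (one stop = 2x)."""
    return 2.0 ** stops


if __name__ == "__main__":
    for stops in (2, 3):
        print(f"{stops} stops -> {stops_to_ratio(stops):.0f}x")
```

Two to three additional stops therefore mean roughly four to eight times more usable range between the darkest and brightest levels the camera can distinguish on the wall.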

PureTone compensates for panel types that show a colour cast in darker greys or at low brightness levels, which interferes with accurate colours and true neutral greys. LEDs and their driver chips are also not perfectly linear, meaning that when asked to output a particular brightness, they may not achieve it accurately. As a result, panels that appear matched when displaying full-brightness test patterns can appear unmatched later on when displaying real content with low-light areas.

“All these little things save time and reduce hassle,” Owen said. “For example, being able to add trackers on specific shots and knowing we can place them without causing unnecessary reflections or post-production complications is a truly useful resource.”

www.bromptontech.com