XR Studio WLab is dedicated to expanding the use of virtual production, opening opportunities for first-time virtual production filmmakers to gain skills, experience and access to resources.

New York City XR Studio WLab is dedicated to expanding the use of virtual production, opening opportunities for first-time virtual production filmmakers to gain skills and experience in this field, which is still relatively new and in limited use.

WLab sees the use of virtual production evolving rapidly at studios such as Disney, Amazon and Sony, but finds that it remains a challenge and a luxury for students, artists and independent creators. “We questioned why such a critical and transformative aspect of modern filmmaking should only be accessible to a privileged few, and how we could resolve this imbalance,” said WLab’s Co-founder, Tommy Wu.

With a team of passionate filmmakers from New York University (NYU), School of Visual Arts (SVA), and Savannah College of Art and Design (SCAD), WLab’s vision is not confined to overcoming the cost barriers that prevent emerging artists from accessing tools and expertise. It also extends to expanding the applications of virtual production in filmmaking and to developing comprehensive education opportunities.

Since the studio opened, WLab has partnered with NYU’s Tandon School of Engineering through 'Tandon at the Yard' at the Brooklyn Navy Yard. “This partnership allowed us to facilitate workshops and demos and to open studio times for students from NYU, Parsons School of Design, the School of Visual Arts and the Brooklyn STEAM Center,” Tommy said. “Moreover, we launched an in-house grant programme, empowering first-time virtual production filmmakers to bring their visions to life on our screens.”

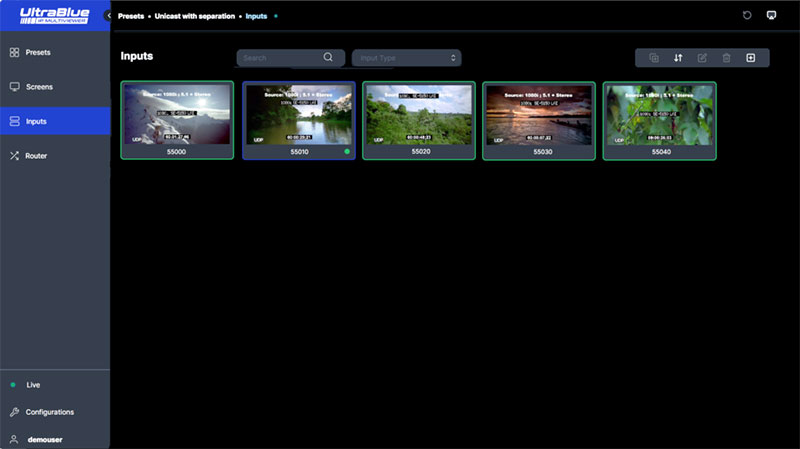

Video Processing and Display

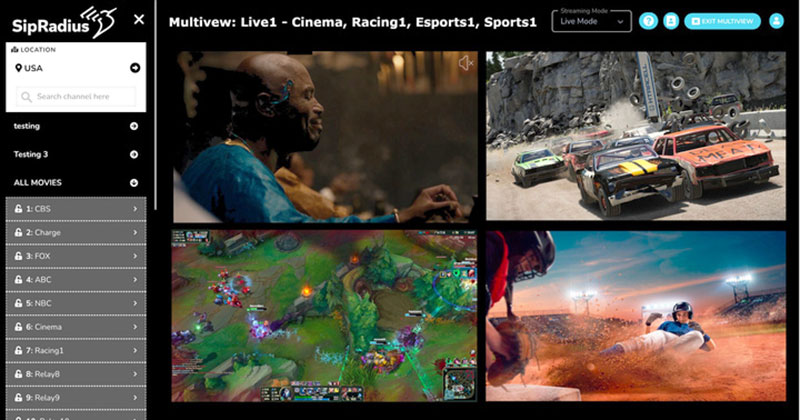

Today, WLab houses a 9m by 3m curved LED wall with a pixel pitch of 2.3mm. The display consists of 132 Pixreal Avatar 2.3 panels arranged in a 22 by 6 configuration. The LED wall is driven by two Brompton Tessera S8 LED processors, chosen for visual quality and performance.

Tessera processing supports HDR and Dynamic Calibration (more information below), as well as extended bit depth and high frame rate. Stacking can also be used to control multiple processors as a single unit. The S8 model accepts a full 4K60 input and has eight 1G outputs, each capable of carrying 525K pixels at 60Hz and 8 bits per colour. It also has an up/down scaler that matches the source to the screen.
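To see roughly why a wall of this size calls for two processors, the figures quoted above can be put through a back-of-the-envelope calculation. The sketch below is only an estimate: it assumes a uniform 2.3mm pitch across the full 9m by 3m surface, ignores the curvature and the actual per-panel resolution (which the article does not state), and treats each output's 525K-pixel limit as freely poolable. The function names are illustrative and are not part of any Brompton software.

```python
import math

# Figures quoted in the article
WALL_WIDTH_M = 9.0
WALL_HEIGHT_M = 3.0
PIXEL_PITCH_MM = 2.3
PIXELS_PER_OUTPUT = 525_000  # per 1G output at 60Hz, 8 bits per colour
OUTPUTS_PER_S8 = 8


def estimate_wall_pixels(width_m, height_m, pitch_mm):
    """Approximate the pixel grid from physical size and pixel pitch.

    Assumption: uniform pitch over a flat surface; curvature and
    panel boundaries are ignored.
    """
    cols = round(width_m * 1000 / pitch_mm)
    rows = round(height_m * 1000 / pitch_mm)
    return cols, rows


def processors_needed(total_pixels,
                      pixels_per_output=PIXELS_PER_OUTPUT,
                      outputs=OUTPUTS_PER_S8):
    """Minimum processor count if output capacity could be pooled freely."""
    capacity_per_processor = pixels_per_output * outputs
    return math.ceil(total_pixels / capacity_per_processor)


cols, rows = estimate_wall_pixels(WALL_WIDTH_M, WALL_HEIGHT_M, PIXEL_PITCH_MM)
total = cols * rows
print(f"Estimated canvas: {cols} x {rows} = {total:,} pixels")
print(f"Capacity per S8: {PIXELS_PER_OUTPUT * OUTPUTS_PER_S8:,} pixels")
print(f"Processors needed (estimate): {processors_needed(total)}")
```

Under these assumptions the wall comes to a little over 5 million pixels, more than the roughly 4.2 million that a single S8 can drive at 60Hz and 8 bits per colour, which is consistent with the two-processor set-up. In practice the split also depends on how panels are cabled to each output.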

The S8 maintains an ultra-low latency of one frame, making it easier to synchronise live action with visual effects, virtual sets and cameras. High frame rate compatibility allows video content to play on an LED screen at up to 250 fps while controlling motion artefacts and input lag in fast-moving content.
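As a rough illustration of what one frame of latency means in time, the duration of a frame is simply the reciprocal of the frame rate. The short sketch below assumes the latency is counted in frames at the rate the content is running; the helper name is illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of a single frame in milliseconds at the given frame rate."""
    return 1000.0 / fps


# Common cinema rate, the S8's 4K60 input rate, and the 250 fps ceiling quoted above
for fps in (24, 60, 250):
    print(f"{fps} fps: one frame lasts {frame_time_ms(fps):.2f} ms")
```

At 60 fps a single frame lasts about 16.7 ms, while at 250 fps it drops to 4 ms, which is why higher frame rates help reduce perceived lag in fast-moving content.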

Screen Calibration

The screens have also been calibrated with the Hydra measurement system to make them Brompton HDR-ready. Hydra works with Brompton’s Dynamic Calibration technology to calibrate each area of the panel. Typically, panels receive a fixed, factory-specified calibration that is used for all content over their lifetime, which may prevent them from reaching the brightness they are capable of.

Dynamic Calibration is more flexible. It works with Brompton R2 receiver cards and processes image information in real time, without adding latency. It intelligently determines the optimum way to drive each LED for the images in use.

The dynamic approach means the panels can adapt, for instance, to images with extremely bright areas by making full use of the LED’s brightness. It adapts to vivid areas by accessing the maximum available gamut of the LEDs. Smooth areas of content are displayed with precise uniformity for greater clarity, and sensitive regions such as skin tones are balanced for a natural look. Meanwhile, Dynamic Calibration’s colour processing keeps the video content looking uniform, out to the extremes of brightness and colour gamut.

Camera Tracking

The new studio employs up-to-date, simplified camera tracking with VIVE Mars, capturing motion and interaction precisely. Mars consolidates the complete camera tracking workflow into a compact system that makes pipelines faster and more cost-effective. It uses existing VIVE VR tracking and combines it with genlock and timecode support, both of which are required for professional productions.

Regarding lens calibration, often the most time-consuming aspect to finalise, Mars also tracks the calibration board, adding flexibility and fault tolerance to this task. VIVE Mars CamTrack supports up to three tracking devices on set to track the camera, props and lights that will shine in both the physical and virtual world.

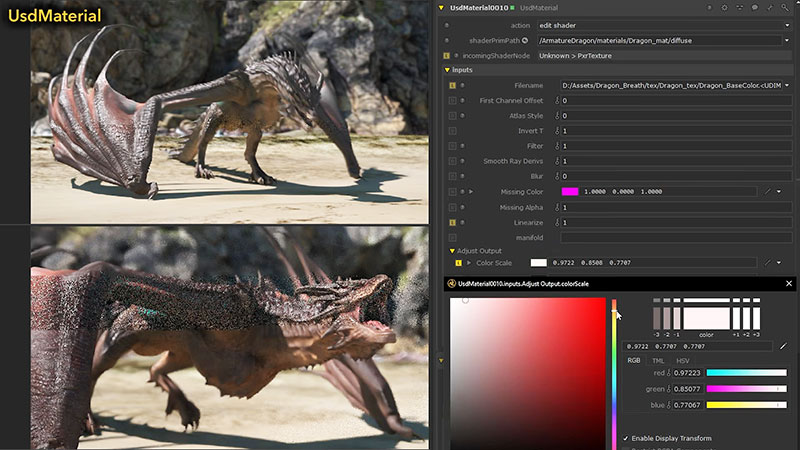

The studio set-up includes a RED KOMODO camera, Unreal Engine for real-time 3D creation and an NVIDIA A6000 graphics card, all of which contribute to WLab’s ability to produce immersive, interactive experiences.

Rayne Feng, Sales & Marketing Director at Pixreal, said, “We were excited to partner with WLab for their New York City XR Studio. With virtual production generating such change in filmmaking, accurate lighting, colour and reflections are essential for creating ultra-realistic visuals on LED screens that blend invisibly with physical reality. Our Avatar series of panels was designed for virtual production applications, featuring a system and hardware design that delivers extremely accurate colour rendition, pinpoint greyscale accuracy and HDR capability.”

About WLab’s commitment to production services and nurturing the future of virtual production, Tommy notes that, having established themselves as a full-service virtual production hub, they can now focus on making these resources available to artists, students and productions of any size throughout New York City.

www.bromptontech.com