Maxon Cinebench 2026 Assesses New NVIDIA, AMD and Apple Hardware

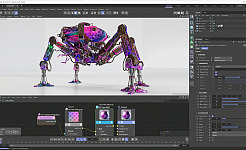

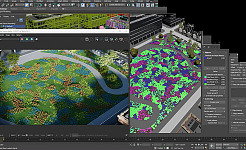

Maxon's new release of Cinebench is built on the latest version of the Redshift rendering engine. It adds support for new NVIDIA and AMD GPUs and for Apple Silicon M4 and M5 processors.
